Energy Consumption Power Aware Data Delivery in Wireless Network

Received 8 May 2016; accepted 20 May 2016; published 2 August 2016

1. Introduction

Most studies of wireless networks focus mainly on system performance and on the power consumption of circuit systems. Many studies address the hardware aspects of energy efficiency in mobile communications, such as low-power electronics, power-down modes, and energy-efficient modulation [1] [2]. Because of fundamental hardware limitations, however, further progress in energy efficiency will increasingly become an architectural and software-level issue. The communication protocols used for wireless communication can play a major role in energy consumption and in other important factors. Portable devices such as Personal Digital Assistants (PDAs) depend heavily on efficient energy consumption (power control) in wireless networks. In general, each node consumes some amount of energy; these amounts aggregate across the network and contribute negatively to the overall outcome. Today many applications are supported by wireless environments in different situations, and these applications are generally concerned with QoS, routing, virtualization and reliability. QoS provides services across different technologies, including relaying, the transfer mode of a node, 802.11 networks and others. Routing chooses better paths and can also handle traffic control. Operating system virtualization can play a vital role in managing network resources, and reliability can be assessed by measuring the consistency of values across similar portions. The ultimate aim of this paper is to reach these goals through the DDN (Data Delivery Network) algorithm.

The remainder of this paper covers related work (including virtualization and graphical terminals), existing work, the system architecture and design, the DDN algorithm, simulation results, and the conclusion.

2. Related Work

Wireless computing, as considered here, is the environmentally sustainable use of computers and related resources, where each resource communicates efficiently and effectively within the network system. The main goals are to reduce the use of hazardous materials, maximize energy efficiency [3] - [5] during the product's lifetime, and promote the recyclability or biodegradability of defunct products and factory waste. Computers are now used everywhere (schools, offices, etc.), and their number grows day by day; people have recognized the problem, and measures are being taken to minimize the power usage of computers. Wireless computing has introduced a range of equipment and technologies that help limit the environmental impact of energy use. Energy consumption and carbon footprint can be reduced by separating data and GUI using the thin-client [6] [7] concept of a terminal card. We target the high energy consumption of industry, where end users tend to conserve resources on their computing devices while having essentially zero knowledge of the server resources. The approach is mainly intended to raise people's awareness.

The GUI (Graphical User Interface) splits the data into two parts (client side and server side), each of which can support and interact with any registered node in the dynamic access network system. A system administrator can consolidate many nodes (systems) as virtual machines on a single system. The terminal may act as a centralized server for the end user. The environment can be brought together through thin clients [8] - [10] with the DDN framework, resulting in lower energy costs and higher energy efficiency. For example, consider PowerPoint as the source application used to develop documentation: the PDF format saves more energy than the PPT format. Typical terminal server software includes Terminal Services (Windows) and Linux Services (Linux).

2.1. Virtualization

Computer virtualization refers to running logical (virtual) computer systems on physical hardware. A computer administrator can consolidate several physical systems as virtual machines on a single physical machine, which yields a powerful system. Virtualization can provide a single function that combines many data usages. Each data center can install a virtualized infrastructure that supports several applications on the operating system while reducing energy consumption. There are different types of virtualization, i.e., server virtualization, application virtualization, network virtualization, storage virtualization and desktop virtualization. End users can work on thin-client machines.

2.2. Graphical Terminal

A graphical terminal can display images as well as text. Graphical terminals operate in two modes: vector mode and raster mode. In vector mode, the display draws lines on the face of a cathode-ray tube under the control of the host computer system. Lines are formed continuously, but the speed of the electronics is limited, and this mode is no longer used. Raster mode scans the picture, and the visual element is a rectangular array of picture elements (pixels). Since a raster image is perceptible to the human eye as a whole only for a very short time, the raster must be refreshed many times per second to give the appearance of a persistent display. Today most terminals are graphical, i.e., they can show images on the screen. The modern term for a graphical terminal is “thin client”. It typically uses a protocol such as X11 for Unix terminals or RDP for Microsoft Windows. The bandwidth needed depends on the protocol used [11], the resolution, and the color depth. Modern graphical terminals can display images in color and text in varying sizes, colors, and fonts. In the early 1990s, an industry consortium attempted to define a standard that would allow a single CRT screen to implement multiple windows, each behaving as a distinct terminal.
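The dependence of bandwidth on resolution and color depth can be made concrete with a back-of-the-envelope bound. The sketch below computes the raw bit rate needed if every pixel were resent, uncompressed, on every refresh. The function name and the 1024 × 768, 24-bit, 60 Hz settings are illustrative assumptions; real protocols such as X11 and RDP send drawing commands or compressed screen updates, so they need far less than this bound.

```python
def raw_display_bandwidth_bps(width_px: int, height_px: int,
                              color_depth_bits: int, refresh_hz: int) -> int:
    """Upper bound: bits per second to resend every pixel on every refresh, uncompressed."""
    return width_px * height_px * color_depth_bits * refresh_hz


# Hypothetical terminal settings, for illustration only.
bps = raw_display_bandwidth_bps(1024, 768, 24, 60)
print(f"{bps / 1e9:.2f} Gbit/s")  # 1.13 Gbit/s
```

Because the bound is a plain product, halving the color depth or the refresh rate halves it, which is why thin-client protocols expose both as tuning options.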

3. Existing Work

Efficiency Algorithm

An efficient algorithm is characterized by the resources it needs to execute: its running time as a function of input length (time complexity) and its storage requirement (space complexity). By comparison, the Slack Reduction Algorithm (SRA) executes tasks at lower frequencies. Linear algebra algorithms can take very different approaches to saving energy, using a power-aware simulator and scheduling the execution; the results of these procedures are obtained with an energy-aware simulator in charge of scheduling or mapping the execution of tasks onto the cores. The Integer-bit Power Allocation Algorithm (IPAA) is an optimal algorithm that computes the bit allocation for a given channel. It can be used to solve both the data-rate maximization and the margin maximization problems, and it consumes little energy compared with other approaches. duEDF is a dynamic task-scheduling algorithm in which the combination of CPU energy and device energy is considered, as in CuSYS and duSYS. The CTRB and EPCLB algorithms show maximum power consumption varying from 10% to 40.3%.
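Running tasks at a lower frequency saves energy only when there is slack before the deadline. The sketch below shows the generic slack-reclamation idea behind frequency-scaling schedulers; it is not the SRA from the cited work. It assumes a discrete set of frequency levels, a known cycle count per task, and the common CMOS approximation in which dynamic power grows roughly as f³ when voltage is scaled with frequency, so energy per task grows as f². The function names and the constant `k` are hypothetical.

```python
def pick_frequency(cycles: float, deadline_s: float, freqs_hz: list[float]) -> float:
    """Slack reclamation: the lowest available frequency that still meets the deadline."""
    for f in sorted(freqs_hz):
        if cycles / f <= deadline_s:      # execution time at this frequency
            return f
    raise ValueError("deadline cannot be met even at the highest frequency")


def dynamic_energy_j(cycles: float, f_hz: float, k: float = 1e-27) -> float:
    """Assumed model: P = k * f**3 and t = cycles / f, so E = P * t = k * cycles * f**2.

    k is an illustrative constant, not a measured value."""
    return k * cycles * f_hz ** 2


freqs = [0.5e9, 1.0e9, 2.0e9]                     # hypothetical DVFS levels (Hz)
f = pick_frequency(1e9, 1.0, freqs)                # 1e9 cycles due within 1 s
ratio = dynamic_energy_j(1e9, 2.0e9) / dynamic_energy_j(1e9, f)
print(f / 1e9, "GHz; running flat out would cost", ratio, "times the energy")
```

Under this model, finishing exactly at the deadline at 1 GHz instead of idling after a 2 GHz burst cuts dynamic energy by a factor of about four.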

The Design for Disassembly (DFD) algorithm addresses an essential issue in product end-of-life management. It involves two major procedures: disassembly analysis (DA) and disassembly process planning (DPP). DPP is a “bottom-up” design approach that considers the number, type and nature of connections, details of parts, the assembly, etc., whereas in a “top-down” approach DA can be a single crucial procedure during product creation. DFD uses a wave propagation algorithm to find a disassembly sequence that minimizes the disassembly cost. DFD has been studied intensively [12] [13], but some problems remain: it cannot find the 'best' DPP when a composite factor such as mass, weight and disassembly directions is involved, and the “graph splitting” algorithm needs to be modified to suit conceptual design and the “top-down” paradigm. To summarize, two conceptual design issues are discussed in that work from a green design perspective. First, a specific knowledge base with drag-and-drop functionality for mechanical kinematic design is introduced. Second, an object-oriented assembly data structure is exploited to support product life-cycle information. DFD analysis and planning are developed to facilitate the design process and help find preferred design ideas.
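The idea of wave propagation for disassembly can be sketched as follows: starting from the target part, waves spread outward through the parts that block it, and parts are removed from the outermost wave inward. This is a simplified illustration under stated assumptions, not the cited DFD algorithm: the blocking relation `blocked_by` is hypothetical input, it is assumed to be acyclic, and removal cost is not minimized. A part's wave number is taken as the longest blocking chain from the target, which guarantees that every blocker is removed before the part it blocks.

```python
def disassembly_waves(blocked_by: dict[str, set[str]], target: str) -> list[list[str]]:
    """Group parts into waves; blocked_by[c] = parts that must be removed before c."""
    level: dict[str, int] = {}

    def propagate(part: str, depth: int) -> None:
        if level.get(part, -1) >= depth:
            return                              # already reached by a longer chain
        level[part] = depth
        for blocker in blocked_by.get(part, ()):
            propagate(blocker, depth + 1)       # the wave moves one step outward

    propagate(target, 0)
    waves: dict[int, list[str]] = {}
    for part, depth in level.items():
        waves.setdefault(depth, []).append(part)
    return [sorted(waves[d]) for d in sorted(waves)]


def removal_sequence(blocked_by: dict[str, set[str]], target: str) -> list[str]:
    """Remove the outermost wave first and the target last."""
    return [p for wave in reversed(disassembly_waves(blocked_by, target)) for p in wave]


# Hypothetical assembly: a screw holds the cover, the cover holds the bearing,
# and both the bearing and the cover block the shaft.
parts = {"shaft": {"bearing", "cover"}, "bearing": {"cover"}, "cover": {"screw"}}
print(removal_sequence(parts, "shaft"))  # ['screw', 'cover', 'bearing', 'shaft']
```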

4. Architecture and System Design

The data card has two modules: the first (the data node) is connected to the microprocessor, and the second is connected to the graphical user interface. The architecture focuses mainly on long-term product efficiency, algorithmic efficiency, resource allocation, virtualization and power consumption.

$$\text{Data Quality} = \frac{\text{Data Transferred} \,/\, \text{Render Time}}{\text{Ideal Transfer}}$$

The ratio is evaluated twice: once with all three quantities measured in aggregate fps and once with them measured in atomic fps.

For a resource (ri) at any given time

the utilization (Ui) is defined as

Ui = n/tj

J = 1

where

n ―the number of tasks at running time,

Ui―the usage of resources of a task

Tj―minimum process of energy pmin

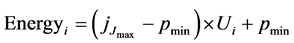

The energy consumption Ei of a resource ri at any given time is defined as
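The two resource-level quantities can be computed directly. The sketch below assumes the linear model $E_i = (p_{\max} - p_{\min}) U_i + p_{\min}$, with $U_i$ the sum of the usage fractions $u_{i,j}$ of the $n$ tasks running on $r_i$; the function names and the 100 W / 250 W figures are hypothetical. Strictly, $E_i$ in this form is an instantaneous power in watts; energy over an interval would be obtained by summing it over time.

```python
def utilization(task_usages: list[float]) -> float:
    """U_i = sum_{j=1..n} u_{i,j}: usage fractions of the n tasks running on resource r_i."""
    u = sum(task_usages)
    if u > 1.0 + 1e-9:
        raise ValueError(f"resource over-committed: U_i = {u:.3f}")
    return u


def energy(u_i: float, p_min: float, p_max: float) -> float:
    """E_i = (p_max - p_min) * U_i + p_min: linear between minimum-load and peak power."""
    if not 0.0 <= u_i <= 1.0 + 1e-9:
        raise ValueError("utilization must lie in [0, 1]")
    return (p_max - p_min) * u_i + p_min


u = utilization([0.25, 0.5])                     # two hypothetical tasks on one resource
print(u, energy(u, p_min=100.0, p_max=250.0))    # 0.75 212.5
```

Note that an idle but powered-on resource still draws $p_{\min}$, which is why consolidating load onto fewer resources saves power.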

Figure 1 shows the interaction between the GUI and the server. The server maintains both the GUI and the data at all times, which is one of the main difficulties for client-side access: the client cannot perform well at run time. This is the major drawback of the existing work. In general, the Windows operating system provides a thin-client setup through the embedded Windows environment. It includes the core operating system and the minimum set of catalog items needed to support an embedded-Windows-powered thin client, including a resource-constrained shell and the Remote Desktop Protocol. The major challenges of this setup are decreased performance, increased power consumption and decreased efficiency.

Figure 2 focuses on GUI (Graphical User Interface) performance on the client side, where many operations are performed (insertion, deletion, copying, moving and control operations), while on the other side the server stores and retrieves the data. Our main aim is to generate a GUI that splits third-party data into two parts (client side and server side) and lets every user interact at any node of the system. A system administrator can consolidate many nodes (systems) as virtual machines on a single system, and the terminal may act as a centralized server for the end user. This environment is brought together through thin clients with the DDN framework; separating data from the graphical user interface increases performance and efficiency and reduces power consumption.
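The client/server split in Figure 2 can be illustrated with a toy in-process model: the client-side GUI keeps no data and only redraws whatever the server returns, while the server owns the data and executes the insert, delete, copy, move and retrieve operations. The class names, the operation strings and the "screen as a sorted list of keys" are hypothetical; in a real thin-client deployment the call would cross the network over a protocol such as RDP or X11.

```python
class DataServer:
    """Server side: owns the data and executes every operation on it."""

    def __init__(self) -> None:
        self._docs: dict[str, object] = {}

    def handle(self, op: str, key, value=None) -> list[str]:
        if op == "insert":
            self._docs[key] = value
        elif op == "delete":
            self._docs.pop(key, None)
        elif op == "copy":
            self._docs[value] = self._docs[key]        # value is the destination key
        elif op == "move":
            self._docs[value] = self._docs.pop(key)
        elif op != "retrieve":
            raise ValueError(f"unknown operation {op!r}")
        return sorted(self._docs)                      # the "screen" sent back to the client


class ThinClientGUI:
    """Client side: holds no data, only the last screen the server returned."""

    def __init__(self, server: DataServer) -> None:
        self.server = server
        self.screen: list[str] = []

    def user_action(self, op: str, key, value=None) -> list[str]:
        self.screen = self.server.handle(op, key, value)   # forward input, redraw result
        return self.screen


gui = ThinClientGUI(DataServer())
gui.user_action("insert", "report", "draft")
print(gui.user_action("copy", "report", "backup"))  # ['backup', 'report']
```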

In Figure 3, the thin client is a lightweight machine that splits the data and GUI for each individual user node and moves operations (control operations) into a virtualization environment. It depends heavily on another node (its server) to fulfil its computational roles; the server is a computer designed to take on these roles itself. The specific roles assumed by the server vary, from hosting a shared set of virtualized applications, a shared desktop stack or a virtual desktop, to data processing and file storage on the client's or user's behalf. Thin clients are components of a broader computing infrastructure in which many clients share their computations with a server. The server-side infrastructure uses wireless computing software such as the Data Delivery Network (DDN). This combination forms what is known today as a wireless-based system, in which desktop resources are centralized in one or more data centers. The benefits of centralization are hardware resource optimization, reduced software maintenance, and improved security. Through the DDN algorithm, the paper aims to achieve these benefits.

![]()

Figure 1. Existing basic interaction with GUI and server.

![]()

Figure 2. Proposed system: separation of data and GUI.

![]()

Figure 3. Split data and GUI with a thin client (centralized server).

In thin-client network computing, client machines (systems connected to the network) are served by a centralized server. The central server performs most of the computing tasks, stores the data and hosts all the applications. Outdated desktop machines can still run the applications, and the footprint is controlled by virtualization technology, which saves both hardware and software resources by creating virtual operating systems or peripheral devices. After the data and GUI are separated at the terminal server, the data is handled on the server side and the GUI runs on the client side (the thin-client concept). When the virtualized application [14] is no longer needed, the split data can be restored to its original state, and power is saved. Finally, the system comprises a data library, an origin media server and edge streaming servers. The data library and origin server are usually co-located at the same physical site. The data library stores the video content. The origin server provides the online service portal to end users and performs resource scheduling, billing and other service logic. The edge servers are distributed across different sites to stream video to their local end users.
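The origin/edge arrangement above is what makes the local cache hit ratio matter: every hit at an edge site is a request the origin server does not have to serve. The sketch below models one edge server as a least-recently-used (LRU) cache of video segments in front of the origin. LRU eviction, the segment granularity and the `EdgeServer`/`origin` names are assumptions for illustration; the paper does not specify a caching policy.

```python
from collections import OrderedDict
from typing import Callable, Hashable


class EdgeServer:
    """LRU cache of video segments at one edge site; misses are fetched from the origin."""

    def __init__(self, capacity: int, origin: Callable[[Hashable], bytes]) -> None:
        self.capacity = capacity
        self.origin = origin                      # hypothetical origin-fetch function
        self.cache: OrderedDict = OrderedDict()
        self.hits = 0
        self.requests = 0

    def get(self, segment_id: Hashable) -> bytes:
        self.requests += 1
        if segment_id in self.cache:
            self.hits += 1
            self.cache.move_to_end(segment_id)    # mark as most recently used
            return self.cache[segment_id]
        data = self.origin(segment_id)            # miss: go back to the origin server
        self.cache[segment_id] = data
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)        # evict the least recently used segment
        return data

    def hit_ratio(self) -> float:
        return self.hits / self.requests if self.requests else 0.0


edge = EdgeServer(capacity=2, origin=lambda seg: f"segment-{seg}".encode())
for seg in [1, 2, 1, 3, 1, 2]:
    edge.get(seg)
print(f"local cache hit ratio: {edge.hit_ratio():.2f}")  # local cache hit ratio: 0.33
```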

5. DDN

Algorithm for DDN

Input: data items d_i and a set R of video-streaming resources r (Modified Best Fit Decreasing)

Output: consolidated data and GUI placement for power management

1. Let r* denote the split
2. for each VM v and resource r ∈ R do
3. Compute the power function value of resource r_i
4. if r belongs to the client then
5. if VM v belongs to the server then
6. do
7. Split data d_i and GUI_i
8. if VmList is not empty then
9. Sort VmList in decreasing order of energy utilization
10. for each VM in VmList
11. do
12. minPower ← MAX
13. allocatedClient ← GUI
14. power ← estimatePower(client, VM); if power < minPower then
15. allocatedServer ← data
16. end if
17. end for
18. end if
19. end if
20. end if
21. Compute the value of the cost function
22. for consolidating d_i and GUI_i
23. end for
24. return Energy consumption.
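Steps 8 to 17 follow the pattern of the Modified Best Fit Decreasing (MBFD) heuristic for power-aware placement: sort the items by demand, then give each one to the host whose power would rise the least. The sketch below applies that pattern to the data part of each request while the GUI part stays on the client. The `Server`/`Request` classes, the capacities and the linear power model are illustrative assumptions, not the authors' implementation.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    capacity: float        # processing capacity, arbitrary units
    p_min: float           # power at minimal active load (W)
    p_max: float           # power at full load (W)
    load: float = 0.0

    def power(self, load: float) -> float:
        """Assumed linear power model: p_min + (p_max - p_min) * utilization."""
        return self.p_min + (self.p_max - self.p_min) * load / self.capacity


@dataclass
class Request:
    name: str
    data_demand: float     # server-side load of the request's data part


def mbfd_place(requests: list[Request], servers: list[Server]) -> dict[str, tuple[str, str]]:
    """Largest data parts first; each goes to the server whose power rises least.

    The GUI part of every request stays on the client."""
    placement: dict[str, tuple[str, str]] = {}
    for req in sorted(requests, key=lambda r: r.data_demand, reverse=True):
        min_power, chosen = float("inf"), None          # minPower <- MAX
        for srv in servers:
            if srv.load + req.data_demand > srv.capacity:
                continue                                # not enough spare capacity
            increase = srv.power(srv.load + req.data_demand) - srv.power(srv.load)
            if increase < min_power:
                min_power, chosen = increase, srv
        if chosen is None:
            raise RuntimeError(f"no server has room for {req.name}")
        chosen.load += req.data_demand
        placement[req.name] = (chosen.name, "client")   # (data on server, GUI on client)
    return placement


servers = [Server("A", 100, 70, 250), Server("B", 100, 90, 200)]
reqs = [Request("r1", 60), Request("r2", 50), Request("r3", 30)]
print(mbfd_place(reqs, servers))
# {'r1': ('B', 'client'), 'r2': ('A', 'client'), 'r3': ('B', 'client')}
```

Sorting in decreasing order places the hardest-to-fit items while the most capacity is still free, which is the usual rationale for the "decreasing" variant of best fit.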

6. Simulation and Result

6.1. Power Consumption

Search engines' initial pages are generally simple pages with a lot of white space, which consumes more power (in watts). A mostly white screen produces more brightness and draws more power than a black screen. The simulation results for power consumption show better performance (as a percentage) than the existing system; in particular, the simulator separately evaluates DDN power consumption against simulation time, DDN power consumption against number of nodes, DDN startup delay against simulation time, and DDN startup delay against number of nodes. Power consumption is therefore an increasingly challenging issue in recent wireless networks, and it is considered here mainly in terms of black and white screens, measured in watts. Many years ago, energy-efficient computers came into use in offices, businesses, hotels, institutions, etc. Such personal computers consume very little power (about one third of a light bulb). These devices may run a limited operating system (Linux, with a modest processor and a few gigabytes of RAM) and have no moving parts or fan.

We compare the performance of DDN with the state-of-the-art solution LEACH and with Chen et al. (1998). DDN and LEACH are deployed in a wireless network with the settings described below, and both solutions are modeled and implemented in NS-2. The DDN server stores a number of files, and the length of each file is set to a minimal graphical value. The initial target location and speed of each of the seventy-some hundred mobile nodes are assigned randomly. When mobile nodes arrive at their assigned target location, they continue to move according to a newly assigned target location and speed. We generate information for 70 nodes (including name and introduction) and 10,000 playback logs (including played ID and time); this information and the 10,000 playback logs are used to calculate the access probabilities between nodes. More than 100 mobile nodes join the system following a distribution and play content according to 300 generated logs, where the popularity of the played content follows the distribution, and 70 nodes play 3 files during the simulation time.

When a node finishes playback, it leaves the system. In DDN, the threshold is set to 0.5 - 6. Before the simulation starts, the chain-based tree structure of DDN is built and the logical relationships between nodes are defined. When mobile nodes join the system, they form communities corresponding to the played node.

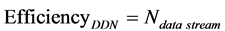

6.2. Performance Evaluation

The performance of DDN is compared with that of LEACH in terms of power consumption, startup delay, packet loss rate and simulation time. As Figure 4(a) shows, the curves for DDN and LEACH both increase quickly over the whole simulation time. The increment and peak value (83.8%) of DDN are larger than those of LEACH. Figure 4(b) likewise shows an increasing trend for the three curves as the number of nodes grows. The red LEACH curve rises quickly while nodes join the system, but it also fluctuates noticeably. The DDN curve stays above that of LEACH, with a larger increment and peak value.

![]()

Figure 4. Simulation results. (a) DDN Power consumption against simulation time; (b) DDN Power consumption against number of nodes; (c) DDN Startup delay against simulation time; (d) DDN Startup delay against number of nodes.

In DDN, nodes with long online times are grouped into an AVL tree, and other nodes that store the same content as a node in the tree form a chain attached to that tree node. At the start of the simulation, the nodes that joined the system first form the AVL tree and obtain resources from the media server. As the number of nodes increases, relatively plentiful resources become available to new members. Changes in user interest make resource demand uncertain; that is, some nodes still cannot obtain the requested resources from the P2P network and receive data only from the server. Therefore, DDN groups the nodes into a chain-based tree structure and enables them to form communities corresponding to the played nodes. Moreover, the nodes pre-fetch content of interest into their local buffers and use the pre-fetched content to serve other nodes; that is, pre-fetching increases the resources available in the P2P network.
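The chain-based tree described above can be sketched as an AVL tree keyed by content ID, where each tree node is anchored by a long-online peer and carries a chain of other peers that hold the same content. This is an illustrative data structure under those assumptions; the keying, the function names (`add_tree_peer`, `find`, `attach_to_chain`) and the rule that a peer with no matching tree node falls back to the media server are hypothetical, not taken from the DDN implementation.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TreeNode:
    content_id: int                                   # key: content held by the anchor peer
    peer: str                                         # long-online peer anchoring this node
    chain: list[str] = field(default_factory=list)    # other peers holding the same content
    left: Optional[TreeNode] = None
    right: Optional[TreeNode] = None
    height: int = 1


def _h(n: Optional[TreeNode]) -> int:
    return n.height if n else 0


def _update(n: TreeNode) -> None:
    n.height = 1 + max(_h(n.left), _h(n.right))


def _rotate_right(y: TreeNode) -> TreeNode:
    x = y.left
    y.left, x.right = x.right, y
    _update(y); _update(x)
    return x


def _rotate_left(x: TreeNode) -> TreeNode:
    y = x.right
    x.right, y.left = y.left, x
    _update(x); _update(y)
    return y


def add_tree_peer(root: Optional[TreeNode], content_id: int, peer: str) -> TreeNode:
    """AVL insert of a long-online peer; a duplicate content ID joins that node's chain."""
    if root is None:
        return TreeNode(content_id, peer)
    if content_id < root.content_id:
        root.left = add_tree_peer(root.left, content_id, peer)
    elif content_id > root.content_id:
        root.right = add_tree_peer(root.right, content_id, peer)
    else:
        root.chain.append(peer)
        return root
    _update(root)
    balance = _h(root.left) - _h(root.right)
    if balance > 1:                                   # left-heavy
        if content_id > root.left.content_id:         # left-right case
            root.left = _rotate_left(root.left)
        return _rotate_right(root)
    if balance < -1:                                  # right-heavy
        if content_id < root.right.content_id:        # right-left case
            root.right = _rotate_right(root.right)
        return _rotate_left(root)
    return root


def find(root: Optional[TreeNode], content_id: int) -> Optional[TreeNode]:
    node = root
    while node is not None and node.content_id != content_id:
        node = node.left if content_id < node.content_id else node.right
    return node


def attach_to_chain(root: Optional[TreeNode], content_id: int, peer: str) -> bool:
    """Attach a short-lived peer to the chain for its content; False means use the server."""
    node = find(root, content_id)
    if node is None:
        return False
    node.chain.append(peer)
    return True
```

Because the tree stays balanced, locating the chain for a given content ID takes O(log n) steps even when peers join in sorted order.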

Startup Delay: the startup delay is defined as the difference between the time a request message is sent and the time the first data is received. Figure 4 shows the mean startup delay over time intervals of 2 s and during the process of nodes joining the system.
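Following the definition above, the mean startup delay per 2 s interval can be computed from per-request timestamps. In this sketch, the event format (pairs of request-sent and first-data-received times in seconds) and binning by the time the request was sent are assumptions; the paper does not state how requests were assigned to intervals.

```python
from collections import defaultdict


def mean_startup_delay_per_interval(events, interval_s: float = 2.0) -> dict[float, float]:
    """events: (request_sent_s, first_data_received_s) pairs.

    Returns {interval start (s): mean startup delay (s) of requests sent in that interval}."""
    buckets: dict[float, list[float]] = defaultdict(list)
    for sent, first_data in events:
        if first_data < sent:
            raise ValueError("first data cannot arrive before the request is sent")
        start = (sent // interval_s) * interval_s      # interval the request falls into
        buckets[start].append(first_data - sent)       # startup delay of this request
    return {start: sum(d) / len(d) for start, d in sorted(buckets.items())}


# Hypothetical trace of four requests.
trace = [(0.0, 0.5), (1.0, 1.25), (2.5, 3.5), (3.0, 3.5)]
print(mean_startup_delay_per_interval(trace))  # {0.0: 0.375, 2.0: 0.75}
```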

Maintenance Cost: the maintenance cost is defined in terms of the control messages (for joining nodes, leaving nodes and lookups) in the P2P network. In addition, the maintenance cost of tree traversal (in-order, pre-order and post-order) gives better results than the chain process, since traversing the chain costs more than traversing the tree. Figures 4(a)-(d) show that the two curves corresponding to DDN and LEACH go through three fluctuation phases during the whole simulation time. The blue DDN curve fluctuates slightly from t = 2 s to t = 20 s, then increases quickly and falls fast from t = 2 s to t = 4 s. The red LEACH curve increases slightly and decreases quickly in some intervals, while keeping an overall fast-rising trend.
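The claim that tree-based maintenance is cheaper than chain-based maintenance can be illustrated by counting lookup messages. The sketch assumes one control message per peer visited: a chain is walked peer by peer, while a balanced search tree (simulated here by binary search over sorted peer IDs) is descended one level per message. The function names and the 1,024-peer population are hypothetical.

```python
def chain_lookup_messages(chain: list[int], target: int) -> int:
    """Walk a chain peer by peer; one control message per peer visited."""
    for hops, peer in enumerate(chain, start=1):
        if peer == target:
            return hops
    return len(chain)                     # target absent: every peer was asked


def tree_lookup_messages(sorted_peers: list[int], target: int) -> int:
    """Descend a balanced search tree (simulated by binary search); one message per level."""
    lo, hi, hops = 0, len(sorted_peers) - 1, 0
    while lo <= hi:
        mid = (lo + hi) // 2
        hops += 1
        if sorted_peers[mid] == target:
            return hops
        if sorted_peers[mid] < target:
            lo = mid + 1
        else:
            hi = mid - 1
    return hops


peers = list(range(1024))                 # 1,024 hypothetical peer IDs
print(chain_lookup_messages(peers, 1023), tree_lookup_messages(peers, 1023))  # 1024 11
```

The worst case grows linearly for the chain but only logarithmically for the tree, which is consistent with the lower maintenance cost reported for tree traversal.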

7. Conclusion

In this paper, we discussed how to define the power efficiency of a video DDN computing framework and its key impacting factors, especially the local cache hit ratio and power proportionality. The DDN algorithm splits the data and stores it in a data center, and the data center provides the data (on a proper request from the user) between the server and client sides through DDN. In particular, a thin-client infrastructure runs applications only on the server side. Keystrokes and mouse clicks are sent over the network to the server, which processes them and sends back the result (the screen). Clients can be low-powered PCs or thin-client devices, which have no HDD, FDD, CD-ROM drive or cooling fan, and very low processing power. These clients now operate as thin clients within the DDN framework. The simulation graphs show the evaluated DDN power consumption against simulation time, DDN power consumption against number of nodes, DDN startup delay against simulation time, and DDN startup delay against number of nodes.