Modeling Ocean Chlorophyll Distributions by Penalizing the Blending Technique ()

1. Introduction

A detailed study of the ocean environment and its constituent elements are of utmost importance in guiding decision-makers on policies regarding marine activities such as fishing and their consequences for human life and society as a whole. In the ocean food chain, phytoplankton, which are found in the upper layer of the ocean, are of extreme importance. Indeed, aquatic life and production revolve about the distribution and biomass of these unicellular algae. Thus, to better understand the ocean food chain, it is necessary to track their existence and monitor their population distribution in the ocean environment. To measure their population by cell counts is very difficult, because of their resemblance to other non-algae carbon rich particles. An alternative method of doing this is in terms of their photosynthetic pigment content, chlorophyll, which is endemic across all taxonomic groups of algae [1]. In fact, an appealing method of estimating primary productivity in the ocean is determined by the concentration of ocean chlorophyll [2] and also emphasized by [3]. Therefore, to better monitor and predict the abundance of this phytoplankton, it is important that the distribution of chlorophyll concentration in this environment be determined as accurately as possible. The blending technique described by [4] was successfully used to analyze sea surface temperature [5]. The pioneers of the use of this technique in the calibration of ocean chlorophyll expressed the need for further work to be done in order to improve ocean chlorophyll predictions in areas where observations could not be obtained by ship and buoy [6]. One problem faced when using the technique in ocean chlorophyll calibration is distortion of the blended field as one approaches the coastal land. This distortion is due to the sparseness of data obtained by ship and buoy (in situ) and the noisiness of the satellite field [7]. These factors have been the main handicap to the smooth calibration of ocean chlorophyll estimates from the satellite observations. The penalized regression splines are a technique that could be used to model noisy data [8]. The use of this statistical technique in the calibration of ocean chlorophyll was also suggested by [1].

The objective of this article, therefore, is to demonstrate how the principles of penalized regression could be applied to the blending process in order to obtain better estimate of ocean chlorophyll from the satellite data field. The approach would mainly address the noisiness of the data fields by introducing a penalty on the differences between their observations at positions where both fields have values. The belief is that, by penalizing the differences between the satellite and the in situ fields, the satellite will become closer to the in situ field and can thus be used to sufficiently estimate ocean chlorophyll values at positions where the in situ field could not provide values. Since the process of penalization involves smoothing, the efficiency of the technique will depend on the choices of the smoothing parameters.

Inspiration to this was drawn from the interpolation equation

(1)

(1)

found in Onabid (2011) in the section dealing with the proof as to why results from the corrector factor and the smooth in-fill methods should coincide. From this equation, the term of interest is

which is the sum of the solution to the partial differential equation obtained at each boundary point k where there is a difference between the satellite and in situ value. In order to penalize these differences, the interpolation equation (1) had to be represented using basis functions. Consider the equation

where  is actually the solution to

is actually the solution to

(3)

(3)

subject to the boundary conditions

.

.

This equation (2) can be re-written with each of the  separately as

separately as

(4)

(4)

where  is set to the difference between the in situ and satellite values at boundary point k and Δk(x; y) representing the basis function is the solution to

is set to the difference between the in situ and satellite values at boundary point k and Δk(x; y) representing the basis function is the solution to

(5)

(5)

with external boundary points set to zero and the internal boundary points set to zero everywhere except at the kth position where it is set to 1.0, that is the knot of the basis.

What this means is that, for each internal boundary point (knot), the blending process is performed to estimate the entire blended field with that particular boundary point acting as the only boundary point for the process. During the process, the value of this boundary point equals 1.0 and the resulting field is the basis for this knot. The blended field corresponding to this particular knot is obtained by multiplying the original knot value with its basis. Blended fields obtained from each of the knots are summed up. This sum is then added to the satellite field to obtain the final blended field which we call the basis blend.

2. Penalizing the Blending Process

For the penalized regression spline to be applied, it was necessary to represent the term of interest in the blending process as a regression equation.

Representing Blending as a Regression Equation

Considering the equation (4) which is the interpolation form of the blending process represented using basis functions, also consider the fact that the objective is to control the differences between the satellite and the in situ fields, it is obvious that focus here should be on the term

from where the  ss′ could be estimated by penalized least squares in order to minimize the effect of these differences and consequently maximize the use of the satellite field as estimate to ocean chlorophyll at points where in situ could not provide observations Let

ss′ could be estimated by penalized least squares in order to minimize the effect of these differences and consequently maximize the use of the satellite field as estimate to ocean chlorophyll at points where in situ could not provide observations Let

be calculated for each point it K, where satellite and in situ have observations. This can be written as a regression equation of the form,

(6)

(6)

where the  s′ are unknown parameters to be estimated and

s′ are unknown parameters to be estimated and  the error term. This expression is equal to

the error term. This expression is equal to

Thus if Zk is expressed using the basis space, one obtains this model;

Fitting this model by least squares will simply result in the interpolation scheme since there is exactly one parameter per datum, thus nonparametric techniques were then explored. The thin plate regression spline was then used to introduce a penalty to this blending regression equation.

3. Penalizing the Blending Regression Equation

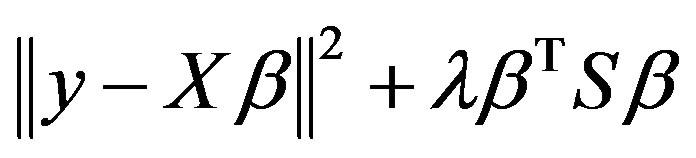

From Equation (6), the control of the smoothness of the differences can be achieved by either altering the basis dimension, that is changing the number of selected knots or keeping the basis dimension fixed and then adding a penalty term to the least squares objective. The later was used. Therefore the penalized least squares objective will be to minimize

(7)

(7)

where  is a penalty function which penalizes model wiggliness while model smoothness is controlled by the smoothing parameter

is a penalty function which penalizes model wiggliness while model smoothness is controlled by the smoothing parameter , as described by [9]. As a first step in estimating the penalized least squares objective, the simple penalized least squares technique of ridge regression was used. In this process, the intention is to penalize each of the parameters separately by introducing a penalty to each of the estimated parameters. Following this method, the penalized least squares objective will be to minimize

, as described by [9]. As a first step in estimating the penalized least squares objective, the simple penalized least squares technique of ridge regression was used. In this process, the intention is to penalize each of the parameters separately by introducing a penalty to each of the estimated parameters. Following this method, the penalized least squares objective will be to minimize

(8)

(8)

with respect to  s′: The penalty is represented by the term

s′: The penalty is represented by the term  with

with  being the smoothing parameter to control the trade off between model fit and model smoothness. Thus the problem of estimating the degree of smoothness of the model is now the problem of estimating the smoothing parameter

being the smoothing parameter to control the trade off between model fit and model smoothness. Thus the problem of estimating the degree of smoothness of the model is now the problem of estimating the smoothing parameter .

.

Assuming that the smoothing parameter is given, how then can the  s′ be estimated in this penalized least squares objective?

s′ be estimated in this penalized least squares objective?

From equation (8), the term Δk(xk; yk) reduces to a n×n identity matrix. Now, define an augmented Z, say Z; as  (with n zeroes) which can also be augmented directly in the objective.

(with n zeroes) which can also be augmented directly in the objective.

When this is done, equation (8) could now be written as

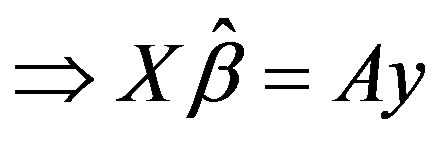

From here,  can be calculated as follows:

can be calculated as follows:

with ; this implies that,

; this implies that,

3.1. Choosing How Much to Smooth

This refers to the selection of the smoothing parameter . This must be done with care such that the selected value should be suitable, so much so that if the true smooth function is f the estimated smooth function

. This must be done with care such that the selected value should be suitable, so much so that if the true smooth function is f the estimated smooth function , should be as close as possible to it. The reason being if

, should be as close as possible to it. The reason being if  is too high, the data will be over-smoothed and if it is too low, the data will be under-smoothed hence the resulting estimate will not be close to the true function. The aim as described by [9] will be to select a

is too high, the data will be over-smoothed and if it is too low, the data will be under-smoothed hence the resulting estimate will not be close to the true function. The aim as described by [9] will be to select a  which will minimize the difference between

which will minimize the difference between  and f that is to say if M is the difference, then

and f that is to say if M is the difference, then  should minimize

should minimize

This could have been easier if the true values for f existed already. Because this is not the case, the problem was approached by deriving estimates of M plus some variation. This was achieved by making use of the ordinary cross validation (OCV) technique. In this technique, a model is fitted to the rest of the data, when a datum is left out. The squared difference between the datum and its predicted value from the fitted model is calculated. This is done for all the points and the mean taken over all the data. Thus the ordinary cross validation criterion is written as

where  is the estimate from the model fitted to all data except Zi. The idea of calculating V0 each time leaving out a datum has been proven not to be efficient as described by [9]. It can be shown that

is the estimate from the model fitted to all data except Zi. The idea of calculating V0 each time leaving out a datum has been proven not to be efficient as described by [9]. It can be shown that

where  is the estimate from fitting to all the data and A is the corresponding influence matrix.

is the estimate from fitting to all the data and A is the corresponding influence matrix.

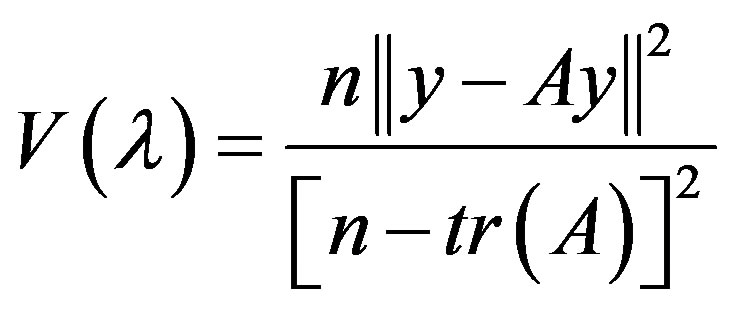

[9] Emphasizes the fact that though OCV is a reasonable way of estimating smoothing parameters, it has the drawbacks of being computationally expensive to minimize in the case of additive models where there could be many smoothing parameters and secondly it has a slightly disturbing lack of invariance. Thus in practice, the weights 1−Aii are often replaced by the mean weight tr(I − A)/n in order to arrive at the generalized cross validation (gcv) score given as

This has the computational advantage over OCV and can also be argued to have some advantages in terms of invariance. Therefore, an easy way to look for the best smoothing parameter would be to search through a sequence of ′s, each time fitting a penalized regression model with the new

′s, each time fitting a penalized regression model with the new  value and calculating the gcv score. At the end, the

value and calculating the gcv score. At the end, the  value corresponding to the lowest gcv score will be the optimal smoothing parameter.

value corresponding to the lowest gcv score will be the optimal smoothing parameter.

3.2. Calculating the gcv Score

Amongst the techniques of ridge regression, integrated least squares, integrated squared derivatives and efficient method used in computing the gcv score, only the efficient method herein described provided and better estimate for the gcv score.

Efficient Calculation of the gcv Score

The idea here is to provide a means of obtaining optimum values for the gcv score, the degree of freedom tr(A) and the smoothing parameter  which will minimize the gcv score. These will be very important since the objective is to build a model that will produce estimates in the blended field which are as close as possible to the true field. The QR decomposition described in [10] will be used because it is believed that QR is more stable than the Cholesky decomposition. This was achieved as follows.

which will minimize the gcv score. These will be very important since the objective is to build a model that will produce estimates in the blended field which are as close as possible to the true field. The QR decomposition described in [10] will be used because it is believed that QR is more stable than the Cholesky decomposition. This was achieved as follows.

The objective is to minimize

with respect to .

.

where

The corresponding gcv score for the given  is then given as

is then given as

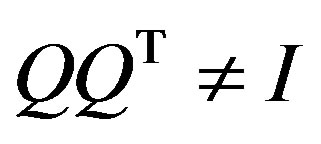

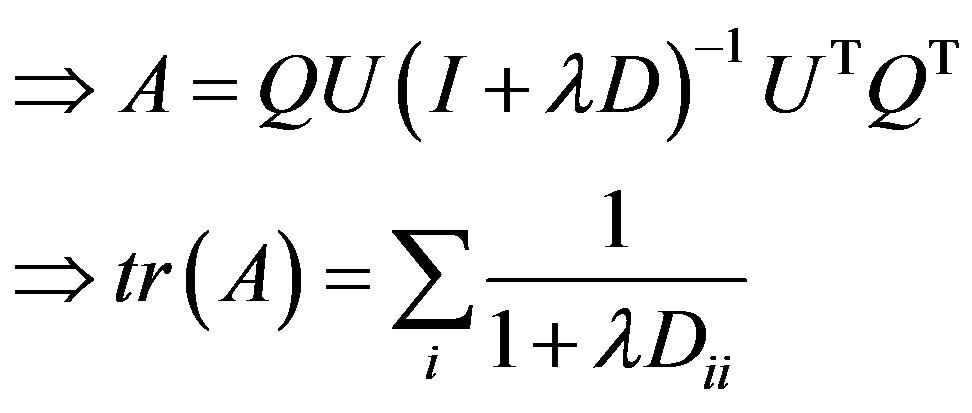

In order to calculate the efficient gcv score, let X = QR where R is the upper triangle and Q consist of the columns of an orthogonal matrix such that QTQ = I but

From an eigen-decomposition

where D is a diagonal matrix of eigen values, the columns of U are eigenvectors and U is orthogonal.

(9)

(9)

(10)

(10)

where .

.

From equations (9) and (10) it follows that  could be evaluated very cheaply for each new

could be evaluated very cheaply for each new  since the QR and eigen-decompositions are only needed once.

since the QR and eigen-decompositions are only needed once.

The smoothing parameter  corresponding to each of these lowest gcv scores were then use in fitting the penalized regression models. The results obtained are then compared to those from the other techniques.

corresponding to each of these lowest gcv scores were then use in fitting the penalized regression models. The results obtained are then compared to those from the other techniques.

4. Validating the Blended Fields Obtained from the Various Blending Methods

The strength of this method in predicting existing in situ observation was compared to that of the normal blending method. Because penalizing the blending method had to make use of the basis function, the blended field obtained from the basis function method was also compared. Since the basis function method works by the use of a basis set (knots), after the selection of the validation data set the remaining in situ observations were then used as knots for the basis function blending method. The penalized blended field was obtained by using parameters obtained from the efficient method of calculating gcv as described in Section 3.2.1. Randomly selected validation data sets each containing 175 observations from the observed in situ data for the month of May were used in a validation study. May was selected because it had the highest number of observations in the in situ field. The mean squared differences between the predicted and the observed in situ values were computed and plotted (Figure 1).

There was not so much difference between predictions from the basis and the penalized. Though most of the times the differences are visible only after the third or fourth decimal place, there are a few times where the differences appear very distinct between the two in favour of the penalized blending method. Penalized models are always expected to perform better than non penalized ones. The poor performance here could have been caused by the choice of the smoothing parameter which is being obtained in this case by cross validation.

5. Discussions

We have been able to successfully establish a procedure for implementing smoothing on the blending process by making use of corrector factor blending technique model of [7]. This was achieved by expressing the interpolation formula used by the corrector factor blending technique in a form making use of the basis function. The aim of expressing the blending process using basis functions was to pave the way to implement penalization. This was implemented by adding a penalty term to the least squares objective. This term contained the penalty function which penalizes the model and a smoothing parameter to control the smoothness of the model. The main issue here was to be able to choose the right smoothing parameter such that the estimated smooth function should be as close as possible to the true function. Cross validation technique was used to obtain the smoothing parameter. To obtain the cross validation score three techniques were used, namely ridge regression, integrated least squares and the integrated squared derivative.

Calculating the cross validation score using ridge regression failed because the final expression for calculating the score did not depend on the smoothing parameter.

Figure 1. A box plot of the mean squared differences between predicted and observed in situ values from the different blending methods.

As described by [9], this is not surprising since if a Zk is dropped from the model sum of squares term in equation (8), the only thing influencing the estimate of  would be the penalty term, which will be minimized by setting

would be the penalty term, which will be minimized by setting , whatever positive value the smoothing parameter takes. This complete decoupling will cause crossvalidation to fail. Thus, if a datum is left out, its corresponding estimate will always be zero since no other data has influence on it. This behavior occurs for any possible value of the smoothing parameter.

, whatever positive value the smoothing parameter takes. This complete decoupling will cause crossvalidation to fail. Thus, if a datum is left out, its corresponding estimate will always be zero since no other data has influence on it. This behavior occurs for any possible value of the smoothing parameter.

Making use of the cross-validation score calculated from the integrated least squares did not improve on the results in this research. This again, according to [9], is not surprising because if one considers any three equally spaced points x1, x2, x3 with corresponding f(xi) values to be ,

,  and

and . Also, if

. Also, if  then in order to minimize

then in order to minimize

one should set . This condition does not hold for the data fields used in this research since the data fields were sparse, and the missing values were replaced by pseudo zeroes, so it was not uncommon to find a set of three adjacent points with similar values. In a situation like this, [9] states that, if the middle point is omitted from the fitting, the action of the penalty will send its estimate to the other side of zero from its neighbors. Meaning that a better prediction of the omitted datum will only be possible with a high smoothing parameter and this will be closer to zero since the high smoothing parameter will tend to shrink the values of the other included points towards zero and hence the omitted point. With this, cross validation will also have the tendency to always select an estimate for the omitted points closer to zero from the model. This could have been the cause of the poor results obtained. The integrated squared derivative penalty is not expected to suffer from the same problems faced by the previous methods. This is because the action of the penalty is simply to try and flatten the smooth function around the vicinity of the omitted datum. If the smoothing parameter is large, it will increase the flattening and consequently pulls the estimate far away from the omitted datum. The penalty obtained by this technique had very little or no effect on the smoothing function hence the equality in results from the penalized and the basis function model.

. This condition does not hold for the data fields used in this research since the data fields were sparse, and the missing values were replaced by pseudo zeroes, so it was not uncommon to find a set of three adjacent points with similar values. In a situation like this, [9] states that, if the middle point is omitted from the fitting, the action of the penalty will send its estimate to the other side of zero from its neighbors. Meaning that a better prediction of the omitted datum will only be possible with a high smoothing parameter and this will be closer to zero since the high smoothing parameter will tend to shrink the values of the other included points towards zero and hence the omitted point. With this, cross validation will also have the tendency to always select an estimate for the omitted points closer to zero from the model. This could have been the cause of the poor results obtained. The integrated squared derivative penalty is not expected to suffer from the same problems faced by the previous methods. This is because the action of the penalty is simply to try and flatten the smooth function around the vicinity of the omitted datum. If the smoothing parameter is large, it will increase the flattening and consequently pulls the estimate far away from the omitted datum. The penalty obtained by this technique had very little or no effect on the smoothing function hence the equality in results from the penalized and the basis function model.

6. Conclusion

It is expected that a penalized model would be able to perform better than a non penalized model in a situation where penalization is necessary. Three techniques have been used to obtain penalty matrices in this research with the intention of improving the results from normal blending method. The penalized model was obtained by first representing the blending method by making use of the basis function which was also considered as a model on its own. Even though the results from the basis function and penalized model were relatively identical since most differences occurred at the third or fourth decimal place, it is important to know that the difference between these methods and the normal blending method is quite alarming (Figure 1) and therefore should be encouraged especially if more data could be obtained from ship and buoy. With the emergence of this result, it is hoped that most of the analysis on primary productivity and management in the ocean environment will be greatly affected, since chlorophyll is one of the most important components in the formation of the ocean life cycle.

Future Work

The failure of the penalized blending regression models to perform better than the basis function model could have been because the right penalty was not obtained. Therefore, more work could be done towards obtaining other penalties. Maybe, an integrated squared second derivative could be tried or one could try a combination of the first and second derivatives (double penalization). To enable the blending process to be very close to reality, the possibility of extending it to three dimensions could be looked into.