Error Estimation and Assessment of an Approximation in a Wavelet Collocation Method ()

1. Introduction

In the wavelet theory a scaling function  is used, which has properties that are defined in the MSA (multi scale analysis). Through the MSA we know, we can construct an orthonormal basis of a closed subspace

is used, which has properties that are defined in the MSA (multi scale analysis). Through the MSA we know, we can construct an orthonormal basis of a closed subspace , where

, where  belongs to a sequence of subspaces with the following property:

belongs to a sequence of subspaces with the following property:

is an orthonormal basis of

is an orthonormal basis of  with

with

.

.

We use the following approximation function:

, with

, with .

.

and

and  depend on the approximation interval

depend on the approximation interval .

.

Now we can approximate the solution of an initial value problem  and

and  by minimizing the following function (

by minimizing the following function ( is the Euklid norm)

is the Euklid norm)

(1)

(1)

For  we get an equivalent problem:

we get an equivalent problem:

, with

, with  and

and .

.

The advantage of calculating  by minimizing

by minimizing  is that we can choose more collocation points

is that we can choose more collocation points  as shown in the following example. In that case we apply the least squares method to calculate

as shown in the following example. In that case we apply the least squares method to calculate . Many simulations had shown that if

. Many simulations had shown that if  was very small then the approximation yj would be good. An even better criterion for a good approximation

was very small then the approximation yj would be good. An even better criterion for a good approximation  is

is  (see (3)). Moreover, the equations have been ill-conditioned in several examples.

(see (3)). Moreover, the equations have been ill-conditioned in several examples.

Analogously we could use boundary conditions instead of the initial conditions. This method can be even used analogously for PDEs, ODEs of higher order or DAEs, which have the form

If  is an ODE system, then we use the approximation function:

is an ODE system, then we use the approximation function:

For the i-th component of the solution y, we use the notation  as usual. We use for the i-th component of

as usual. We use for the i-th component of  the notation

the notation , in order not to lead to a confusion with the approximation

, in order not to lead to a confusion with the approximation  out of

out of , so it will be always distinguished whether the approximation

, so it will be always distinguished whether the approximation  or the i-th component of

or the i-th component of  is used.

is used.

We use the collocation points , with

, with  and

and

(2)

(2)

Simulations have shown that even with  we get good approximations.

we get good approximations.

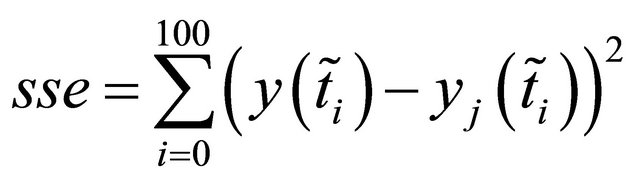

For the assessment of the approximation we use the value , with

, with

(3)

(3)

,

,  and

and  is an integer. For big

is an integer. For big  we should weight

we should weight  with

with .

.

Remarks 1:

1) We get

for , because of:

, because of:

Analogously for smaller .

.

2) The sums in (1) and (3) could start with , too.

, too.

3)  in (1) could also be used as a constraint if the initial value should be fulfilled. But in all good approximations,

in (1) could also be used as a constraint if the initial value should be fulfilled. But in all good approximations,  was very small.

was very small.

In the examples we use the Shannon wavelet. Although it has no compact support and no high order, in many examples and simulations we got a much better approximation than using other wavelets (f.e. Daubechies wavelets of order 5 to 8), even with a small n. The Meyer wavelet yields good results, too.

We even get a good extrapolation outside the interval .

.

Example 1:

1) We use the following ODE

The exact solution is .

.

We approximated the solution on the interval  and chose

and chose , like in all examples.

, like in all examples.

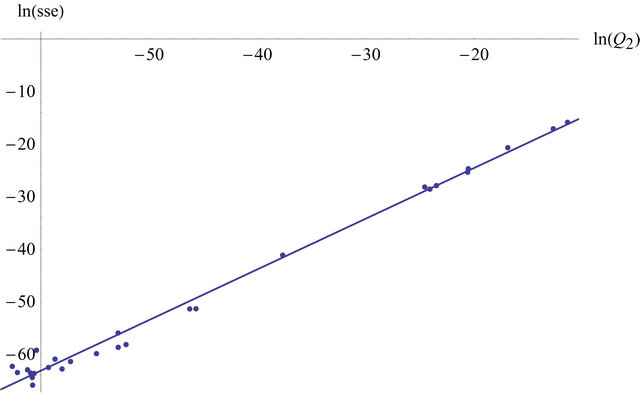

With  we could see in all our simulations, if the approximation was good. We got a linear relationship between

we could see in all our simulations, if the approximation was good. We got a linear relationship between  and

and . In Figure 1 we see the graph of a linear regression (with an R squared of 0.991196) of

. In Figure 1 we see the graph of a linear regression (with an R squared of 0.991196) of  against

against  with the points

with the points , which have been calculated with different

, which have been calculated with different and

and  with the ODE and I of the example 1.

with the ODE and I of the example 1.

is the mean squared error

is the mean squared error

with

with .

.

Now we see a regression table (Table 1) of  on

on , which shows a linear dependency in our example and the graph of the linear regression function.

, which shows a linear dependency in our example and the graph of the linear regression function.

Here is a graph of the regression function and the graphs of the functions yi and  for j = 0, kmax = 15 and

for j = 0, kmax = 15 and  on the approximation interval

on the approximation interval  (see Figures 2 and 3) and on the interval

(see Figures 2 and 3) and on the interval  (see Figures 4 and 5). In Figures 4 and 5 we see that we get even a good extrapolation.

(see Figures 4 and 5). In Figures 4 and 5 we see that we get even a good extrapolation.

Figure 1. Linear regression plot of  against

against .

.

Table 1. Linear regression table of  on

on .

.

2. Error Estimation and Assessment of the Approximation

In the example we used the Shannon wavelet. For this wavelet we have additional information about the error in the Fourier space from the Shannon theorem. For a good approximation with a small j the behavior of  with growing

with growing  is important, because (if yi is an orthogonal projection from y on Vj and

is important, because (if yi is an orthogonal projection from y on Vj and )

)

With the Parseval theorem we get

so

With the Riemann-Lebesgue theorem we get:

For the approximation error the decay behaviour of the detail coefficients  is important:

is important:

On the other side: we have got in many simulations with the Shannon wavelet better approximations (with the described collocation method) than with higher order wavelets.

Remarks 2:

1) For a theoretical multi resolution analysis we could consider  instead of

instead of , because when

, because when  is in

is in  then

then  is in

is in , if we need an approximation on

, if we need an approximation on . Here

. Here  is the indicator function of the interval

is the indicator function of the interval .

.

2) For interpolating wavelets there are a number of publications with error estimates and also for the approximation of the solutions of initial value problems and boundary value problems (for ordinary and partial differential equations) see [1,2], as well as to the sinc collocation method (see [3-5]) with special collocation points (“sinc grid points”, see [5]).

Theorem 1 (for the decay behaviour):

The wavelet  has the order

has the order ,

,  with

with  and

and  is Lipschitz continuous. Then exists a

is Lipschitz continuous. Then exists a  independent from

independent from  with

with

is the wavelet transform of

is the wavelet transform of  with

with

A proof is in [6]. So we get for the detail coefficients an appraisal because

and so

Now we saw that the decay of the detail coefficients depends on the order of a wavelet.

From the Gilbert-Strang Theory (see [7]) we know additionally an upper bound of the approximation error in dependency of the order : if the wavelet is of order

: if the wavelet is of order  then the approximation error has the order

then the approximation error has the order  if

if  and (if

and (if  is an orthogonal projection from

is an orthogonal projection from  on

on  and

and )

)

.

.

If a wavelet is of order  the scaling function

the scaling function  even has an interpolation property, because then we can construct the functions

even has an interpolation property, because then we can construct the functions  with

with  over a linear combination of

over a linear combination of  (see [7]). That’s also a property of the so called interpolating wavelets. For interpolating wavelets we find error estimations in [8] and [9].

(see [7]). That’s also a property of the so called interpolating wavelets. For interpolating wavelets we find error estimations in [8] and [9].

Remarks 3:

1) Error estimations for the sinc collocation with a transformation can be found in [4] and [5].

2) Although the approximation error is depended on the order of a wavelet in many simulations the Shannon wavelet led to much better approximations than Daubechies wavelets of higher order, if the approximation function  was calculated by minimizing the sum of squares of residuals Q. Even when comparing the extrapolations the Shannon wavelet was significantly better.

was calculated by minimizing the sum of squares of residuals Q. Even when comparing the extrapolations the Shannon wavelet was significantly better.

The reason is, that we do not calculate an orthogonal projection on  like in the appraisal above and the function y is in general case not quadratic integrabel on R (we consider only a compact interval I).

like in the appraisal above and the function y is in general case not quadratic integrabel on R (we consider only a compact interval I).

The following appraisal takes account of the fact that we calculate the approximation function by the minimization of Q. We first need a theorem, which follows from the Gronwall-Lemma.

Theorem 2:

Assumptions: we have a initial value problem  with

with  and

and

(4)

(4)

and

(5)

(5)

Then we get for :

:

For a proof see [10].

Theorem 3:

With the assumptions from Theorem 2 we get (if  ):

):

So we get the follow inequality for , which is used in the example 2:

, which is used in the example 2:

(6)

(6)

depends on

depends on  and

and  only on the initial value problem and the collocation points. We write

only on the initial value problem and the collocation points. We write  instead of

instead of  because in example 2 we set

because in example 2 we set  on the x-axes so we have a comparison with example 1 where we set

on the x-axes so we have a comparison with example 1 where we set  on the x-axes.

on the x-axes.

Remark 4:

We get with

If additionally  for one (or more)

for one (or more)  we get:

we get:

This is analogously right for  instead if

instead if  with

with

and  and an integer

and an integer . Qa is an upper bound for

. Qa is an upper bound for . With

. With  we could assess in all simulations the quality of an approximation and in linear regressions from

we could assess in all simulations the quality of an approximation and in linear regressions from  on

on  we got in almost all simulations a

we got in almost all simulations a  (R squared) greater than 0.99 (see next example). Only if all approximations have been bad, then

(R squared) greater than 0.99 (see next example). Only if all approximations have been bad, then  was less than 0.99 (but we still have a dependency). If

was less than 0.99 (but we still have a dependency). If  is the exact solution, then

is the exact solution, then . Because we get not only a approximation with points (we get a approximation function

. Because we get not only a approximation with points (we get a approximation function ) we must not calculate a second minimization for the calculation of

) we must not calculate a second minimization for the calculation of .

.

will be in general (for

will be in general (for ) less than M, because we use the collocations points ti and so

) less than M, because we use the collocations points ti and so  is very small at these points (see the next graphic).

is very small at these points (see the next graphic).  was in many good simulations less than 10−16.

was in many good simulations less than 10−16.

In many simulation  is relative big between to collocation points (or at the edge of I if we start with i = 1 in the sum (1)).

is relative big between to collocation points (or at the edge of I if we start with i = 1 in the sum (1)).

In Figure 6 we see the graph of

in example 1 for  and

and . Here a too small

. Here a too small  results in a very bad approximation.

results in a very bad approximation.

We see that  could be very small with a too small m, but

could be very small with a too small m, but  is very big here. In the graph we see that d is very small at the collocation points

is very big here. In the graph we see that d is very small at the collocation points  but between them d is very big. That’s the reason because we could identify with

but between them d is very big. That’s the reason because we could identify with  a worse approximation in any our simulations. On the other hand a big

a worse approximation in any our simulations. On the other hand a big  is an indicative of a too small j.

is an indicative of a too small j.

So we can approximate M here with the maximum of  at the points

at the points  with

with  like we do it in the next example.

like we do it in the next example.

Now we want to apply the result from theorem 2. Furthermore we will see a correlation between an approximation of  and

and  in this example like we saw it before between

in this example like we saw it before between  and

and .

.

Example 2:

We use the initial value problem and the approximations with the different parameters ,

,  and m of example 1. If

and m of example 1. If  than follows from theorem 2 (under the assumptions from this theorem):

than follows from theorem 2 (under the assumptions from this theorem):

Here we get (see (6)):

We now apply a linear regression of  on

on  with the approximation

with the approximation

from M2 with  (the points from

(the points from  beginning with

beginning with ). sse and

). sse and  have been calculated with the points

have been calculated with the points  (and the summation indices

(and the summation indices ).

).

Here is the regression table (Table 2) (with a R squared of 0.986877).

In Figure 7 we see a graph from  (in red), the graph of the regression function (in blue) and the regression points

(in red), the graph of the regression function (in blue) and the regression points .

.  was not considered (this means we set

was not considered (this means we set ) because it was very small.

) because it was very small.

Here are the graphs of  with

with ,

,

and  in Figure 8. In most simulations

in Figure 8. In most simulations  was less than 10−16.

was less than 10−16.

Generally we can use

,

,

and

and  (with an integer

(with an integer ) for an approximation of

) for an approximation of . Here we know the following relation:

. Here we know the following relation:

3. Conclusions

We defined a variable  with which you can evaluate an approximation. In many simulations and in the examples of this article we saw that we get good results with

with which you can evaluate an approximation. In many simulations and in the examples of this article we saw that we get good results with . A linear relationship between

. A linear relationship between  and

and  was shown in example 1. It is also shown that the approximation can be used to extrapolate outside the approximation interval.

was shown in example 1. It is also shown that the approximation can be used to extrapolate outside the approximation interval.

Using Theorem 2 we derive an estimate (see theorem 3). Then it is shown how to detect a too great step size using . In example 2 we show that the deduced estimate represents a straight line (in the coordinate system with

. In example 2 we show that the deduced estimate represents a straight line (in the coordinate system with  on the x-axes and

on the x-axes and  on the yaxes), which runs approximately parallel to the regression line (it is approximately parallel because the regression function is an estimation, theoretically it must be parallel because it cannot cross the upper bound line). In a research project we got analogous results in many

on the yaxes), which runs approximately parallel to the regression line (it is approximately parallel because the regression function is an estimation, theoretically it must be parallel because it cannot cross the upper bound line). In a research project we got analogous results in many

Figure 7. Linear regression plot of  against

against .

.

simulations, even with systems and higher order odes.

It is shown that  (the size of the estimate) can be approximated via

(the size of the estimate) can be approximated via , and this approximation has

, and this approximation has  as upper bound. The regression of the points

as upper bound. The regression of the points

returns a slightly larger

returns a slightly larger  than the regression with the points

than the regression with the points . As a consequence, Q2 is well suited to assess, especially as you can estimate the approximation of

. As a consequence, Q2 is well suited to assess, especially as you can estimate the approximation of  with Q2 and in Q2 more information is included. Moreover we can compare Q2 with

with Q2 and in Q2 more information is included. Moreover we can compare Q2 with  to assess the approximation (see Figure 6).

to assess the approximation (see Figure 6).