A Class of Algorithms for Solving LP Problems by Prioritizing the Constraints ()

1. Introduction

A linear programming (LP) problem can be described mathematically by the following general form:

(1)

where z the given objective function,

,

,

and

,

,

,

and

[1] .

While formulating real-world LP problems, extra constraints usually are added besides the necessary ones, in order to avoid losing some useful information [2] . As a result, a plethora of redundant constraints exist, and not all of the constraints contribute to the detection of an optimal solution. The issue that must be faced is to avoid the usage of redundant information existing in the constraints. During the last six decades, due to the important role of LP problems both in theory and practice, attempts have been made by many researchers, regarding to either eliminate the redundant constraints or the detection of the binding ones in order to contribute to the reliability of the utilized algorithm to solve the problem and to save resources and computational time.

The importance of focusing on binding constraints in order to achieve the above objectives is of major importance in real-world LP problems, where resources are limited and constraints may vary. Identifying the binding constraints allows us to optimize the decisions by making more efficient use of available resources. An example of a real-world LP problem considers the optimal allocation of resources among different products in order to maximize a company’s overall profit, where the variables correspond to the quantities produced of each product, while constraints refer to the available resources, such as available workers, materials and equipment. To achieve maximum profit, the optimal allocation of these resources to each product must be found, taking into account the quantities of labour, materials and equipment required to produce each product.

In the present paper, the LP problem is dealt with a proposed criterion, named proximity criterion. The innovative idea is based on [3] , which is referred to two-dimensional LP problems. This criterion focuses on ranking the constraints according to the greater possibility to be binding by making use of a simple comparison process between the coefficients of the objective function and the coefficients of the constraints. The key idea is to take into account the proximity between the coefficients of the objective function and the coefficients of the constraints for every variable of the given LP problem. The direct use of the values of the coefficients of the constraints and of the objective function is avoided and the proposed methods do not be affected by the accuracy of the arithmetic operations. The constraints having their coefficients as close as possible to the coefficients of the objective function have the greatest possibility of being the binding ones.

The proposed technique, named proximity technique, uses a matrix, named Utility matrix, to create indices per variable and per constraint and a ranking procedure that is based on this Utility matrix. Summarizing the information per each row of the Utility matrix a vector, named Utility vector, is proposed. Based on the Utility matrix and Utility vector, correspondingly, two algorithms are introduced and a proposed mathematical framework including both of them is presented. It is worth mentioning that the proposed algorithms are able to deal with LP problems with coefficients

that are positive, negative or zero. The two presented algorithms are directly compared in terms of the numerical results with the algorithms RAD and GRAD [4] [5] [6] [7] [8] . Those two algorithms derive more information from the constraints and less information from the objective function. Our two algorithms make use of the proximity between the coefficients of the constraints and those of the objective function. The approach here is this of the nonparametric statistics: By making use of the vectors’ ranking, instead of their arithmetic values, it is impossible to deal with issues of arithmetic nature such as the precision of the operations. In other words, because of the simplicity of the arithmetic procedure, it is also possible to avoid arithmetic errors. It is worth noticing that with the proposed technique, it is possible that some terms for

, are equal to zero. Last but not least, under the notion of the proposed utility matrix, known methods such as those referred to in [9] and [10] can be formulated under the proposed framework.

As has already been mentioned in many real-world problems, the number of constraints is much larger than the number of variables, thus the problems are more complicated. A generator of random linear programming problems with many constraints may represent the complexity of such problems.

In the rest of the paper, in Section 2 a concise literature review to establish the current state of research in LP problems for handling binding and redundant constraints, is presented. In Section 3 the proposed mathematical framework and the new algorithms under this, are presented. In Section 4 an analytic step-by-step example for each of the new algorithms is given. In Section 5 firstly a way to create the random LP problems used for testing the effectiveness of the proposed algorithms is presented. In the same Section, the proposed algorithms are tested in well-known benchmarks (netlib problems), that in their majority make use of sparse matrices [11] [12] . Then, a discussion of these results is introduced via hierarchical regression trees. Moreover, in the same Section, a proper fitting function is given. Finally, in Section 6 conclusions for the present paper and proposals for further research are described.

2. Literature Review

In [13] during progressively solving an LP problem with the simple method, having a feasible solution, it is estimated whether a constraint that does not participate yet in this solution is redundant or not. In [14] a theorem is presented concerning whether a constraint is redundant or not in a specific LP problem. In [15] , Gal proposed a procedure for the determination of redundant constraints when the nearby vertex is degenerated.

In [10] the theorem given in [14] is used and the method classifies the constraints according to decreasing

, where

is the angle between the i-th constraint and the objective function. Moreover, the vector b is not taken under consideration for the classification, thus it is not affected by whether the initial LP problem is a minimization or a maximization one. In [16] [17] , by using a ratio of

,

and

, a constraint is classified either in the group of redundant constraints or the binding ones. This ratio expresses the contribution of the variable

of this constraint in the maximum change (that arises when the constraint applies as equality) of the objective function. In [18] a method for finding solutions to LP problems using some of the constraints by a heuristic approach is proposed.

In [2] [9] [19] new approaches that gradually add constraints to the given problem in order to solve and simultaneously alleviate the redundant constraints to LP problems, are addressed. The proposed method in [20] utilizes the bounds of the variables whenever it is possible in order to define redundant constraints. In [21] [22] techniques for removing redundant rows and columns utilizing robust bounds are proposed by making parallel use of the dual LP problem; in [23] a method is proposed for reducing time and for more data manipulation for the above techniques.

Moreover, there is a class of methods comparing the ratios

and

concerning two constraints k, s, in order to investigate the redundancy [24] [25] . In addition, in [26] [27] heuristic methods have been proposed to identify the redundant constraints in order to alleviate them for the solution process of the LP problem. In [28] [29] , a comparison is presented between the heuristic method presented in [26] and Llewellyn’s rules [24] . Finally, in [30] the ratio

is used for each constraint. Constraints are ranked in ascending order according to the distance that this ratio has from the average of the ratios of all constraints. From the above, it can be observed that a lot of the research work concerns the identification of redundant constraints although binding constraints are the required ones as they determine the solution of the problem. A very good idea was presented in [4] [5] [6] [7] [8] [10] where an effort was made to locate the bindings, by observing the angles that form the constraints with the objective function.

In [3] using the sign of slopes of the constraints and the slope of the objective function, it becomes noticeable that the possibility for a constraint to be binding is reduced as the difference between its slope and the slope of the objective function becomes larger. In [31] the idea presented in [3] was generalized for n-dimensional LP problems. It presents a way of classifying the constraints based on the ratio of the coefficients

. In [32] a combination of the proximity technique, with the one proposed in [30] is developed, in order to derive a new ranking using the minimum or the average or the geometric mean of the ranking numbers of the constraints.

3. The Proposed Methodology

This section discusses a new technique, called proximity based on a proposed criterion, called the proximity criterion. This technique ranks the constraints by prioritizing those that are more likely to be binding. Two new algorithms are introduced with the proposed technique.

3.1. Definitions

Before the detailed presentation of the proximity technique, the basic necessary definitions are presented:

Definition 1. A feasible solution is a solution for which all the constraints are satisfied [33] .

Definition 2. The feasible region is the area

denoted by all the feasible solutions [33] .

Definition 3. The optimal solution of an LP problem is the solution for which all the constraints of the problem are satisfied and which gives the maximum value of the objective function if we are referring to a problem of maximization and the minimum value for a problem of minimization, accordingly [33] .

Definition 4. A constraint on an LP problem is called redundant if its removal from the list of constraints does not change the feasible area of the problem [34] .

Definition 5. A constraint is called weakly redundant if it is redundant and its boundary touches the feasible region [15] [35] .

Definition 6. The binding constraint is a constraint of an LP problem, for which equality in an optimal solution point applies [33] [34] .

3.2. The Proximity Criterion

The key idea for the proposed criterion is to use the proximity between the coefficients of the objective function and the corresponding coefficients of the constraints. Proximity has the advantage of having no cost and of not being complex while making comparisons and can be used in LP problems with a very large number of constraints. In order to define the proximity of each constraint with the objective function, a norm

is required. The proposed criterion, named proximity criterion, applies as follows:

Definition 7. Proximity criterion: rank the constraints of an LP problem, based on the norm

between the coefficients

of the objective function and the coefficients of each constraint i, for

.

3.3. The Framework

The proposed framework under which LP algorithms focus on the detection of redundant or binding constraints is presented. In this specific framework, both the already existing algorithms referred to in [9] and [10] as well as the newly proposed ones can be included. For the setting of this framework, the following definitions are given:

Definition 8. The Utility matrix U is a matrix with its elements to be norms. For the calculation of each element of the matrix U, it is possible to use data from matrix A, and vectors c and b given in Equation (1).

Definition 9. The Ranking matrix

is the matrix having as elements the rankings that arise from the elements of the Utility matrix U taken either by rows or by columns.

Definition 10. The Ranking vector

is the vector having as elements the rankings that arise from the Utility matrix U by applying a norm for each row of U.

Due to the above definitions, the matrix used by Corley [10] is considered to be the Utility matrix:

Furthermore, the method presented in [10] may be considered to be the Ranking Vector that reveals the position in descending order of the sum of the elements of each row of the Utility Matrix.

Likewise, based on the definitions above, the Utility matrix that corresponds to the method presented at [9] is:

and the method presented at [9] may be considered to be the Ranking Vector that reveals the position in ascending order of the sum of the elements of each row of the Utility Matrix.

3.4. The Proposed Technique

For the implementation of the proposed technique two algorithms are presented where the utilized Utility matrices U are defined as follows:

Definition 11. The PRMac Utility matrix is a Utility matrix

with elements

given by:

(2)

Definition 12. The ranking matrix

of a Utility matrix

is defined as the matrix where each element

, for

and

, presents the ranking position of the element

for each j-th column of the matrix

, in ascending order.

Definition 13. The ranking vector

is defined as:

(3)

where

is the ranking of the coefficients c of the objective function in descending order.

3.5. The Proximity Ranking Matrix (PRMac) Algorithm

In the first of the two proposed algorithms, named Proximity Ranking Matrix (PRMac), the Utility matrix given in Definition 11 is used. Specifically, the presented algorithm takes under consideration for each constraint the proximity of

to the corresponding

, for

. The rising ranking indicates the possibility each constraint has to be binding. The constraints with smaller rankings are assumed to the more likely to be the binding ones. Especially for the equality constraints, since they are classified to be binding in advance, they should receive a

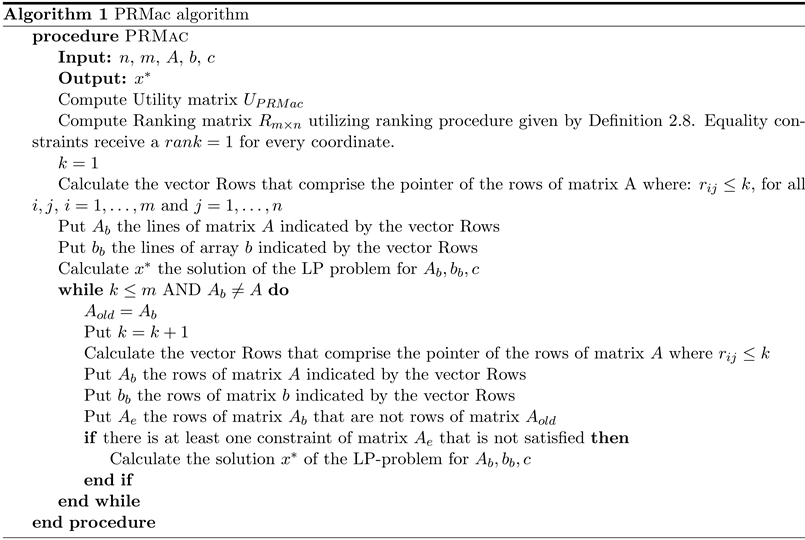

for every coordinate. In the proposed algorithm, the step-by-step procedure presented in [9] is utilized and the constraints are inserted by groups according to their ranking.

Specifically, the proposed algorithm gradually puts into classes the constraints of the LP problem, in order to solve a smaller problem, by considering the ranking of each variable. For this scaled insertion, the order given by the ranking matrix is taken under consideration, thus the smaller rankings per each variable are prioritized. During this insertion, the constraints that have not been used are checked according to their ranking, whether they are satisfied or not. In case they are satisfied, by making use of the Theorem 3.1 presented in [14] , the solution that has been found is not affected. In the opposite case, they must be added to the existing system and a new solution point is searched. Thus, progressive insertion of the useful constraints for solving the given LP problem is taking place, by classifying the constraints according to the possibility to be binding. As the process evolves, the majority of the remaining constraints are utilized for the verification of an optimal solution. As a result, the minority of the remaining constraints is expected to be used in the solving procedure of the given LP problem.

The proposed algorithm PRMac, is presented in Algorithm 1.

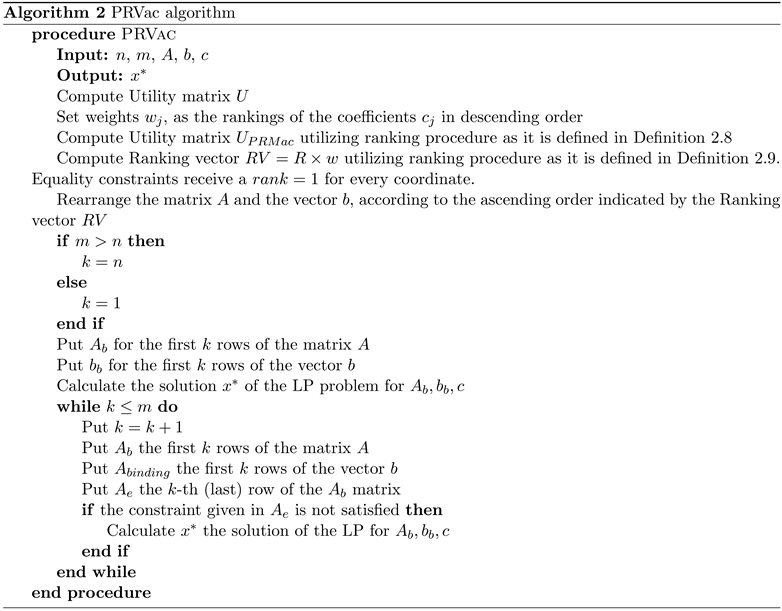

3.6. The Proximity Ranking Vector (PRVac) Algorithm

A different approach is the information that arises from each line of the utility matrix by making use of the PRMac algorithm, to be substituted from a number. Thus, the matrix is substituted from a vector. In order to accomplish this, techniques that calculate the norms of the vectors can be used, for instance

or statistical indicators like mean, median, geometric mean, harmonic mean, etc. By making use of the same information as in the PRMac algorithm, the new proposed algorithm, named Proximity Ranking Vector (PRVac) transforms the Utility matrix

to a proximity vector, by applying

norm for each row of the Utility matrix. The elements of the proximity vector are derived from the inner product of the vector’s ranking of coefficients

of the objective function with the vector of the rankings

, where

is given by Definition 12 for the i-th constraint. As a result, a ranking vector as it is given in Definition 13 is derived.

On the contrary, with the proposed PRMac algorithm, the priority of the constraints is given in vector form instead of a matrix. Thus, the progressive insertion of the constraints is implemented by ascending priority order instead of priority groups, using the Theorem 3.1 presented in [14] , as before.

The proposed PRVac algorithm is presented in Algorithm 2. A simple example of the PRMac and PRVac algorithms is given in the next section.

4. Numerical Example

, where:

,

,

.

The optimum point is

and the binding constraints are the 1st and the 5th ones.

Compute Utility matrix by relation (2) and the corresponding ranking matrix

(4)

Set k = 1

For k = 1 no constraint is selected, so the system has a solution that tends to infinity.

Set k = 2

For k = 2, based on the ranking matrix R, Equation (4), three constraints are selected: the 1st, the 2nd and the 5th. Thus

.

The optimum of the above problem satisfies the rest of the constraints of the given problem. Thus, in the following steps (

) the PRMac algorithm checks, without solving the problem, that the rest of the constraints are met.

In Figure 1 two steps of the PRMac algorithm are depicted. In the left hand

![]()

Figure 1. Graphical representation of the algorithms PRMac and PRVac.

side, for

where the first insertion of constraints takes place and in the right hand side the final step of the algorithm, with all the constraints included.

PRMac algorithm verifies that for

the constraints given by the lines

and

are satisfied in the minimum point B defined for

.

By utilizing PRVac algorithm after the computation of Utility matrix, weights are given as follows:

(5)

The PRVac algorithm, in its first step for

, inserts the fifth and the first constraints that are the binding constraints of the problem. Thus, it will give the point B as the optimum for the given LP-problem.

On the left-hand side of Figure 2, the ranking of the proximity of the constraints by coordinate is depicted according to the matrix R of the relation (4) for the PRMac algorithm. The corresponding representation for the matrix R, as given in relation (5), of the PRVac algorithm is given on the right-hand side of Figure 2. Because of the fact that the PRVac algorithm makes an overall consideration of the rankings, due to uniformity, the rankings have been represented in the bisector of the first quadrant. In both figures, the numbering of the ranking vectors corresponds to the numbering of the constraints. The second constraint, in black, is chosen by the PRMac algorithm because it has the same ranking as

![]()

Figure 2. Graphical representation of prioritization zones made by proximity rankings using R matrices of the PRMac and the PRVac algorithms.

the fifth constraint, in red, in terms of the first coordinate. The binding constraints, the first and fifth ones, are shown in red. With the PRVac algorithm, the second constraint is ranked to be third. Note that the optimal point has been located already by using the first and the fifth constraints, which have been chosen as those with the smallest ranking.

5. Numerical Results

The R package was used for the numerical results [36] - [41] . The multivariate analysis was performed using mgcv and nlme libraries [42] - [48] . Graphs have been made using the libraries ggplot2, gridExtra, RColorBrewer and sjplot [49] - [55] while for the formatting of the tables libraries htmltools, kableExtra and jtools have been used [56] [57] [58] . Moreover, hierarchical regression trees were implemented using rpart and rpart.plot R-libraries [59] [60] . The data files are all deposited to Github and it is possible to grant access to whoever it is desired to do so through the link given in [61] .

From all those mentioned above, it can be noticed that the PRVac algorithm makes gradual insertion of the constraints in the LP problem that is going to be solved, contrary to the PRMac algorithm where the insertion takes place in groups (their number tends to the dimension n of the LP problem) and the number is analogous to the dimension of the LP problem, so it increases together with the increment of the dimension. Thus, the PRMac algorithm inserts large groups of constraints regardless they are binding or not, whilst the PRVac algorithm inserts a constraint only after checking it for being binding. As a result, while the dimension of the problem increases, this feature gives the PRVac an advantage over the PRMac algorithm, whereas in LP problems with a few number of constraints, the PRMac has an advantage, because of the insertion of small groups of constraints. For the testing of the proposed algorithms were used:

• Set of 69 well-known benchmarks (netlib problems) [11] [12] , where the number of the variables is significantly bigger than the number of the constraints (subsection 5.1).

• Random problems, where the number of the constraints is significantly bigger than the number of the variables (subsection 5.2).

5.1. Random LP-Problems

5.1.1. Creating the Random LP-Problems

The random LP-problems are constructed by randomly selected variables and coefficients as follows:

• Dimension of each LP problem

,

,

• Number of constraints

,

,

•

,

•

.

In order to increase the probability that a solution exists in the randomly constructed LP-problems, a random solution (

) of the created LP-problem under the assumption the coefficients of

to be randomly chosen in the interval

is given. Afterwards,

is calculated and perturbation, with random noise of scale 10% takes place. The chosen perturbation is not particularly great, because the objective is the creation of random LP problems that can be solved and it is a common assumption that while the dimension of the LP problem n increases, bigger perturbation in b could make extremely difficult the existence of an LP problem that can be solved.

Figure 3 and Figure 4 depicts the histogram of the number of variables and

![]()

Figure 3. Histogram of number of variables where different colors indicate the number of binding constraints (n.binding).

![]()

Figure 4. Histogram of number of constraints where different colors indicate the number of binding constraints.

constraints for the test problems accordingly. Furthermore, Figure 5 shows the histogram of the percentages of binding constraints in the total number of constraints. In all those figures, a colourful escalation has been applied, depending on the number of binding constraints of the LP problem.

Table 1 represents the frequencies of the ratio of the number of binding constraints divided by the total number of constraints. As can be observed, the greater frequency 3499 lies in the interval

followed by the frequency 2748 in the interval

. Note that, the average of the binding constraints is 13.168% of a total number of constraints.

![]()

Figure 5. Histogram of percentage of the binding constraints.

![]()

Table 1. Frequency table of the ratio of the number of binding constraints divided by the total number of constraints.

Remark 1. The constraints that satisfy the relation

are considered to be the binding ones. Without further checking the linear independence of these constraints, this relationship does not ensure that the constraints are binding and not weakly redundant. Therefore, in numerical results, there is a possibility that a number of weakly redundant constraints may be included in the binding constraints.

5.1.2. Including Binding Constraints by a Proper Ranking

As has already been mentioned, whenever a mathematical formulation is given in order to describe a real-world LP problem, usually the number of constraints is more than the necessary ones [2] . Furthermore, when dealing with real-world LP problems, it is impossible to know which and how many the binding constraints are before spotting an optimal solution in case it exists. Utilizing the first k ranked constraints per variable the prop.binding is defined as:

An ascending ranking procedure for classifying the constraints is considered to be successful if small rankings are given to the binding constraints where the number k,

, is randomly selected. In order to examine whether the constraints having small ranking in the PRMac and PRVac algorithms have a greater probability to be bindings, hierarchical regression trees for prop.binding are utilized. Specifically, hierarchical regression trees were used to check if the size of k (hence k/m) affects the size of the prop.binding. Figure 6 presents the hierarchical regression tree for prop.binding using the PRMac algorithm where

is the independent variable. Note that in every node or leaf of the hierarchical tree, it has depicted the percentage of the sample that the node or the leaf corresponds to, as well as the mean value of the dependent variable

![]()

Figure 6. Hierarchical regression tree for PRMac algorithm. The gradations of colors from red to green reflect the average from the lowest to the highest average values of prop.binding.

(prob.binding). The leaves of the tree, refer to the conditions of the independent variable. When the condition is satisfied, then the left-hand side leaf of the hierarchical tree is followed, otherwise, the right-hand side leaf is followed. Moreover, in order to reduce the risk of over-fitting, the process of pruning has been applied. From Figure 6, the following conclusions can be extracted:

• On average the prop.binding for the 10,000 randomly constructed LP-problems is 0.97 (root of the tree).

• If k is selected such that k/m is greater than 0.12 (that happens in 89% of the random LP problems) the number of prop.binding is 0.99, which means that the 99% of the binding constraints has been selected (the rightmost leaf of the tree).

• If k is selected such that k/m is greater than 0.037 (that happens in 97% of the random LP-problems) the number of prop.binding is 0.98, which means that the 98% of the binding constraints has been selected (the node over the rightmost leaf of the tree).

Thus, the PRMac algorithm is able to achieve high percentage in locating the bindings in a small number of selected constraints per variable, which leads to the conclusion that the information from every variable is important.

Figure 7 represents the hierarchical regression tree for the PRVac algorithm. At this point, it is worthy to notice that the PRVac algorithm summarizes the information of the ranking matrix (which is used in PRMac algorithm) into a vector. A random number of k selected constraints,

, is defined and the first k ranked constraints are used.

• On average the prop.binding for the 10,000 randomly constructed LP problems is 0.58 (root of the tree).

• If k is selected such that k/m is greater than 0.75 (that happens in 27% of the random LP problems of the sample) the number of prop.binding is 0.91, which means that the 91% of the binding constraints has been selected (the rightmost leaf of the tree).

![]()

Figure 7. Hierarchical regression tree for PRVac algorithm. The gradations of colors from red to green reflect the average from the lowest to the highest average values of prop.binding.

• If k is selected such that k/m is greater than 0.52 (that happens in 52% of the random LP problems of the sample) the number of prop.binding is 0.8, which means that the 80% of the binding constraints has been selected (the node over the rightmost leaf of the tree).

5.1.3. Relation of the prop.binding with the Ranking

In this subsection the research is focused on the model which would be able to interpret better the value of the prob.binding in relation to the ratio

, by making use of the results from the randomly created LP-problems.

In Figure 8, the scatter plot of the number of variables with the number of constraints for the 10,000 randomly constructed LP-problems is presented. In the same figure, for every LP problem, the ratio of detecting binding constraints has been illustrated colourfully by making use of darker colours for the smaller ratio and lighter colours for the bigger values. This specific illustration has been selected in order to detect visually whether the PRMac algorithm is behaving in the same way for all LP-problems having m constraints and n dimensions. This illustration reveals on the one hand that yellow dots correspond to a high percentage for the prob.binding are the dominant ones—as a result the binding constraints are gathered in low rankings independently of the value of the ratio

—and on the other hand the darker dots correspond in low percentage for the prob.binding,have a rather rare appearance and are evenly distributed among the yellow dots.

Figure 9(a) presents on the left-hand side the scatter plot of the prob.binding in relation with the ratio

and Figure 9(b) presents the same figure with the

![]()

Figure 8. Number of variables and number of constraints of the 10,000 random LP-problems.

![]() (a)

(a)![]() (b)

(b)

Figure 9. Scatter plots of the prop.binding variable in accordance with (a) k/m (b) log(k/m).

logarithmic transformation of the x axis. The left hand side of the scatter plot confirms the fact that for small values of the ratio

, small changes of it cause big increment in prop.binding. While the value of the ratio

increases, the rate of change decreases. This exponential behaviour of the function leads to the logarithmic transformation of the ratio

. Thus, on the right-hand side of Figure 9(b), a sigmoid relation that connects the possibility of including in the first k constraints the bindings and the logarithm of the ratio

is appeared.

ANOVA Table 2 shows that the dependent variable prob.binding is affected by the ratio

.

The influence of k/m on prop.binding is confirmed by ANOVA table given in Table 2. Hence, the logit model of the log of k over m is applied and the result of this model is presented in Table 3.

From Table 3 it is confirmed that

is statistically significant (p-value < 0.001). Moreover, the coefficient of

(41.40) derives that the increment of

will result in a fast increment of the ratio for the detection of the binding constraints.

Figure 10 presents the graph of the logistic function (black curve) derived from the logit model. It can be observed that the points corresponding to the random problems are gathered by the majority above the approximation curve, so the approximated value underestimates the performance of the algorithm. As a result, in the randomly generated LP problems, the usage of

of the constraints is expected to be better in the detection of the binding constraints than the approximation.

5.2. NETLIB LP-Problems

The proposed algorithms were applied to the well-known 69 benchmark problems (netlib problems) [11] [12] .

In Table 4 the results from the netlib problems are depicted with the following labels:

• netlib is the name of the netlib problem.

• n the dimension of each LP problem.

• m the number of constraints.

• n.binding the percentage of the number of the constraints that are binding or weakly redundant constraints (see Remark in subsection 5.2).

• 50 is the number of constraints that must be chosen after ranking the constraints with the proper algorithm, in order to include 50% of the n.binding constraints of the LP problem.

![]()

Table 2. ANOVA Table of the prop.binding in relation with k over m.

![]()

Figure 10. The graph of the logistic function derived from the logit model.

![]()

Table 4. Ranking results of the constraints by making use of the PRMac, PRVac, RAD and GRAD algorithms in netlib problems.

• 90 is the number of the constraints that must be chosen after ranking the constraints with the proper algorithm, in order to include 90% of the n.binding constraints of the LP problem.

• Sparsity the percentage of matrix A (or vectors b and c) elements that are non-zero.

From Table 4 it can be derived that one feature of the netlib problems is that the number of binding constraints is significantly large (on average 78% of the constraints). It is worthy to mention, that those problems have a big percentage of coefficients equal to zero, both for the constraints and for the objective function. Specifically, on average 98.5% of the elements of array A are equal to zero (a sparsity of 0.015), 60.8% of elements of array b (a sparsity of 0.392) and 58.2% of elements of array c (a sparsity of 0.418) are equal to zero. Moreover, it is remarkable the fact that by making use of the PRMac algorithm. The constraints are ranked in such a way that the first 61% of them, including 90% of the binding constraints of the LP problem, considering the fact that the PRMac is implemented in problems with a large number of binding constraints.

From Table 4 it can be revealed that one feature of the netlib problems is that the number of the constraints is on average 1/3 of the number of the variables (941 constraints - 3587 variables).

In the random LP problems, the ranking of the constraints takes place in such a way that for solving the LP problem, 90% of the constraints are used on average. For the netlib problems, it can be observed that the first group of the constraints for the PRMac algorithm can include 61% of the constraints.

Moreover, from Table 4 it can be observed that the average for including in the first k constraints the 50% of the binding constraints is slightly better in the PRVac algorithm, 0.48 instead of 0.55. For 90% of the constraints, the situation is reversed clearly in favour of the PRMac algorithm (0.61 instead of 0.87). On the other hand, the GRAD algorithm is proved to be slightly better in comparison with RAD, for both those cases, with the corresponding values to be 0.56 instead of 0.58 and 0.82 instead of 0.86. Overall PRVac is proved to be slightly better in 50% of the binding constraints, while for 90% of the constraints, PRMac is proved to be far a way better than all the others.

There are Netlib problems where due to their “nature” (the same

for one variable in all the constraints) the PRMac algorithm gives “poor” classification in some variables of the constraints. Thus, the PRMac algorithm attributes to a large number of the constraints the same ranking number. On the contrary, by making use of the PRVac algorithm this disadvantage is overcome and the Netlib problems can be faced efficiently. Consequently, in general, it is preferable when matrix A creates a “poor” classification, to make use of the PRVac algorithm.

5.3. An Overview of the Numerical Results of PRMac and PRVac Algorithm

In order to calculate the mean number of iterations while solving an n-dimensional LP problem with m constraints by using the Simplex Method [62] [63] [64] [65] presented the following approximation relations:

(6)

where L1 and L2 are the minimization norms used in the regression process. As it can be observed, because the exponent in

is smaller in comparison with

,

is expected to do a smaller number of iterations. Moreover, for the same problem by using the dual Simplex method in [62] [63] [64] [65] , the following relation is given for both of the norms:

(7)

The above relations calculate the mean number of iterations. As in every iteration, the dimension of the utilized vectors is m + n and every reduction in the number of m leads both in the reduction of the mean number of iterations as well as of the dimension of vectors used in every iteration.

Next, the relations (6) and (7) are going to be used in order to evaluate the reduction of the computational cost given under the proposed algorithms, for the netlib problems provided in Table 4. Based on the percentages derived from columns Mac90 and Vac90 of Table 4, it is estimated that the percentage of the constraints required in order to include the 100% of the binding constraints lie in the half distance between the 100% and Mac90 or the 100% and Vac90 accordingly. It gives a percentage of 79.19 for the PRMac algorithm and 93.07 for the PRVac algorithm, respectively.

According to relation (6), if the Simplex method is going to be used in order to solve the given LP problem, the mean number of iterations is going to be approximately 501 - 703, and the dimension of vectors used in every iteration is approximately 663, because

and

.

So, by making use of the PRMac algorithm, the reduction of m by 20.81 leads on average in 101 - 150 fewer iterations, while the vectors used in every iteration have on average 177 fewer dimensions. This is equivalent to a reduction of the iterations from 5.54% to 10.34% with the PRMac algorithm. Likewise, by making use of the PRVac algorithm, a reduction of m by 6.93% leads on average in 34 - 50 fewer iterations, while the vectors used in every iteration, have on average 92 fewer dimensions. This is equivalent to a reduction of the iterations from 6.94% to 8.72% with the PRVac algorithm.

Similar results derive from (7) concerning the Dual Simplex method. The usage of the Dual Simplex method requires approximately 238 iterations and the vectors used in every iteration have on average 663 dimensions. By making use of the PRMac algorithm, the number of vectors used in every iteration, have on average 177 fewer coordinates. In case the dual simplex method is used, the reduction of the iterations is going to be on average 5969, which leads to a percentage decrease of 63.01%. Whereas with the PRVac algorithm, the dimension of vectors used in every iteration has on average 92 fewer dimensions. In case the dual simplex method is used, the reduction of the iterations is going to be on average 2763, which leads to a percentage decrease of 40.27%. Despite the exceptional approach in ranking the binding constraints that become feasible by using

, the fact that primarily

may be zero and secondarily if

the j-th variable is not taken under consideration in the ranking of the constraints is a shortcoming. On the contrary, the proposed algorithms of this paper make use of proximity in the classification of the constraints, so both the coefficients of the constraints

as well as the coefficients of the objective function

are taken into consideration, without worsening the functional cost. As a result, arithmetic operations between vectors do not take place and the precision of the operations is not affected. More precisely, in the PRVac algorithm, the classification takes place by taking into account the rankings per coordinate and by setting as weights the values of

, giving the smaller value to the coordinate of

having the greater absolute value. Netlib problems, have

in a regular basis, so in order to test the RAD algorithm,

was set to be

instead of

. In the same algorithm, the constraints are classified by considering as best the one with the biggest RAD (smallest ranking).

6. Conclusions and Further Research

In the present paper, two new algorithms for solving LP problems are presented. The proposed algorithms rank the constraints according to the possibility of being binding based on the idea of proximity which can be used in LP problems with a very large number of constraints. Moreover, in the theoretical framework of the proposed algorithms already known algorithms can be involved. By making use of the distance of the coefficients of the constraints with the corresponding coefficients of the objective function, the proposed PRMac and PRVac algorithms, utilize a utility matrix or a utility vector correspondingly. In order to rank the constraints the process is simple, as it is only based on comparisons between the coefficients and does not require any further calculations.

For testing PRMac and PRVac algorithms, a generator of random LP problems is addressed for constructing 10,000 random LP problems of dimension n with m constraints, where

. As has already been mentioned a generator of random linear problems with many constraints may represent a wide range of real-world problems. From the numerical results, it is derived that in the majority of the random LP problems, the proposed algorithms are considered to be efficient since they rank the constraints in such a way that the binding ones are gathered in a lower ranking. Moreover, the application of the proposed algorithms to problems of the Netlib repository gave slightly better numerical results than their application to random problems. The algorithms that use as a classification criterion a ratio where

lies in the numerator, multiply the numerator with zero, even when a single

. Thus, the other variable of the numerator does not affect the result. In reality, though,

affects only the constraints but not the objective function. For instance, the constraints that may be met in transportation companies (logistics), may affect the profits but are not related to the company. As a result, the company has not the ability to improve the constraints in order to increase its profits. In the objective function that reflects the profits, these variables exist with a

coefficient. In the methods where

lies in the numerator, it remains unchanged, whether the aforementioned variables exist or not, while it is well known that they contribute significantly in the definition of the feasible area. With the proposed methodology in this paper, the proximity of each

with the corresponding

is calculated, so even if

, the j-th variable is taken into account for the ranking and the classification of the constraints. The already existing algorithms use a measure of comparison and afterwards based on this measure. They rank the constraints. The renovation of the proposed algorithms here is that by knowing in advance that they are intended in ranking instead of using precise data. They focus in advance on ranking the proximity of

with the corresponding

and based on those values they classify the constraints. In other words, the achievement of great precision in the arithmetic operations is sacrificed, just in order for the algorithms to remain efficient under any circumstances as it was shown above. In general, when dealing with optimization problems with constraints, the constraints contribute the major part in comparison with the objective function, because they define the feasible area of the LP problem. As it concerns the objective function, the only point of interest is the extreme point that lies in the feasible area. So, it is obvious that an efficient algorithm should prioritize the constraints over the objective function.

As a future research, extensive use of the proposed theoretical framework can be done, by making use of different utility functions or by combining different techniques. The promising results of the present paper reveal the worthiness of dedicating time and research for the adaptation of the proposed algorithms in various categories of LP problems with constraints.

Moreover, it is worth to study the implementation of the substitution of the utility matrix used in the PRMac algorithm by utilizing a different norm or different statistical indices of univariate analysis.

Acknowledgements

The authors wish to acknowledge Prof. Theodoula N. Grapsa for her helpful suggestions.