Recognizing Expression Variant and Occluded Face Images Based on Nested HMM and Fuzzy Rule Based Approach

Received 11 March 2016; accepted 20 May 2016; published 23 May 2016

1. Introduction

Face recognition has rapidly emerged as an active research area in biometrics, supporting secure real-world applications such as security monitoring, law enforcement and surveillance systems [1] . Because a single image per person is used to identify the face, the database avoids storing a huge number of face images, which suits systems with restricted storage capability [2] . Most real-world applications of face recognition technology must identify a person under uncontrolled conditions such as variations in illumination, pose and expression [3] - [5] and occlusion [6] . The objective is to identify a person from the database under any of these unpredictable conditions. Face recognition [7] is therefore considered more challenging than fingerprint, iris and speech recognition in the biometric field. In the proposed work, the face is recognized under expression and occlusion variation with a high recognition rate. Face recognition approaches are broadly classified into four major categories: geometric based methods, template matching methods, appearance based methods and statistical approaches. Geometric based methods [8] recognize the face according to the geometric relationships or spatial distances between facial features, by locating feature points on the image [9] ; examples include elastic bunch graph matching, landmark localization and feature tracking [10] . Template based methods [1] compare the given image with a set of stored templates generated using statistical tools such as the support vector machine (SVM), linear discriminant analysis (LDA) and principal component analysis (PCA). Appearance based methods [11] build a 2D/3D morphable model of human faces, whose parameters are used to recognize faces. Reconstruction methods are used to build such 2D/3D models [10] [12] [13] .
In statistical approaches [14] , only some of the facial features are taken, and the relationships between these features are estimated to identify a person. The hidden Markov model (HMM) is one such statistical approach; it forms an observation vector sequence by treating every facial feature as a state in a Markov chain, and it calculates a similarity index against the training set to recognize faces. The HMM technique has been reported to produce a higher recognition rate than alternative techniques [15] . The proposed work uses a nested hidden Markov model (NHMM) with the Baum-Welch algorithm [16] to find the relevant characteristics of the image.

2. Related Work

Many face recognition methods do not consider expression and occlusion variations. Bronstein [17] introduced a face recognition method that handles missing data and produces high recognition rates on a limited database. In that study, the missing data were synthetically derived from frontal scans of the image. Dibeklioglu [18] presented a curvature based segmentation method to recognize persons under significant pose variations. However, it cannot be applied to facial scans with yaw rotations greater than 45 degrees, and it requires storing several samples per person. Jingo [19] reconstructed a 3D generic elastic model (GEM) for each subject from a 2D image for pose invariant recognition. The distance between the synthesized image and the test image is computed using a normalized correlation matcher. It is less accurate for expression invariant or extreme pose invariant face recognition. Josef [20] presented an image matching method formulated on Markov random fields (MRF). Label pruning and error pre-whitening measures were introduced to increase accuracy while addressing the computational burden. Regressor based cross pose face representation [12] was improved by analyzing the bias and variance in regression across different pose variations. Ridge regression and lasso regression were also explored to overcome the problems of subspace based face representation, and Gabor features were used to improve recognition rates. Perakis [21] introduced a 3D face recognition method to handle pose variation. The face model is reconstructed from a partial face model, and it produces an 83.7% recognition rate. An automatic landmark detector estimates the pose and detects missing data within the partial face model. Rakesh [22] utilized directional and texture information from face images for face recognition. Scale adaptive digital filters and local descriptors were used to capture directionality and to extract features respectively.
Rangan [23] improved the performance of a face recognition system under pose variation by using truncated transform domain feature extraction (TTDFE). A binary particle swarm optimization based feature selection algorithm was used to search the feature space for the optimal feature subset. Selva raj [24] investigated feature extraction from facial electromyography (FEMG) signals for classifying six emotional states: happy, sad, afraid, surprise, disgust and neutral. A k-nearest neighbor (kNN) classifier was used to map the extracted features to the respective emotions. Principal component analysis was employed to retrieve the emotional information from FEMG signals, and the efficiency of these features was analyzed against conventional statistical features. Vetter [13] proposed a 3D face reconstruction method that fits a 3D morphable model to 3D facial scans to produce synthetic 3D faces from scanned data. These frontal facial scans were tested on the FERET database, and the work does not consider face images whose yaw rotation exceeds 40 degrees. Zhisong Pan [25] developed two models, empirical kernel sparsity preserving projection and empirical kernel sparsity score, which were employed for feature extraction and feature selection respectively. The nonlinearly separable data were mapped into a kernel space in which the nonlinear similarity can be captured, and the data in kernel space were then reconstructed by sparse representation to preserve the sparse structure.

3. Expression and Occlusion Invariant Face Recognition

The expression and occlusion invariant face recognition involves five steps:

− Face detection.

− Fuzzy data conversion.

− Feature extraction.

− NHMM training.

− Face Recognition.

3.1. Face Detection

The proposed work is mainly focused on the training and face recognition steps. The process of extracting the facial portion from the input image is known as face detection. It eliminates the background, hair, ears and other unwanted portions of the facial image. It identifies the face location and extracts the relevant facial regions by using principal component analysis (PCA) [26] . The image is normalized and cropped to contain only the face, without background. The facial features are detected according to the skin color and the circular shape of the iris.

3.2. Fuzzy Data Conversion

The detected face image is converted into fuzzy domain data by the following algorithm, which starts by initializing the image parameters: size, and minimum, mid and maximum gray levels. The fuzzy rule-based approach is an efficient method for many image processing tasks.

The algorithm to convert image into fuzzy data is given below:

1) Read the image and convert it into grayscale image if it is RGB image.

2) Find the size of the image (M × N).

3) Find the minimum, maximum and average (mid) gray levels of the image.

4) For x = 0:M, For y = 0:N

5) If gray_value between zero and min

Then a = 0;

6) Else if gray_value between min and mid

Then a = (1/(mid-min)) *min + (1/(mid-min)) *data;

7) Else if gray_value between mid and max

Then a = (1/(max-mid)) *mid + (1/(max-mid)) *data;

8) Else if gray_value between max and 255

Then a = 1;
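The conversion steps above can be sketched in Python as follows. This is a minimal illustration rather than the authors' implementation: the printed expressions in steps 6 and 7 are replaced here by an assumed linear ramp (0 at min, 0.5 at mid, 1 at max) so that every output stays in [0, 1], and the function names `fuzzify` and `fuzzify_image` are hypothetical.

```python
def fuzzify(gray, g_min, g_mid, g_max):
    """Map one gray level to a fuzzy value in [0, 1].

    Assumption: linear ramp from 0 at g_min through 0.5 at g_mid to 1 at
    g_max. This is an illustrative choice, not the paper's printed formula.
    """
    if gray <= g_min:
        return 0.0
    if gray >= g_max:
        return 1.0
    if gray <= g_mid:
        return 0.5 * (gray - g_min) / (g_mid - g_min)
    return 0.5 + 0.5 * (gray - g_mid) / (g_max - g_mid)


def fuzzify_image(image):
    """Convert a grayscale image (list of rows of ints) into fuzzy data."""
    pixels = [p for row in image for p in row]
    g_min, g_max = min(pixels), max(pixels)
    g_mid = sum(pixels) / len(pixels)  # average gray level (step 3)
    if g_min == g_max:
        # Flat image: no contrast to spread, so return all zeros.
        return [[0.0 for _ in row] for row in image]
    return [[fuzzify(p, g_min, g_mid, g_max) for p in row] for row in image]


if __name__ == "__main__":
    sample = [[0, 50], [100, 250]]  # toy 2 x 2 image
    print(fuzzify_image(sample))
```

The flat-image guard is needed because the average gray level equals the minimum (or maximum) only when all pixels are identical, which would otherwise cause a division by zero.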

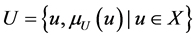

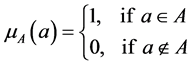

This algorithm converts the detected face images of both authorized and unauthorized persons into fuzzy domain data. Each fuzzy datum (a) is assigned as a member of the fuzzy set A or U, as represented in Equations (1) and (2), which are the fuzzy sets for authorized and unauthorized persons respectively.

$A = \{a_1, a_2, \ldots, a_n\}$ (1)

$U = \{u_1, u_2, \ldots, u_n\}$ (2)

where n is the number of face images and $a_1, a_2, \ldots, a_n$ are the fuzzy data obtained by applying the algorithm to authorized persons, collected together as members of the fuzzy set A. Similarly, $u_1, u_2, \ldots, u_n$ are the members of the fuzzy set U for unauthorized persons.

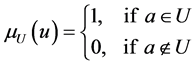

The general representations of these fuzzy sets [27] are given by Equations (3) to (6):

(3)

(4)

(5)

(6)

where a and u denote generic members of the fuzzy sets A and U respectively, X denotes the universe of discourse, and µA(a) and µU(u) are the membership functions [27] . These fuzzy sets are used in the face recognition process to make a decision with the fuzzy rule based method.

3.3. Feature Extraction

The Haar wavelet transform [28] is employed to extract the features of individual images. The Haar transform is a mathematical analysis tool for image decomposition and feature extraction within the wavelet transform, using decomposition and reconstruction matrices. Image segmentation [29] is carried out by comparing co-occurrence matrix features of size N × N derived from the wavelet transform, decomposing horizontally and vertically.

In this work, the Haar wavelet technique is used for extracting facial features of the face image. Each feature block of the detected face image is extracted based on Haar wavelet by considering following steps:

1) The face image is decomposed into sub images of size 4 × 4 or 8 × 8 in vertical and horizontal directions from left corner of the image [9] as shown in Figure 1.

2) Approximation coefficient matrix (CA) and detailed coefficient matrices such as vertical, horizontal and diagonal (Cv, Ch and Cd respectively) are computed from the sub images [29] .

3) Steps 1 and 2 are repeated for each CA up to the specified level of decomposition.

4) New feature blocks are generated as given in Equation (3) by finding pixel differences at the desired resolution level.

5) The new feature block is normalized by calculating the mean (µ) and variance (σ) of the feature block, as in Equation (7):

(7)

where f and F denote the normalized feature block and the unnormalized feature block respectively.
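As a rough illustration of steps 1, 2 and 5, the sketch below applies one level of a 2-D Haar decomposition to a small block and then normalizes a feature block. It rests on several assumptions that are not taken from the text: the orthonormal scaling by 1/2, the sign and naming convention of the detail matrices, and a normalization of the form f = (F − µ)/s with s the standard deviation. The function names are hypothetical.

```python
def haar_level(block):
    """One level of a 2-D Haar transform on a block with even side lengths.

    Returns (cA, cH, cV, cD). Scaling and sign conventions vary between
    libraries; the ones used here are an assumption.
    """
    cA, cH, cV, cD = [], [], [], []
    for i in range(0, len(block), 2):
        row_a, row_h, row_v, row_d = [], [], [], []
        for j in range(0, len(block[0]), 2):
            a, b = block[i][j], block[i][j + 1]
            c, d = block[i + 1][j], block[i + 1][j + 1]
            row_a.append((a + b + c + d) / 2)  # approximation
            row_h.append((a + b - c - d) / 2)  # difference between rows
            row_v.append((a - b + c - d) / 2)  # difference between columns
            row_d.append((a - b - c + d) / 2)  # diagonal difference
        cA.append(row_a)
        cH.append(row_h)
        cV.append(row_v)
        cD.append(row_d)
    return cA, cH, cV, cD


def normalize(block):
    """Zero-mean, unit-spread normalization of a feature block (assumed form)."""
    values = [v for row in block for v in row]
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    std = variance ** 0.5
    if std == 0:
        return [[0.0 for _ in row] for row in block]
    return [[(v - mean) / std for v in row] for row in block]


if __name__ == "__main__":
    cA, cH, cV, cD = haar_level([[1, 2], [3, 4]])
    print(cA, cH, cV, cD)
    print(normalize([[1, 3]]))
```

Repeating `haar_level` on the returned approximation matrix gives the multi-level decomposition described in step 3.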

3.4. NHMM Training

An HMM is a statistical model consisting of a set of states that form a Markov chain [15] . An NHMM includes a set of observation data sequences and comprises two forms of stochastic finite process. Figure 2 depicts the transitions from one state to another, and the probabilities between the states and the observed data.

Figure 1. Image segmentation using Haar wavelet.

Figure 2. The one dimensional (1-D) HMM structure.

An NHMM [30] can be described by a state transition probability matrix T, an initial state probability distribution π, and the emission probability E associated with the observations for each state. An NHMM is defined as λ = [π, T, E]. In general, the transition between states depends on the transition probability and the emission probability. The one-dimensional HMM structure (Figure 2) is suitable for analyzing 1-D random signals such as speech signals. The NHMM structure (as shown in Figure 3) is developed from the one-dimensional HMM, in which s1, s2, s3 and s4 specify the sub states of the features eyes, nose, mouth and chin respectively. Each super state represents one subject, and the number of super states rises as the number of subjects increases.

The NHMM involves three stages, namely evaluation, decoding and learning, to train the system with the different sets of face images kept in a database.

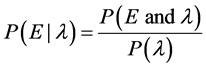

3.4.1. Evaluation

A face image is considered as a super state consisting of four sub states, namely eyes, nose, mouth and chin (as shown in Figure 3), to construct the NHMM. The number of super states equals the number of training images in the database.

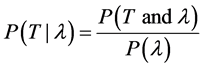

Each normalized feature block is taken as a sub state that denotes a facial feature in the NHMM. The normalized feature blocks are arranged column-wise to form a sub state sequence (Markov chain) s = {e, n, m, c}. The state transition matrix T and the emission matrix E are calculated to find the probabilities of the possible state transitions and the probability distribution between states respectively. The probability of the transition matrix P(T|λ) and the probability of the emission matrix P(E|λ) are represented in Equations (8) and (9).

(8)

(9)

NHMM parameters such as number of states, number of symbols, state sequence, pseudo counts, pseudo transitions, transition tolerance and emission tolerance are calculated to model NHMM [15] .
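A count-based estimation of T and E can be sketched as below. The sketch assumes that the sub state sequence and a discrete observation symbol sequence are both available as integer indices, and that pseudo counts are simply added to the raw counts before each row is normalized. `estimate_hmm` and its parameters are hypothetical names, not part of the proposed system.

```python
def estimate_hmm(states, symbols, n_states, n_symbols, pseudo=0.0):
    """Estimate transition (T) and emission (E) matrices by counting.

    states  : list of state indices, e.g. 0=e, 1=n, 2=m, 3=c
    symbols : list of observation symbol indices, same length as states
    pseudo  : assumed pseudo count added to every cell before normalizing
    """
    T = [[pseudo for _ in range(n_states)] for _ in range(n_states)]
    E = [[pseudo for _ in range(n_symbols)] for _ in range(n_states)]

    # Count observed state-to-state transitions.
    for prev, nxt in zip(states, states[1:]):
        T[prev][nxt] += 1
    # Count which symbol was emitted in which state.
    for state, symbol in zip(states, symbols):
        E[state][symbol] += 1

    def normalize_rows(matrix):
        result = []
        for row in matrix:
            total = sum(row)
            result.append([x / total if total else 0.0 for x in row])
        return result

    return normalize_rows(T), normalize_rows(E)


if __name__ == "__main__":
    # Toy data: two passes over the sub states e, n, m, c.
    state_seq = [0, 1, 2, 3, 0, 1, 2, 3]
    symbol_seq = [0, 1, 1, 0, 0, 1, 0, 0]
    T, E = estimate_hmm(state_seq, symbol_seq, n_states=4, n_symbols=2)
    print(T)
    print(E)
```

A non-zero `pseudo` value keeps unseen transitions or emissions from receiving zero probability, which is the usual motivation for pseudo counts.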

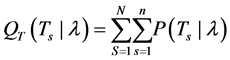

3.4.2. Decoding

The decoding stage of the NHMM uses the Baum-Welch algorithm [16] to estimate the maximum likelihood for each sequence as follows:

1) The posterior state probability p, the logarithm of the sequence probability logPseq, the forward probability fs and the backward probability bs, according to the scale s, are calculated [15] .

2) The maximum likelihood value is initialized for the first sequence or updated for subsequent sequences according to the changes in the NHMM parameters.

3) The overall transition summation and emission summation are found for each 2D state and up to the entire sequence length, where N and n are number of super states and sub states respectively, whereas, S and s represent each super and sub states respectively.

4) The overall transition summation and emission summation values are updated according to the changes in the NHMM parameters.

5) The number of iterations is adjusted for the likelihood estimation of each sub state sequence, and the overall probabilities of the transition and emission matrices are estimated. They are denoted in Equations (10) and (11):

(10)

(11)
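The scaled forward probabilities and the logarithm of the sequence probability mentioned in step 1 can be illustrated with a standard scaled forward pass for a discrete HMM λ = [π, T, E]. The sketch covers only that forward computation, not the full Baum-Welch re-estimation or the nested super state structure, and `forward_log_prob` is a hypothetical name.

```python
import math


def forward_log_prob(obs, pi, T, E):
    """Scaled forward pass for a discrete HMM.

    obs : list of observation symbol indices
    pi  : initial state distribution
    T   : transition matrix, T[i][j] = P(state j at t+1 | state i at t)
    E   : emission matrix, E[i][k] = P(symbol k | state i)
    Returns log P(obs | lambda).
    """
    n = len(pi)
    log_p = 0.0
    alpha = [pi[i] * E[i][obs[0]] for i in range(n)]
    for t in range(len(obs)):
        if t > 0:
            alpha = [
                sum(alpha[i] * T[i][j] for i in range(n)) * E[j][obs[t]]
                for j in range(n)
            ]
        scale = sum(alpha)  # scaling factor for this time step
        if scale == 0:
            return float("-inf")  # sequence is impossible under the model
        alpha = [a / scale for a in alpha]
        log_p += math.log(scale)
    return log_p


if __name__ == "__main__":
    pi = [0.5, 0.5]
    T = [[0.5, 0.5], [0.5, 0.5]]
    E = [[1.0, 0.0], [0.0, 1.0]]
    print(forward_log_prob([0, 1], pi, T, E))
```

Rescaling at every step keeps the forward variables from underflowing on long sequences; the sum of the log scaling factors equals the log likelihood.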

3.4.3. Learning

In the training phase, each face image kept in the database is trained individually with the Baum-Welch algorithm to find the maximum likelihood (as described above). The number of iterations increases with the number of training images kept in the database. The accuracy of the training process depends on the number of NHMM iterations. The likelihood probability of the training images, LS, is represented by Equation (12):

(12)

3.5. Face Recognition

The face recognition involves two procedures such as matching score estimation and fuzzy decision making.

1) Matching score estimation:

During the recognition process, an expression- or occlusion-varied face image is taken as the test image and compared with the still images in the database. In some cases, an unauthorized face image may be taken as the test image, which is then recognized as an unknown person.

After the facial region is selected by the face detection process, the feature blocks are generated and normalized using the Haar wavelet transform. These feature blocks are arranged column-wise to form a Markov chain. The maximum likelihood is found for the test image by following the NHMM training procedure (as shown in Figure 4).

The maximum likelihood of the test image, LTS, and the likelihood probability of the training images, LS, are used to calculate the matching score, R, in Equation (13):

(13)

2) Fuzzy decision making:

As discussed in the fuzzy data conversion step, the fuzzy sets A and U are formed, and they are used for decision making through the fuzzy if-then rule given in Equation (14):

IF R is “high” THEN A ELSE U (14)

Figure 4. Architecture of expression and occlusion invariant face recognition.

where “R is high” is the antecedent, in which R is the matching score and “high” is the fuzzy predicate, and “A ELSE U” is the consequent, in which A and U are fuzzy sets. If the value of R is high, the fuzzy data of the test image moves towards the fuzzy set A; otherwise, it moves towards the fuzzy set U. The test image is considered authorized if its fuzzy data is a member of the fuzzy set A; otherwise, it is considered unauthorized. The matching score R becomes high when the test image is an authorized person’s image.
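The if-then rule can be sketched as a small decision function. Everything numeric here is a hypothetical placeholder: the text does not state how “high” is quantified, so the sketch assumes a linear membership function for “high” between two illustrative bounds and a cut level of 0.5, and it takes the matching score R as an already computed number.

```python
def membership_high(r, low, high):
    """Degree to which the matching score r is 'high' (assumed linear ramp)."""
    if r <= low:
        return 0.0
    if r >= high:
        return 1.0
    return (r - low) / (high - low)


def decide(r, low=0.4, high=0.8, cut=0.5):
    """IF R is 'high' THEN A ELSE U.

    low, high and cut are illustrative values, not taken from the paper.
    Returns "A" (authorized) or "U" (unauthorized).
    """
    return "A" if membership_high(r, low, high) >= cut else "U"


if __name__ == "__main__":
    for score in (0.3, 0.9):
        print(score, decide(score))
```

In practice the bounds would have to be tuned on the matching scores observed for known authorized and unauthorized test images.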

4. Experimental Results and Performance Testing

The sample images (as shown in Figure 5) were collected from the JAFFE (Japanese Female Facial Expression) database to implement expression and occlusion invariant face recognition in MATLAB. The system was trained with one sample image per person, whereas the other images were used for recognition testing. The optimal size for all expression- and occlusion-varied face images is 256 × 256 pixels.

4.1. Experimental Result

The input images were cropped to remove the unnecessary background area by exploiting PCA [20] . The cropped face images were enhanced to make features such as the eyes, nose, mouth and chin easier to locate (as shown in Figure 6). The facial region was detected automatically based on the skin color and the simplicity of the background. The eye positions were detected automatically based on the color cue and the circular shape of the iris.

The Haar wavelet transform was used to decompose the detected facial region by computing the coefficient matrices. The features were extracted and normalized by the Haar wavelet (as shown in Figure 7). These features were trained by the NHMM and kept in the database. Observation vectors were generated from the transformed image.

Figure 5. Sample images from JAFFE database.

Figure 6. Detection phase: (a) detected face; (b) enhancement of the face image to extract facial features.

Figure 7. Training phase: (a) feature extraction of face images; (b) normalization of the features.

During the recognition process, the same procedure is followed for the test image, and its feature set is compared with the trained feature sets kept in the database. The maximum likelihood is found between the input images and the test image with expression variations (as shown in Figure 8(a)). Based on the likelihood similarity, the test image is recognized when it has the highest matching score; otherwise, it is treated as an unrecognized person. The proposed work uses the JAFFE dataset and the AR dataset to recognize expression-varied images (as shown in Figure 8(a)) and occlusion-varied images (as shown in Figure 8(b)) respectively.

4.2. Performance Testing

The expression invariant face recognition uses the JAFFE dataset, which contains several kinds of expressions for each of its subjects. The proposed work is tested on 212 face images of ten subjects with seven kinds of expressions: angry, happy, neutral, fear, disgust, sad and surprise (as listed in Table 1).

Table 1 shows the number of recognized images (R) and the number of unrecognized images (E) for each subject across the seven kinds of expressions. Each subject has a different number of expression-varied images, and all of them are tested; Table 1 records whether each was recognized or unrecognized. For instance, the third subject has three angry face images, of which two are recognized (R) and one is not recognized (E). Similarly, the third subject has 23 expression-varied face images in total, of which 21 are recognized (R) and the remaining two are not recognized (E).

Figure 8. Recognition phase: (a) expression invariant face recognition using the JAFFE dataset; (b) occlusion invariant face recognition using the AR dataset.

Table 1. Experimental evaluation on the JAFFE dataset according to different kinds of expressions. R denotes the number of recognized images and E denotes the number of unrecognized images (error).

Similarly, the proposed work was tested on 116 occluded face images, of which it recognizes 110, as shown in Table 2. The work was evaluated with different kinds of occlusion, such as persons wearing glasses or a scarf, or having a beard or hair falling over the face.

The proposed work successfully recognizes 312 of the 328 expression- and occlusion-varied face images. The overall recognition rate is calculated as follows:

Recognition rate = number of images recognized/number of test images = 312/328 = 95.12%.

Error rate = number of images unrecognized/number of test images = 16/328 = 4.88%.

Therefore, the system produces a 95.12% overall recognition rate for expression- and occlusion-varied face images. The recognition rate and error rate are shown in Table 3.
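The arithmetic behind these rates can be checked with a few lines of Python. The counts are those reported in the text (212 JAFFE images, 116 AR images, 312 recognized overall, 110 recognized on AR); the number recognized on JAFFE is derived by subtraction, and the helper name `percentage` is hypothetical.

```python
def percentage(part, total):
    """Return part/total as a percentage."""
    return 100.0 * part / total


jaffe_total, ar_total = 212, 116          # test images per dataset
total_images = jaffe_total + ar_total     # 328 images overall
recognized = 312                          # recognized over both datasets
ar_recognized = 110                       # recognized on the AR dataset
jaffe_recognized = recognized - ar_recognized  # derived, not stated directly

overall_rate = percentage(recognized, total_images)
error_rate = percentage(total_images - recognized, total_images)
jaffe_rate = percentage(jaffe_recognized, jaffe_total)
ar_rate = percentage(ar_recognized, ar_total)

if __name__ == "__main__":
    print(f"overall recognition rate: {overall_rate:.2f}%")
    print(f"overall error rate:       {error_rate:.2f}%")
    print(f"JAFFE recognition rate:   {jaffe_rate:.2f}%")
    print(f"AR recognition rate:      {ar_rate:.2f}%")
```

The recognition and error rates sum to 100% by construction, which is a quick consistency check on the reported figures.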

A comparison of the recognition rate of the proposed work with existing methods, by expression, is shown in Table 4. The recognition rate for each expression is depicted in a graph (Figure 9(b)). The proposed work produced the highest recognition rate when compared with existing methods such as the Active Appearance Model [31] , Scaled Gaussian Process Regression (SGPR) [8] , Coupled SGPR (CSGPR) [4] and the combination of SVM [32] and HMM [16] .

The proposed work produced recognition rates of 96.6%, 93.3%, 92.86%, 96.55%, 93.9%, 97% and 96.7% for the neutral, angry, disgust, fear, happy, sad and surprise expressions respectively, with an average recognition rate of 95.28%. The performance analysis is also depicted in a graph (Figure 9(a)) that shows the increased recognition rate of the proposed work over the existing works.

Table 2. Experimental evaluation on the AR dataset according to different kinds of occlusion. S denotes the subject, T denotes the total number of images, R denotes the number of recognized images and E denotes the number of unrecognized images (error).

Table 3. Recognition rate vs. error rate.

Table 4. Comparison of the recognition rate of the proposed work with existing methods.

Figure 9. (a) Comparison of recognition rate with existing methods; (b) comparison of recognition rate across different expression variations for the proposed work.

5. Conclusion

The proposed work addresses the face recognition problem across seven kinds of varying expressions. It uses a new model that improves the recognition rate and operates under varying expressions and occlusion. Compared with existing work, the proposed work improves performance measures such as recognition rate, accuracy and recognition time. This work produced an overall recognition rate of 95.12% for expression- and occlusion-varied face images and a recognition rate of up to 97% in the expression-wise evaluation. Future work may improve the overall recognition rate by recognizing face images that combine all constraints, namely expression, occlusion, pose and illumination.
