1. Introduction

Since Kak [1] firstly proposed the concept of quantum inspired neural computation in 1995; quantum neural network (QNN) has attracted great attention by the international scholars during the past decade, and a large number of novel techniques have been studied for quantum computation and neural network. For example, Gopathy et al. [2] proposed the model of quantum neural network with multilevel hidden neurons based on the superposition of quantum states in the quantum theory. Michail et al. [3] attempted to reconcile the linear reversible structure of quantum evolution with nonlinear irreversible dynamics of neural network. Michiharu et al. [4] presented a novel learning model with qubit neuron according to quantum circuit for XOR problem and described the influence to learning by reducing the number of neurons. Gupta et al. [5] defined a new mathematical model of quantum neural network, building on Deutsch’s model of quantum computational network, which provides an approach for building scalable parallel computers. Fariel [6] proposed the neural network with the quantum gated nodes, and indicated that such quantum network may contain more advantageous features from the biological systems than the regular electronic devices. In our previous works [7] , we proposed a quantum BP neural network model with learning algorithm based on the single-qubit rotation gates and two qubits controlled-rotation gates. Next, we proposed a neural network model with quantum gated nodes and a smart algorithm for it [8] , which shows superior performance in comparison with a standard error back propagation network. Adenilton et al. [9] proposed a weightless model based on quantum circuit. It is not only quantum-in- spired but also actually a quantum NN. This model is based on Grover’s search algorithm, and it can both perform quantum learning and simulate the classical models. At present, the fusion of quantum computation and neural computation is gradually becoming a new research direction.

In all of the above models, the fusion of quantum computing and supervised neural networks has been widely studied. However the fusion of quantum computing and unsupervised self-organizing neural network is relatively few. In the classical clustering algorithms, Cai et al. [10] proposed a new algorithm called K-Distributions for Clustering Categorical Data, and Huang [11] investigated clustering problem of large data sets with mixed numeric and categorical values. As it is known to all, unsupervised clustering is the only function of the self- organizing network. For self-organizing network, unsupervised clustering process, in essence, is the application process of the network. This is very different from BP network which must perform a supervised training process before application. Although we proposed quantum self-organizing networks with quantum inputs and quantum weights [12] , this model applied the supervised mode to training, which severely reduces its generalization ability. In addition, although quantum computing effectively enhances the performance of the traditional self-organizing networks, the fusion research of quantum computation and neural computation is still far from mature. It is necessary to further research a new way of integration between them, in order to further improve the performance of neural computation. Hence, we proposed a quantum self-organization network based on Bloch spherical rotation (BQSON), and designed its clustering algorithm in detail. In our approach, both the samples and the weights are denoted by qubits described in Bloch sphere; the weights of the competition winning node and its neighbourhood nodes are adjusted by rotating these qubits to corresponding sample qubit about rotation axis. The experimental results of a benchmark of IRIS clustering show that our approach is superior to the traditional clustering methods such as common self-organizing networks, K-mean clustering, and the adjacent clustering.

2. The Spherical Description and Rotation of Qubits

2.1. The Spherical Description of Qubit

In the quantum computing, a qubit is a two-level quantum system which could be described in two-dimension complex Hilbert space. According to principle of superposition, a qubit can be defined as

(1)

(1)

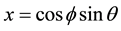

where ,

,

Notation like  is called the Dirac notation, and we will see it often in the following paragraphs, as it is the standard notation for states in quantum mechanics. Therefore, unlike the classical bit, which can only equal 0 or 1, the qubit resides in a vector space parameterized by the continuous variables

is called the Dirac notation, and we will see it often in the following paragraphs, as it is the standard notation for states in quantum mechanics. Therefore, unlike the classical bit, which can only equal 0 or 1, the qubit resides in a vector space parameterized by the continuous variables  and

and . The normalization condition means that the qubit’s state can be represented by a point on a sphere of unit radius, called the Bloch sphere. The Bloch sphere representation is useful as it provides a geometric picture of the qubit and of the transformations that can be applied to its state. This sphere can be embedded in a three-dimensional space of Cartesian coordinates (

. The normalization condition means that the qubit’s state can be represented by a point on a sphere of unit radius, called the Bloch sphere. The Bloch sphere representation is useful as it provides a geometric picture of the qubit and of the transformations that can be applied to its state. This sphere can be embedded in a three-dimensional space of Cartesian coordinates ( ,

,  ,

, ). Thus, the state

). Thus, the state  can be written as

can be written as

(2)

(2)

By definition, a Bloch vector is a vector whose components  represent a point on the Bloch sphere. We can say that the angles

represent a point on the Bloch sphere. We can say that the angles  and

and  define a Bloch vector, as shown in Figure 1.

define a Bloch vector, as shown in Figure 1.

2.2. The Rotation of Qubit about an Aaxi on the Bloch Sphere

In this work, we adjust the weights of competition layer by rotating them around an axis towards the target qubit on the Bloch sphere. This rotation can simultaneously change two parameters  and

and  of a qubit and can automatically achieve the best matching out of two adjustments, which can better simulate the quantum behaviour. To achieve this rotation, it is crucial to determine the rotation axis, as it can directly impact the convergence speed and efficiency of algorithm. To determine the rotation axis, we propose the following method.

of a qubit and can automatically achieve the best matching out of two adjustments, which can better simulate the quantum behaviour. To achieve this rotation, it is crucial to determine the rotation axis, as it can directly impact the convergence speed and efficiency of algorithm. To determine the rotation axis, we propose the following method.

Let ![]() and

and ![]() denote two points on the Bloch sphere. The rotation axis for rotating the qubit from W to X can be written as tensor product of W and X, and the relation of these three vectors is shown in Figure 2.

denote two points on the Bloch sphere. The rotation axis for rotating the qubit from W to X can be written as tensor product of W and X, and the relation of these three vectors is shown in Figure 2.

Let the Bloch coordinates of ![]() and

and ![]() be

be ![]() and

and![]() . According to the above method, the axis of rotating

. According to the above method, the axis of rotating ![]() to

to ![]() can be written as

can be written as

![]() (3)

(3)

![]()

Figure 1. Qubit description on the Bloch sphere.

![]()

Figure 2. The rotation axis of a qubit on the Bloch sphere.

From the principles of quantum computing, on the Bloch sphere a rotation through an angle ![]() about the axis directed along the unit vector

about the axis directed along the unit vector ![]() is given by the matrix

is given by the matrix

![]() (4)

(4)

where ![]() denotes an unit matrix,

denotes an unit matrix, ![]() denotes the Pauli matrix as follows

denotes the Pauli matrix as follows

![]() (5)

(5)

Hence, on the Bloch sphere, a rotation through an angle ![]() about the axis

about the axis ![]() that rotates the current qubit

that rotates the current qubit ![]() towards the target qubit

towards the target qubit ![]() can be written as

can be written as

![]() (6)

(6)

and the rotation operation can be written as

![]() (7)

(7)

2.3. The Measurement of Qubits

From the principles of quantum computing, the coordinates x, y, and z of a qubit on the Bloch sphere can be measured by the Pauli operators using the following equations.

![]() (8)

(8)

![]() (9)

(9)

![]() (10)

(10)

3. Quantum Self-Organization Neural Networks Model

We propose the quantum self-organization neural networks model based on the Bloch spherical rotation are shown in Figure 3, where both inputs and weight values are qubits described on the Bloch sphere.

Let ![]() denote the inputs, and

denote the inputs, and ![]() denote the weight

denote the weight

values of the jth node in competition layer. By the projection measuring, the Bloch coordinates of ![]() and

and ![]() can be written as

can be written as ![]() and

and![]() , respectively. From the spherical geometry, the shortest distance between two points on a sphere is defined as the length of the minor arc on the big circle defined by these two points and the centre of Bloch sphere. As a result of the Bloch sphere radius equal

, respectively. From the spherical geometry, the shortest distance between two points on a sphere is defined as the length of the minor arc on the big circle defined by these two points and the centre of Bloch sphere. As a result of the Bloch sphere radius equal

![]()

Figure 3. The quantum self-organization neural networks model.

to 1, the spherical distance between ![]() and

and ![]() equal to the angle between them, and can be written as

equal to the angle between them, and can be written as

![]() (11)

(11)

Hence, the distance between ![]() and

and![]() , namely, the output of the jth node in competition layer may be given by

, namely, the output of the jth node in competition layer may be given by

![]() (12)

(12)

4. Quantum Self-Organization Neural Networks Algorithm

4.1. Quantum State Description of the Samples

First, all samples data are converted to [0, 1]. Let ![]() denote the lth sample. We adopt the following normalization method.

denote the lth sample. We adopt the following normalization method.

![]() (13)

(13)

where ![]() and

and ![]() respectively denote the maximum and the minimum of all samples.

respectively denote the maximum and the minimum of all samples.

Let sample after normalization be![]() , we use convert

, we use convert ![]() to the phase of qubits by the following equation

to the phase of qubits by the following equation

![]() (14)

(14)

![]() (15)

(15)

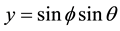

At this point, ![]() may be converted to qubits on the Bloch sphere, as shown in the following equation.

may be converted to qubits on the Bloch sphere, as shown in the following equation.

![]() (16)

(16)

where![]() .

.

4.2. Competitive Learning Rules

Let ![]() denote the weight value of the jth node in the competition layer, as follows

denote the weight value of the jth node in the competition layer, as follows

![]() (17)

(17)

For the lth sample![]() , according to the Equations (11)-(12), the spherical distance between

, according to the Equations (11)-(12), the spherical distance between ![]() and

and ![]() can be written as

can be written as

![]() (18)

(18)

Suppose that the competition layer has C nodes, and that the node with a minimum distance is defined as the winning one. Hence, the winning node should satisfy the following equation

![]() (19)

(19)

4.3. Network Clustering Algorithm

The self-organizing network is a typical unsupervised clustering model; it is suitable for solving the problem of don’t know the class number of clustering beforehand. Its training is completely different from the traditional BP neural networks. If a self-organizing network must apply supervised information in clustering, it is powerless for clustering problems with no supervision information available. The training process of our model does not contain any prior knowledge about samples classification results; otherwise will lose its generalization ability, which is the shortcoming of Ref. [12] . Our approach can be summarized as follows.

Step 1 Quantum state description of the sample. Convert the samples to qubit states by Equations (13)-(16). Measure the quantum samples by Equations (8)-(10) to obtain their Bloch coordinates.

Step 2 The weights of networks initialization. Initialize all the networks weights to randomly distribution of qubits on the Bloch sphere, as shown below.

![]() (20)

(20)

![]() (21)

(21)

where![]() ,

, ![]() ,

, ![]() denotes the number of competition nodes, and

denotes the number of competition nodes, and ![]() denote the random number in (0, 1).

denote the random number in (0, 1).

Step 3 The parameters of networks initialization. Include: the maximum iterative steps![]() , the initial value of learning rate

, the initial value of learning rate![]() , the finial value of learning rate

, the finial value of learning rate![]() , the initial value of neighbourhood radius

, the initial value of neighbourhood radius![]() , the finial value of neighbourhood radius

, the finial value of neighbourhood radius![]() , the initial value of variance

, the initial value of variance![]() , the finial value of variance

, the finial value of variance![]() . Set the current iterative step

. Set the current iterative step ![]() to 0.

to 0.

Step 4 Compute the current learning rate, neighborhood radius, and variance by the following equations.

![]() (22)

(22)

![]() (23)

(23)

![]() (24)

(24)

Step 5 Measure all quantum weights in competition layer by Equations (8)-(10) to obtain their Bloch coordinates. For the lth sample ![]()

![]() , compute the corresponding winning node

, compute the corresponding winning node ![]() by Equations (18)-(19).

by Equations (18)-(19).

Step 6 For the lth sample![]() , in the competitive layer node array, select the neighborhood

, in the competitive layer node array, select the neighborhood ![]() with the centre

with the centre ![]() and the radius

and the radius![]() . For all nodes

. For all nodes ![]() in

in![]() , rotate each component

, rotate each component ![]() to the corresponding

to the corresponding![]() . The rotation angles are computed by the following equation

. The rotation angles are computed by the following equation

![]() (25)

(25)

where ![]() denote the spherical distance between the jth node and the j*th node.

denote the spherical distance between the jth node and the j*th node.

According to theorem, the rotation axis and rotation matrix of rotating ![]() to

to ![]() can be written as

can be written as

![]() (26)

(26)

![]() (27)

(27)

Then, the rotation operation can be written as

![]() (28)

(28)

where![]() ,

, ![]() ,

,![]() .

.

Step 7 If![]() , save clustering results and stop, else set t = t + 1, and go back to Step 4.

, save clustering results and stop, else set t = t + 1, and go back to Step 4.

5. Simulations

In order to experimentally illustrate the effectiveness of the proposed BQSON, the IRIS samples are used to compare it with the Classical Self-Organization Network (CSON ), the K-mean clustering, the Nearest Neighbor Clustering (NNC) in this section. In these experiments, we perform and evaluate the BQSON in Matlab (Version

7.1.0

.246) on a Windows PC with 2.19 GHz CPU and 1.00 GB RAM. To enhance the impartiality of the comparison results, our BQSON has the same structure and parameters as the CSON in this experiment. The IRIS data set contains 150 four dimensional samples. The sample is divided into three classes, and each class contains 50 samples, such as setosa (1 - 50), versicolor (51 - 100), virginica (101 - 150).

5.1. Parameter Settings

Both BQSON and CSON have 4 input nodes and 100 competition nodes arranged in square matrix. Other parameters are set as follows:![]() ,

, ![]() ,

, ![]() ,

, ![]() ,

, ![]() ,

, ![]() ,

,![]() .

.

If the clustering results do not change in 100 consecutive steps, we call algorithm reach convergence. For K-mean clustering, the K is set to 3, and if each of variations of class centers is less than ![]() in two consecutive generations, the algorithm terminates. For NNC, the clustering threshold is set to

in two consecutive generations, the algorithm terminates. For NNC, the clustering threshold is set to![]() . If the distance of the sample X from the center of the kth class is less than

. If the distance of the sample X from the center of the kth class is less than![]() , the sample X is considered to belong to the kth class.

, the sample X is considered to belong to the kth class.

5.2. Clustering Result Contrasts

Considering the log likelihood function is more used in evaluation of the performance of the Bayesian classification network, and less used in clustering algorithm, therefore, we don’t use this index in our work. To facilitate comparison, two relevant concepts are defined as follows:

Precision Ratio Let the correct number of samples in the kth class after clustering be NPR, and the total number of samples in the kth class after clustering be NA. Precision Ratio is defined as follows

![]() (29)

(29)

Recall Ratio Let the correct number of samples in the kth class after clustering be NPR, and the total number of samples in the kth class before clustering be NB, Recall Ratio is defined as follows

![]() (30)

(30)

After 9797 iterative steps, the BQSON reaches convergence. All samples are divided into three classes, and each class contains 50 samples. The first class contains 50 “setosa” samples. The second class contains 48 “versicolor” samples and 2 “virginica” samples. The third class contains 48 “virginica” samples and 2 “versicolor” samples. The Precision Ratio and Recall Ratio of three class samples reach 100%, 96%, 96%, respectively.

After 10,000 iterative steps, the CSON does not reach convergence, where the first class contains 50 “setosa” samples, and for the rest of the 100 samples, the model is powerless. In addition, we running until 30000 iterative steps, the CSON is still not convergence.

For K-mean clustering, after 11 iterative steps, convergence is reached. The first class contains 50 “setosa” samples, the second class contains 61 samples where 47 samples are correct, and the third class contains 39 samples where 36 samples are correct. The Precision Ratio of three class samples reach 100%, 77.05%, 92.31%, respectively, and the Recall Ratio of three class samples reach 100%, 94%, 72%, respectively.

For NNC, All samples are divided into three classes. The first class contains 50 “setosa” samples, the second class contains 62 samples where 50 samples are correct, and the third class contains 38 samples where all 38 samples are correct. The Precision Ratio of three class samples reach 100%, 80.65%, 100%, respectively, and the Recall Ratio of three class samples reach 100%, 100%, 76%, respectively.

5.3. Clustering Results Analysis

From the experimental results, it is clear that both Precision Ratio and Recall Ratio of BQSON are the highest in four algorithms. These results show that the BQSON is obviously superior not only to the CSON but to the K-mean and the NNC as well.

Next, we theoretically explain the above experimental results. First, BQSON adopted a new way to calculate the distance of nodes between input layer and competition layer. In the existing clustering algorithms, the distance measurement is generally taken the Euclidean distance, which this distance is calculated based on coordinates. In BQSON, however, the distance is obtained by calculating the Bloch spherical distance of each dimension between input samples and competition nodes. Let ![]() denote the jth output corresponding to the ith input sample, where t denotes the current iterative step. Let

denote the jth output corresponding to the ith input sample, where t denotes the current iterative step. Let

![]() (31)

(31)

![]() (32)

(32)

![]() (33)

(33)

where C denotes the number of nodes in competition layer, and L denotes the total number of samples.

For the normalized samples, in CSON, the difference of each dimension between sample and weight ![]() belongs to [0, 1]. In BQSON, the difference of each dimension

belongs to [0, 1]. In BQSON, the difference of each dimension ![]() belongs to

belongs to ![]() by applying the Bloch spherical distance. Hence, in order to make fair, we compared the average variance of BQSON after dividing by

by applying the Bloch spherical distance. Hence, in order to make fair, we compared the average variance of BQSON after dividing by ![]() with that of CSON. The contrast results are shown in Figure 4.

with that of CSON. The contrast results are shown in Figure 4.

The Figure 4 shows that the average variance of BQSON is obviously greater than that of CSON, which suggests that the spherical distance have better distinguish ability than Euclidean distance for intensive samples. The “setosa” samples are relatively independent, which lead four algorithms to obtain the ideal clustering results. Both “versicolor” and “virginica” samples present overlapping intensive distribution, where the BQSON have also obtained the ideal clustering results. However, the clustering effect is not ideal for both K-mean and NNC based on the Euclidean distance, the CSON is completely unable to separate these two classes of samples.

Secondly, the BQSON adopted a new way of weight adjustment. In CSON, the vector differences between samples and weights are directly used to adjust the weighs, which is strongly influenced by learning rate, not easy to achieve fine adjustment. In BQSON, however, the weighs are adjusted by rotating them to a sample so as to approximate this sample. Due to the rotation is performed on the Bloch sphere, so, it may conduct a subtle adjustment of weights, which enhance the clustering ability of BQSON.

6. Conclusion

In this work, a quantum self-organization network clustering algorithm is proposed. In our approach, the weights of nodes in competition layer are updated by rotating qubits on the Bloch sphere. The comparative experiments of IRIS show that the clustering ability of proposed approach is significantly higher than the classic self-orga- nizing network. The Precision Ratio and Recall Ratio of BQSON increased by 7.5467% and 8.6667% more than those of K-mean and increased by 3.7833% and 5.3333% more than those of NNC. In addition, the BQSON is inefficient. It is also worth pointing out that, BQSON increases computing operations, such as the axis of rotation, rotation matrix and projection measurement, which lead to the increasing amount of calculation, prolonging running time, and reducing efficiency of clustering. However, the increase of these operations greatly improves

![]()

Figure 4. The average variance contrasts of BQSON and CSON.

the clustering ability of BQSON. In other words, BQSON is at the cost of computing efficiency for enhancing clustering ability, which is consistent with no free lunch theorem. Hence, how to enhance the computing efficiency of BQSON is the subject of further research.

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant No. 61170132), Natural Science Foundation of Heilongjiang Province of China (Grant No. F2015021), Science Technology Research Project of Heilongjiang Educational Committee of China (Grant No. 12541059), and Youth Foundation of Northeast Petroleum University (Grant No. 2013NQ119).

NOTES

*Corresponding author.