1. Introduction

The particle swarm optimization (PSO) algorithm is a global search strategy that can efficiently handle arbitrary optimization problems. In 1995, Kennedy and Eberhart introduced the PSO method for the first time [1] . Later, it received considerable attention and was shown to be capable of tackling difficult optimization problems. PSO mimics the social interactions between members of biological swarms. A good analogy for illustrating the concept is a swarm of birds. Birds (solution candidates) are allowed to fly in a specified field looking for food. It is believed that after a certain time (generations; iterations) all birds will gather around the highest concentration of food in the field (global optimum). At every generation, each bird updates its current location using information about the local and global optimums having achieved so far, and information received from other birds. These social interactions and continuous updates will guarantee that the global optimum will be found. The method has received considerable international attention because of its simplicity and because of its skill in finding global solutions to hard optimization problems. At present, the classical PSO method has been successfully applied to combinatorial optimization [2] [3] and numerical optimization [4] [5] . The following improvements have been applied to the classical PSO technique: modification of design parameters [6] - [8] , modification of the update rule of a particle’s location and velocity [9] [10] , integration with other algorithms [11] - [17] , and multiple sub- swarms PSO [18] [19] . These improvements have enhanced the performance of the classical PSO in varying degrees.

Quantum PSO (QPSO) is based on quantum mechanics. A quantum-inspired version of the classical PSO algorithm was first proposed in [20] . Later Sun et al. introduced the mean best position into the algorithm and proposed a new version of PSO, quantum-behaved particle swarm optimization [21] [22] . The QPSO algorithm permits all particles to have a quantum behavior instead of the Newtonian dynamics of the classical PSO. Instead of the Newtonian random walk, a quantum motion is used in the search process. The iterative equation of QPSO is very different from that of PSO, and the QPSO needs no velocity vectors for the particles. One of the most attractive features of the new algorithm is the reduced number of control parameters. Only one parameter must be tuned in QPSO, which makes it easier to implement. The QPSO algorithm has been shown to successfully solve a wide range of continuous optimization problems and many efficient strategies have been proposed to improve the algorithm [23] - [27] .

In order to enhance the optimization ability of QPSO by integrating quantum computation, we propose an improved quantum-behaved particle swarm optimization algorithm. In our algorithm, all particles are encoded by qubits described on the Bloch sphere. The three-dimensional Cartesian coordinates of qubits can be obtained from projective measurement. Since each qubit has three coordinate values, each particle has three locations. Each of the locations represents an optimization solution, which accelerates the search process by expanding the search scope of each variable from an interval on the number axis to an area of the Bloch sphere. The delta potential well is used to establish the search mechanism. Pauli matrices are used to perform the projective measurement, establish the rotation matrices and achieve qubits rotating about the rotation axis. The experimental results show that the proposed algorithm is superior to the original one in optimization ability.

2. The QPSO Model

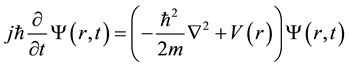

In quantum mechanics, the dynamic behavior of a particle complies with the following Schrodinger equation

(1)

(1)

where  denotes Planck’s constant, m denotes particle quality and V(r) denotes the energy distribution function.

denotes Planck’s constant, m denotes particle quality and V(r) denotes the energy distribution function.

In Schrodinger’s equation, the unknown is the wave function . According to the statistical interpretation of this wave function, the square of its magnitude denotes the probability density. Taking the delta potential well as an example, the design of QPSO is described as follows.

. According to the statistical interpretation of this wave function, the square of its magnitude denotes the probability density. Taking the delta potential well as an example, the design of QPSO is described as follows.

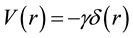

The potential energy distribution function of the delta potential well can be expressed as

(2)

(2)

where  denotes the potential well depth.

denotes the potential well depth.

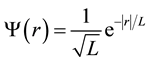

Substituting Equation (2) into Equation (1), we can obtain a particle’s wave function,

(3)

(3)

where  denotes the characteristic length of the delta potential well.

denotes the characteristic length of the delta potential well.

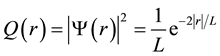

Therefore, a particle’s probability density function can be written as

(4)

(4)

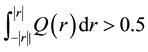

To increase the probability of a particles moving towards the potential well’s center, Equation (4) must satisfy the following relationship,

(5)

(5)

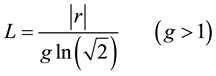

From Equations (4) and (5), the characteristic length, L, must satisfy

(6)

(6)

In the potential well, the dynamic behavior of the particles obeys the Schrodinger equation, in that the particles’ locations are random at any time. However, the particles in classical PSO obey Newtonian mechanics, where the particles must have definite locations at any time. This contradiction can be satisfactorily resolved by means of the collapse of the wave function and the

Monte Carlo

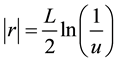

method. We first take a random number u in the

range (0,1) and let , then the following result can be obtained

, then the following result can be obtained

(7)

(7)

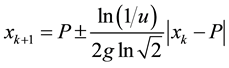

Using Equations (6) and (7), we can derive that . Let

. Let . It is then possible to derive

. It is then possible to derive . By letting

. By letting ,

,

![]() (8)

(8)

The above formula is the iterative equation of QPSO [26] [27] .

3. The QPSO Improvement Based on Quantum Computing

In this section, we propose a Bloch sphere-based quantum-behaved particle swarm optimization algorithm called BQPSO.

3.1. The Spherical Description of Qubits

In quantum computing, a qubit is a two-level quantum system, described by a two-dimensional complex Hilbert space. From the superposition principles, any state of the qubit may be written as

![]() (9)

(9)

where![]() ,

,![]() .

.

Therefore, unlike the classical bit, which can only equal 0 or 1, the qubit resides in a vector space parameterized by the continuous variables ![]() and

and![]() . The normalization condition means that the qubit’s state can be represented by a point on a sphere of unit radius, called the Bloch sphere. The Bloch sphere representation is useful as it provides a geometric picture of the qubit and of the transformations that can be applied to its state. This sphere can be embedded in a three-dimensional space of Cartesian coordinates (

. The normalization condition means that the qubit’s state can be represented by a point on a sphere of unit radius, called the Bloch sphere. The Bloch sphere representation is useful as it provides a geometric picture of the qubit and of the transformations that can be applied to its state. This sphere can be embedded in a three-dimensional space of Cartesian coordinates (![]() ,

, ![]() ,

,![]() ). Thus, the state

). Thus, the state ![]() can be written as

can be written as

![]() (10)

(10)

The optimization is performed in![]() , so the proposed method can be easily adapted to a variety of optimization problems.

, so the proposed method can be easily adapted to a variety of optimization problems.

3.2. The BQPSO Encoding Method

In BQPSO, all particles are encoded by qubits described on the Bloch sphere. Set the swarm size to m, and the space dimension to n. Then the i-th particle is encoded as

![]() (11)

(11)

where ![]()

From the principles of quantum computing, the coordinates x, y, and z of a qubit on the Bloch sphere can be measured by the Pauli operators written as

![]() (12)

(12)

Let ![]() denote the j-th qubit on the i-th particle. The coordinates (xij, yij, zij) of

denote the j-th qubit on the i-th particle. The coordinates (xij, yij, zij) of ![]() can be obtained by Pauli operators using

can be obtained by Pauli operators using

![]() (13)

(13)

![]() (14)

(14)

![]() (15)

(15)

In BQPSO, the Bloch coordinates of each qubit are regarded as three paratactic location components, each particle contains three paratactic locations, and each location represents an optimization solution. Therefore, in

the unit space![]() , each particle simultaneously represents three optimization solutions, which can be described as follows

, each particle simultaneously represents three optimization solutions, which can be described as follows

![]() (15)

(15)

3.3. Solution Space Transformation

In BQPSO, each particle contains 3n Bloch coordinates of n qubits that can be transformed from the unit space ![]() to the solution space of the optimization problem. Each of the Bloch coordinates corresponds to an optimization variable in the solution space. Let the j-th variable of optimization problem be

to the solution space of the optimization problem. Each of the Bloch coordinates corresponds to an optimization variable in the solution space. Let the j-th variable of optimization problem be![]() , and

, and

![]() denote the coordinates of the j-th qubit on the i-th particle. Then the corresponding variables

denote the coordinates of the j-th qubit on the i-th particle. Then the corresponding variables ![]() in the solution space are computed as follows

in the solution space are computed as follows

![]() (16)

(16)

![]() (17)

(17)

![]() (18)

(18)

where ![]()

3.4. The Optimal Solutions Update

By substituting the three solutions ![]() described by the i-th particle into the fitness function, we may compute its fitness, for

described by the i-th particle into the fitness function, we may compute its fitness, for ![]() Let

Let ![]() denote the best fitness so far, and

denote the best fitness so far, and ![]() denote the corresponding best particle. Let

denote the corresponding best particle. Let ![]() denote the own best fitness of the i-th particle, and

denote the own best fitness of the i-th particle, and ![]() denote the corresponding best particle. Further, let

denote the corresponding best particle. Further, let![]() ,

,![]() . If

. If ![]() then

then ![]() and

and![]() . If

. If ![]() then

then ![]() and

and![]() .

.

3.5. Particle Locations Update

In BQPSO, we search on the Bloch sphere. That is, we rotate the qubit around an axis towards the target qubit. This rotation can simultaneously change two parameters ![]() and

and ![]() of a qubit, which simulates quantum behavior and enhances the optimization ability.

of a qubit, which simulates quantum behavior and enhances the optimization ability.

For the i-th particle, let ![]() denote the current location of the j-th qubit

denote the current location of the j-th qubit ![]() on the Bloch sphere, and

on the Bloch sphere, and ![]() and

and ![]() denote its own best location and the global best location on the Bloch sphere. According to [27] , for

denote its own best location and the global best location on the Bloch sphere. According to [27] , for![]() , the two potential well centers in Equation (8) can be obtained using

, the two potential well centers in Equation (8) can be obtained using

![]() (19)

(19)

![]() (20)

(20)

where m denotes the number of particles, r denotes a random number uniformly distributed in (0, 1), and k denotes the iterative step.

Let O denote the center of the Bloch sphere and ![]() denote the angle between

denote the angle between ![]() and

and![]() . From the QPSO’s iteration equation, to make

. From the QPSO’s iteration equation, to make ![]() move to

move to![]() , the angle

, the angle ![]() needs to be rotated on the Bloch sphere so that

needs to be rotated on the Bloch sphere so that

![]() (21)

(21)

Let the qubit corresponding to the point ![]() be

be![]() . From the above equation we know that the new location of

. From the above equation we know that the new location of ![]() is actually the location of

is actually the location of ![]() after it is rotated through an angle

after it is rotated through an angle ![]() towards

towards![]() .

.

To achieve this rotation, it is crucial to determine the rotation axis, as it can directly impact the convergence speed and efficiency of algorithm. According to the definition of the vector product, the rotation axis of rotating ![]() towards

towards ![]() through an angle

through an angle ![]() can be written as

can be written as

![]() (22)

(22)

From the principles of quantum computing, the rotation matrix about the axis ![]() that rotates the current qubit

that rotates the current qubit ![]() towards the target qubit

towards the target qubit ![]() can be written as

can be written as

![]() (23)

(23)

and the rotation operation can be written as

![]() (24)

(24)

where ![]() and k denotes the iterative steps.

and k denotes the iterative steps.

4. Experimental Results and Analysis

4.1. Test Functions

Many benchmark numerical functions are commonly used to evaluate and compare optimization algorithms. In this section, the performance of the proposed BQPSO algorithm is evaluated on 8 standard, unconstrained, single-objective benchmark functions with different characteristics, taken from [28] - [30] . All of the functions are minimization problems.

1)![]() ;

;

2)![]() ;

;

3)![]() ;

;

4)![]() ;

;

5)![]() ,

,![]() ;

;

6)![]() ;

;

7)![]() ,

,![]() ;

;

8)![]() .

.

4.2. Experimental Setup

For all problems, the following parameters are used unless a change is mentioned. Population size: NP = 100 when D = 30 and NP = 80 when D = 20, the precision of a desired solution value to reach (VTR): VTR = 10 − 5

(i.e.![]() ) for

) for ![]() and

and![]() ; VTR = 100 for

; VTR = 100 for ![]() and

and![]() ; VTR = 0.1 for

; VTR = 0.1 for![]() ; VTR = 10

; VTR = 10

for ![]() and

and![]() ; VTR = 0.001 for

; VTR = 0.001 for![]() . The maximum of the number of function evaluations (MNFE): MNFE = 20000; The control parameter:

. The maximum of the number of function evaluations (MNFE): MNFE = 20000; The control parameter:![]() ; Halting criterion: when MNFE is reached, the execution of the algorithm is stopped.

; Halting criterion: when MNFE is reached, the execution of the algorithm is stopped.

To minimize the effect of the stochastic nature of the algorithms, 50 independent runs on 8 functions are performed and the reported indexes for each function are the average over 50 trials. If an algorithm finds the global minima with predefined precision within the preset MNFE the algorithm is said to have succeeded. Otherwise it fails. All of the algorithms were implemented in standard Matlab 7.0 and the experiments were executed on a P-II 2.0 GHz machine with 1.0 GB RAM, under the WIN-XP platform.

4.3. Performance Criteria

Five performance criteria were selected from [31] to evaluate the performance of the algorithms. These criteria are also used in [32] and are described as follows.

Error: The error of a solution ![]() is defined as

is defined as![]() , where

, where ![]() is the global optimum of the function. The error was recorded when the MNFE was reached and the average (mean) and standard deviation (std dev) of the 50 error values were calculated.

is the global optimum of the function. The error was recorded when the MNFE was reached and the average (mean) and standard deviation (std dev) of the 50 error values were calculated.

NFE: When the VTR was reached, the number of function evaluations (NFE) was recorded. If the VTR was not reached within the preset MNFE then the NFE is equal to MNFE. The average (mean) and standard deviation (std dev) of the 50 NFE values were calculated.

Number of successful runs (SR): The number of successful runs was recorded when the VTR was reached before the MNFE was satisfied.

Running time (time (s)): Running time indicates the average time over one function evaluation.

4.4. Comparison Results

In this section, we compare our approach with the classical QPSO of [26] , to demonstrate the superiority of BQPSO. The parameters used for the two algorithms are described in Section 4.2. The results were calculated using 50 independent runs. Table 1 shows the mean and standard deviation of the errors of BQPSO and QPSO on 8 benchmark functions. The mean and standard deviation of NFE are shown in Table 2.

From Table 1 and Table 2, we can see that BQPSO performs significantly better than QPSO for 8 functions. For![]() ,

, ![]() ,

, ![]() , BQPSO succeed in finding the minimum in all runs. For the other functions, BQPSO succeed much more often than QPSO. Furthermore, BQPSO obtains smaller mean and standard deviations than QPSO

, BQPSO succeed in finding the minimum in all runs. For the other functions, BQPSO succeed much more often than QPSO. Furthermore, BQPSO obtains smaller mean and standard deviations than QPSO

![]()

Table 1. Comparison of the mean and standard deviation of the error of BQPSO and QPSO on 8 benchmark functions.

![]()

Table 2. Comparison of the mean and standard deviation of the NFE of BQPSO and QPSO on 8 benchmark functions.

for 8 functions. Especially, for![]() ,

, ![]() ,

, ![]() ,

, ![]() ,

, ![]() , and

, and![]() , BQPSO succeeds many times while all runs of QPSO fail. In Table 1, we can see that there are significant differences in quality between the BQPSO and QPSO solutions of the high-dimensional functions.

, BQPSO succeeds many times while all runs of QPSO fail. In Table 1, we can see that there are significant differences in quality between the BQPSO and QPSO solutions of the high-dimensional functions.

In Table 2, the MNFE is fixed at 20000 for 8 functions. From this table it can be observed that, for all functions, BQPSO requires less NFE than QPSO. For some high-dimensional functions (such as![]() ,

, ![]() ,

, ![]() ,

, ![]() ,

, ![]() and

and![]() , QPSO fails to reach the VTR after 20,000 NFE while BQPSO is successful. It is worth noting that, from Table 1, the running time of BQPSO is about 10 to 20 times longer than that of QPSO. According to the no free lunch theorem, the superior performance of BQPSO is at the expense of a long running time.

, QPSO fails to reach the VTR after 20,000 NFE while BQPSO is successful. It is worth noting that, from Table 1, the running time of BQPSO is about 10 to 20 times longer than that of QPSO. According to the no free lunch theorem, the superior performance of BQPSO is at the expense of a long running time.

It can be concluded that the overall performance of BQPSO is better than that of QPSO for all 8 functions. The improvement based on quantum computing can accelerate the classical QPSO algorithm and significantly reduce the NFE to reach the VTR for all of the test functions.

4.5. The Comparison of BQPSO with Other Algorithms

In this subsection, we compare BQPSO with other state-of-art algorithms to demonstrate its accuracy and performance. These algorithms include a genetic algorithm with elitist strategy (called GA), a differential evolution algorithm (called DE), and a bee colony algorithm (called BC). The BQPSO’s control parameter was![]() . For the genetic algorithm, the crossover probability was

. For the genetic algorithm, the crossover probability was ![]() and the mutation probability was

and the mutation probability was![]() . For the differential evolution algorithm, the scaling factor was

. For the differential evolution algorithm, the scaling factor was![]() , and the crossover probability was

, and the crossover probability was![]() . For the bee colony algorithm, let

. For the bee colony algorithm, let ![]() denote the population size of the whole bee colony, and

denote the population size of the whole bee colony, and ![]() and

and ![]() denote the population size of the employed bee and onlooker bee, respectively.

denote the population size of the employed bee and onlooker bee, respectively.

We have taken![]() . The threshold of a tracking bee searching around a mining bee was

. The threshold of a tracking bee searching around a mining bee was![]() . The other parameters used for the four algorithms are the same as described in Section 4.2. The eight high-dimensional functions were used for these experiments, which had 50 independent runs. Table 3 shows the mean of these 50 errors and the number of successful runs. The mean and standard deviation of the NFE are shown in Table 4.

. The other parameters used for the four algorithms are the same as described in Section 4.2. The eight high-dimensional functions were used for these experiments, which had 50 independent runs. Table 3 shows the mean of these 50 errors and the number of successful runs. The mean and standard deviation of the NFE are shown in Table 4.

From Table 3 and Table 4 it can be argued that the BQPSO performed best among the four algorithms. It obtained the best results for all eight benchmark functions. The best algorithm is not as obvious for the remaining

![]()

Table 3. Comparison of the mean of the error and the number of successful runs of the four algorithms.

![]()

Table 4. Comparison of the mean of the error and the standard deviation of NFE of the four algorithms.

three algorithms. The DE algorithm performed well on average. It obtained the best results among the three algorithms for some benchmark functions, but it did not successfully optimize the functions f2, f4, and f7, because it got trapped in a local optimum. The BC achieved the best results among the three algorithms for the 30-dimensional functions f2, f4, and f7. The GA achieved the best results among three algorithms for the 20-dimensional functions f2 and f7. The DE achieved the best results among three algorithms for the 20-dimensional function f4. According to the experimental results, the algorithms can be ordered by optimizing performance from high to low as

BQPSO

,

DE

, BC, GA. This demonstrates the superiority of BQPSO.

These results can be easily explained as follows. First, In BQPSO, two parameters ![]() and

and ![]() of a qubit can be simultaneously adjusted by means of rotating the current qubit through an angle

of a qubit can be simultaneously adjusted by means of rotating the current qubit through an angle ![]() about the rotation axis. This rotation can automatically achieve the best matching of two adjustments. In other words, when the current qubit moves towards the target qubit, the path is the minor arc of the great circle on the Bloch sphere, which is clearly the shortest. Obviously, this rotation with the best matching of two adjustments has a higher optimization ability. Secondly, the three chains structure of the encoding particle also enhances the ergodicity of the solution space. These advantages are absent in the other three algorithms.

about the rotation axis. This rotation can automatically achieve the best matching of two adjustments. In other words, when the current qubit moves towards the target qubit, the path is the minor arc of the great circle on the Bloch sphere, which is clearly the shortest. Obviously, this rotation with the best matching of two adjustments has a higher optimization ability. Secondly, the three chains structure of the encoding particle also enhances the ergodicity of the solution space. These advantages are absent in the other three algorithms.

5. Conclusion

This paper presents an improved quantum-behaved particle swarm optimization algorithm. Unlike the classical QPSO, in our approach the particles are encoded by qubits described on the Bloch sphere. In this kind of coding method, each particle contains three groups of Bloch coordinates of qubits, and all three groups of coordinates are regarded as the approximate solutions describing the optimization result. As three solutions are synchronously updated in each optimization step (with the same swarm size as QPSO), our encoding method can extend the search range and accelerate the optimization process. In our approach, the particles are updated by rotating qubits through an angle about an axis on the Bloch sphere, and the rotation angles of qubits are computed according to the iteration equation of the classical QPSO. This kind of updating approach can simultaneously adjust two parameters of qubits, and can automatically achieve the best matching of two adjustments. The experimental results reveal that the proposed approach can enhance the optimization ability of the classical quantum-behaved particle swarm optimization algorithms, and for high dimensional optimization the enhancement effect is remarkable. In addition, our approach adapts quicker than the classical QPSO when the control parameter changes. Further research will focus on enhancing the computational efficiency of BQPSO without reducing the optimization performance.