1. Introduction

Learning is one of the high-level cognitive functions in the Consciousness And Memory model (CAM) [1]. Through learning, humans, animals and some machines acquire new knowledge, behaviors, skills, values, or preferences, or modify and reinforce existing ones. Learning produces changes in the system, and these changes are relatively permanent.

Human learning may occur as part of education, personal development, schooling, or training. It may be goal-oriented and may be aided by motivation. Psychologists define motivation as an internal process that activates, guides, and maintains behavior over time. Mook [2] briefly defined motivation as “the cause of action”. Maslow [3] proposed the hierarchy of needs, one of the unified motivation theories. Since it was introduced to the public, Maslow’s theory has had a significant impact on many aspects of people’s lives. Various attempts have been made to classify or synthesize the large body of research related to motivation. Green et al. [4] categorized motivation theories as physiological, behavioral or social. Mook [2] took the view that motivation theories are behaviorist, mediationist or biological. Merrick [5] argued that theories of motivation can be classified into four categories: biological theories, cognitive theories, social theories and combined motivation theories.

Motivation learning is based on a self-organizing system of emerging internal motivations and goal creation. The motivation learning mechanism creates higher-level motivations and sets goals based on dominating primitive and abstract event signals. It can be treated as a meta-learning technique in which a motivation process provides a learning algorithm with a motivation signal that focuses learning. The role of the motivation process is to use general, event-independent concepts to generate a motivation signal that stimulates the learning of event-oriented behaviors. Intrinsic motivations that trigger curiosity-based learning can be compared to the exploratory stage in reinforcement learning. In reinforcement learning, a machine occasionally explores the state-action space rather than performing the optimal action for maximizing its rewards [6]. However, without proper control of these explorations, a machine may fail to develop its abilities or may even develop destructive behavior [7]. Intrinsic motivations can also select actions that yield a maximum rate of reduction of the prediction error, which improves learning when the machine tries to improve one kind of activity.

Berlyne [8] defined curiosity as a form of motivation that promotes exploratory behavior to learn more about a source of uncertainty, such as a novel stimulus, with the goal of acquiring sufficient knowledge to reduce the uncertainty. Schmidhuber implemented curious agents using neural controllers and reinforcement learning, with intrinsic rewards generated in response to an agent improving its model of the world [9]. In fact, most curiosity is caused by novelty, so novelty detection is a useful technique for finding what arouses curiosity. Novelty detection is the identification of new or unknown data or signals that a learning system was not aware of during training [10] [11].

In CAM we use introspection learning to find novelty and interestingness. By monitoring the knowledge processing and reasoning methods of the intelligent system itself and identifying problems from failures or poor efficiency, introspection learning forms its own learning goals and then improves its problem-solving methods [12]. Introspective reasoning has a long history in artificial intelligence, psychology, and cognitive science [13].

In the early 1980s, introspective reasoning was implemented as planning within the meta-knowledge layer. SOAR [14] employed a form of introspective reasoning by learning meta-rules that described how to apply rules about domain tasks and acquire knowledge. SOAR’s meta-rules were created by chunking together existing rules, and learning was triggered by sub-optimal problem-solving results rather than failures. Birnbaum et al. proposed the use of self-models within case-based reasoning [15]. Cox and Ram proposed a set of general approaches to introspective reasoning and learning that automatically select the appropriate learning algorithms when reasoning failures arise [16]. They defined a taxonomy of causes of reasoning failures and a taxonomy of learning goals, used for analyzing the traces of reasoning failures and responding to them. Leake et al. pointed out that in introspective learning approaches, a system exploits explicit representations of its own organization and desired behavior to determine when, what, and how to learn in order to improve its own reasoning [17].

Attention is the behavioral and cognitive process of selectively concentrating on one aspect of the environment while ignoring other things. Eriksen et al. developed a spotlight model for selective attention [18]. The attention focus is an area that extracts information from the visual scene at high resolution; its geometric center is where visual attention is directed. In this paper, we adopt maximal interestingness as the spotlight that directs attention.

In this paper, a motivation learning algorithm is proposed and applied to cyborg rat maze search by agent simulation. The motivation learning algorithm differs from reinforcement learning and supervised learning in that it needs neither a global world model nor training examples.

The remainder of this paper is organized as follows. Section 2 outlines motivation processing. Section 3 describes the motivation learning algorithm. Section 4 applies the motivation learning algorithm to cyborg rat maze search by agent simulation. Finally, Section 5 draws conclusions and points out future work.

2. Motivation Processing

The Consciousness And Memory model (CAM) is a general framework for developing brain-like intelligent machines. The architecture of CAM is depicted in Figure 1. We propose the architecture to explore how the human mind works and to study hierarchical memories and consciousness. For consciousness, the primary focus in CAM is on global workspace theory, the motivation model, attention, and the executive control system of the mind. Consciousness is modeled by a finite state machine whose states correspond to human mental states.

Hierarchical memories contain working memory, short-term memory, and long-term memory. Working memory provides temporary storage and manipulation for language comprehension, reasoning, problem solving, reading, planning, learning and abstraction. The working memory involves four subcomponents: central executive, visuospatial sketch pad, phonological loop and episodic buffer. The central executive is the core in working memory. It drives and coordinates other subcomponents in working memory to accomplish cognitive tasks. The visuospatial sketch pad holds the visual information about what the cognitive system has seen. The phonological loop deals with the sound or phonological information. The episodic buffer stores the linking information across domains to form integrated units of visual, spatial, and verbal information with time sequencing, such as the memory of a story or a movie scene. The episodic buffer is also assumed to have links to long-term memory and semantic meaning [19] .

Short-term memory systems are associated with the process of encoding. In CAM, short-term memory stores the agent’s beliefs, goals and intentions, which change rapidly in response to environmental conditions and the agent’s agenda.

Long-term memory is considered to be relatively permanent. It is associated with the processes of storage and retrieval of information from memory. According to the type of stored content, long-term memory is divided into semantic, episodic and procedural memory. Semantic memory stores general facts, which are represented as an ontology. An ontology specifies a conceptualization of a domain in terms of the concepts, attributes, and relations in that domain. The concepts model entities of interest in the domain. They are typically organized into a taxonomy tree in which each node represents a concept and each concept is a specialization of its parent. Each concept in the taxonomy is associated with a set of instances. By the definition of the taxonomy, the instances of a concept are also instances of its ancestor concepts. Each concept is also associated with a set of attributes. In CAM, dynamic description logic (DDL) is used to describe the ontology.
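The taxonomy property described above (instances of a concept are also instances of its ancestors) can be illustrated with a minimal sketch. This is plain Python rather than DDL, and the class `Concept`, its methods, and the example concept and instance names are hypothetical, chosen only for illustration.

```python
class Concept:
    """A node in a taxonomy tree; each concept specializes its parent."""

    def __init__(self, name, parent=None, instances=()):
        self.name = name
        self.parent = parent
        self.children = []
        self.instances = set(instances)  # instances asserted directly on this concept
        if parent is not None:
            parent.children.append(self)

    def all_instances(self):
        """Direct instances plus the instances of every descendant concept."""
        found = set(self.instances)
        for child in self.children:
            found |= child.all_instances()
        return found

    def ancestors(self):
        """Names of the ancestor concepts, nearest first."""
        node, chain = self.parent, []
        while node is not None:
            chain.append(node.name)
            node = node.parent
        return chain


# Hypothetical three-level taxonomy.
animal = Concept("Animal")
rodent = Concept("Rodent", parent=animal, instances={"hamster_07"})
rat = Concept("Rat", parent=rodent, instances={"rat_01", "rat_02"})
```

Here `animal.all_instances()` collects the instances asserted on `Rodent` and `Rat`, which is the inheritance behavior the taxonomy definition implies.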

The cognitive cycle is a basic procedure of mental activity at the cognitive level. Human cognition consists of cascading cycles of recurring brain events. In the mind model CAM, we propose the cognitive cycle shown in Figure 2 [20]. The CAM cognitive cycle is depicted as three phases: perception, motivation, and action composition. The perception phase is the process of attaining awareness of the environment through sensory input. Using the incoming percept and the residual contents of working memory as cues, local associations are automatically retrieved from transient episodic memory and declarative memory. The motivation phase focuses on the learner’s beliefs, expectations, and needs for order and understanding. The action composition phase composes a group of actions through action selection and planning to reach the end goal.

The motivation processing is shown in Figure 3 and consists of seven modules: environment, internal context, motivation, motivation base, goal, action selection, and action composition [21]. Their main functions are explained as follows.

1) Environment provides the external information through sensory devices or other agents.

2) Internal context represents the homeostatic internal state of the agent and evolves according to the effects of actions.

3) Motivation is an abstraction corresponding to a tendency to behave in particular ways according to environmental information. Motivations set goals for the agent in order to satisfy the internal context.

4) Motivation base contains a set of motivations and motivation knowledge in a defined format.

5) Goal is a desired result for a person or an organization. It is used to define the sequence of actions needed to reach that result.

6) Action selection is used to perform a motivated action that can satisfy one or several motivations.

7) Action composition is the process of constructing a complex composite action from atomic actions to achieve a specific task.

The action composition is composed of overlapping hierarchical decision loops running in parallel. The number of motivations is not limited. Action composition of the most activated node is not carried out at each cycle, as in a classical hierarchy, but only at the end in the action layer, as in a free flow hierarchy. In the end, the selected action is activated.
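The contrast between a classical hierarchy and a free flow hierarchy can be sketched as follows. This is a minimal two-layer illustration under stated assumptions, not CAM's implementation: the function names, motivation names, action names, and weights are all hypothetical. In the free flow variant, every motivation passes its activation down to the action layer, and a winner is chosen only there.

```python
def free_flow_select(motivations, weights):
    """Sum the weighted activation from all motivations; decide only at the action layer."""
    totals = {}
    for name, strength in motivations.items():
        for action, weight in weights.get(name, {}).items():
            totals[action] = totals.get(action, 0.0) + strength * weight
    return max(totals, key=totals.get)


def winner_take_all_select(motivations, weights):
    """Classical hierarchy: pick the strongest motivation first, then its best action."""
    top = max(motivations, key=motivations.get)
    options = weights[top]
    return max(options, key=options.get)


# Hypothetical activations and motivation-to-action weights.
motivations = {"hunger": 0.6, "thirst": 0.5}
weights = {
    "hunger": {"go_food": 1.0, "go_oasis": 0.7},
    "thirst": {"go_water": 1.0, "go_oasis": 0.7},
}
```

With these values the free flow selection prefers the compromise action `go_oasis`, which serves both motivations, whereas the winner-take-all selection commits to `hunger` early and returns `go_food`.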

3. Motivation Learning

In CAM, motivation learning needs a mechanism for creating abstract motivations and related goals. Once implemented, such a mechanism manages motivations and selects and supervises the execution of goals. Motivations emerge from interaction with the environment, and at any given stage of development their operation is influenced by competing event signals and attention-switching signals.

The learning process for motivations first obtains sensed states by observation; events then transform one sensed state into another. Finding novelty that stimulates an agent’s interestingness plays an important role. Once interestingness is stimulated, the agent’s attention can be selected and focused on one aspect of the environment. It is therefore necessary to define observations, events, novelty, interestingness and attention before describing the motivation learning algorithm.

3.1. Observations

An observation is essentially a combination of sensations from the sensed state. For an agent to function efficiently in complex environments, it may be necessary to select only a subset of these combinations as observations.

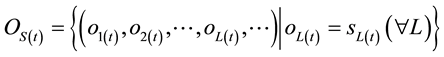

An observation function maps the sensed state to a set of observations.

Definition 1: Observation Functions

Observation functions define the combinations of sensations from the sensed state that will motivate further reasoning. Observations containing fewer sensations affect an agent’s attention focus by making it possible for the agent to restrict its attention to a subset of the state space. In a typical observation function, each observation focuses on every element of the sensed state at a given time.

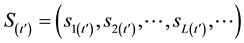

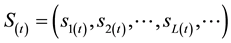
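As an illustration of Definition 1, the sketch below represents a sensed state as a mapping from sensation labels to values and returns observations over it. The encoding, the name `observe`, and the optional `focus` argument are assumptions made for this example only.

```python
from typing import Dict, List, Optional, Sequence

SensedState = Dict[str, float]  # sensation label -> value (assumed encoding)
Observation = Dict[str, float]


def observe(state: SensedState, focus: Optional[Sequence[str]] = None) -> List[Observation]:
    """Return the observations for a sensed state.

    With no focus, the single observation covers every element of the sensed
    state (the typical case). With a focus, attention is restricted to a
    subset of the sensations.
    """
    if focus is None:
        return [dict(state)]
    return [{label: state[label] for label in focus if label in state}]
```

For example, `observe({"light": 0.8, "sound": 0.1}, focus=["light"])` keeps only the light sensation, which restricts later reasoning to a smaller part of the state space.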

3.2. Events

Events are introduced to model the transitions between sensed states. Events are computed using difference functions and event functions that control the level of attention focus on the transition. Events are represented in terms of the difference between two sensed states. The difference between two sensed states, expressed as a vector of difference variables, is calculated using a difference function, which is defined as follows.

Definition 2: Difference Function

A difference function assigns a value to the difference between two sensations in two sensed states as follows:

![]()

The difference function provides information about the change between successive sensations and calculates the magnitude of that change.
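One possible difference function can be sketched as below. The case analysis (a sensation that appears, disappears, or changes value) is an assumption made for illustration and is not necessarily the definition used in CAM; the names `difference` and `difference_vector` are hypothetical.

```python
from typing import Dict, Optional


def difference(old: Optional[float], new: Optional[float]) -> float:
    """Value assigned to the change in one sensation between two sensed states."""
    if old is None and new is None:
        return 0.0
    if old is None:       # sensation newly appeared
        return new
    if new is None:       # sensation disappeared
        return -old
    return new - old      # sensation present in both states


def difference_vector(prev: Dict[str, float], curr: Dict[str, float]) -> Dict[str, float]:
    """Vector of difference variables, one per sensation label seen in either state."""
    labels = sorted(set(prev) | set(curr))
    return {label: difference(prev.get(label), curr.get(label)) for label in labels}
```

An unchanged sensation yields a zero difference variable, so only sensations that actually changed can contribute to events.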

Definition 3: Event Function

Event functions define which combinations of difference variables an agent recognizes as events, each of which contains only one non-zero difference variable. An event function can be defined by the following formula:

![]()

where,

![]()

Events may be of varying length or even empty, depending on the number of sensations that change.
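A minimal sketch of an event function consistent with Definition 3 is given below: each recognized event keeps exactly one non-zero difference variable and zeroes the rest. The dictionary encoding of the difference vector and the name `event_function` are assumptions for illustration.

```python
from typing import Dict, List


def event_function(diff: Dict[str, float]) -> List[Dict[str, float]]:
    """Split a difference vector into events with one non-zero difference variable each."""
    events = []
    for label, value in diff.items():
        if value != 0.0:
            events.append({k: (v if k == label else 0.0) for k, v in diff.items()})
    return events
```

If no sensation changed, the returned list is empty, matching the remark that the set of events can be empty.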

3.3. Novelty

Detecting novel events is an important ability of any signal classification scheme. Because a machine learning system can never be trained on all the object classes whose data it is likely to encounter, it becomes important to differentiate between known and unknown object information during testing. Several studies have found in practice that novelty detection is an extremely challenging task. Novelty is a useful motivator in some environments. In complex, dynamic environments, random observations or events can often arise from either the environment dynamics or sensor noise. Saunders and Gero [22] developed computational models of curiosity and interest based on novelty. They used sigmoid functions to represent positive reward for the discovery of novel stimuli and negative reward for the discovery of highly novel stimuli. The resulting computational models of novelty and interest are used in a range of applications.

Novelty detection identifies new or unknown data or signals that a machine learning system was not aware of during training [11]. It is one of the fundamental requirements of a good classification or identification system, since the test data sometimes contain information about objects that were not known when the model was trained. Berlyne [8] distinguished perceptual novelty, which relates to perceptions, from epistemic novelty, which relates to knowledge. Given a fixed set of training samples from a fixed number of categories, novelty detection is a binary decision task: for each test sample, determine whether it belongs to one of the known categories or not.

Novelty detection determines the novelty of a situation. Novelty detection is considered a meta-level conceptual process because the concept of novelty is based upon the concepts built by other conceptual processes that categorize the situation.

Definition 4: Novelty Detection Function

The novelty detection function takes the conceptual state of the agent and compares it with memories of previous experiences, constructed by long-term memory, to produce a novelty state:

![]()

Novelty can be detected by introspective search comparing the current conceptual state of an agent with memories of previous experiences.
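One simple way to realize such a comparison is a nearest-neighbor search over stored experiences. The sketch below is an assumption for illustration, not the novelty detection function of CAM: conceptual states are taken to be numeric vectors, memory is a list of such vectors, and the distance is squashed into the range [0, 1).

```python
import math
from typing import List, Sequence


def novelty(state: Sequence[float], memory: List[Sequence[float]]) -> float:
    """Novelty of a conceptual state relative to remembered experiences.

    Returns 1.0 when nothing has been experienced yet, 0.0 for an exact match
    with a stored memory, and values in between otherwise.
    """
    if not memory:
        return 1.0
    nearest = min(math.dist(state, m) for m in memory)
    return 1.0 - math.exp(-nearest)
```

A state far from every stored experience scores close to 1, while a state that repeats a stored experience scores 0.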

3.4. Interestingness

Interestingness is defined as novelty and surprise. It depends on the observer’s current knowledge and computational abilities. Interestingness can be either objective or subjective: objective interestingness uses relationships found entirely within the object considered interesting, while subjective interestingness compares properties of the object with beliefs of a user to determine interest. The interestingness of a situation is a measure of the importance of the situation with respect to an agent’s existing knowledge; interesting situations are neither too similar nor too different from ones previously experienced.

Definition 5: Interestingness Function

The interestingness function determines a value for the interestingness of a situation, based on the novelty detected:

![]()
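The statement that interesting situations are neither too similar to nor too different from previous ones can be sketched with the sigmoid-based reward and punishment described for Saunders and Gero's models. The functional form below (reward sigmoid minus punishment sigmoid) and every parameter value are assumptions chosen for illustration, with novelty taken to lie in [0, 1].

```python
import math


def sigmoid(x: float, midpoint: float, slope: float) -> float:
    """Logistic function centered on `midpoint`."""
    return 1.0 / (1.0 + math.exp(-slope * (x - midpoint)))


def interestingness(n: float, reward_mid: float = 0.3,
                    punish_mid: float = 0.7, slope: float = 15.0) -> float:
    """Positive reward for novelty minus negative reward for very high novelty."""
    reward = sigmoid(n, reward_mid, slope)
    punishment = sigmoid(n, punish_mid, slope)
    return reward - punishment
```

With these assumed parameters, interestingness peaks for moderate novelty and falls off toward both the familiar and the highly novel ends.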

3.5. Attention

Attention is the behavioral and cognitive process of selectively concentrating on one aspect of the environment while ignoring other things. Once the interestingness function has been evaluated, particular events can be selected. There are two selection strategies: the threshold selection mechanism (TSM) and the proportion selection mechanism (PSM) [23].

TSM is a threshold filtering algorithm. Assume that we have a threshold. If the interestingness value of an event is larger than the threshold, the event is chosen to build a motivation; if the value is smaller than the threshold, the event is omitted.

PSM is a bottleneck filtering algorithm. The number of input stimuli that the brain can process is restricted to a maximum.
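The two selection strategies can be sketched as simple filters over scored events. The sketch assumes each event arrives paired with its interestingness value, and it reads the PSM bottleneck as keeping at most `capacity` of the most interesting events; both the encoding and that reading are assumptions, and the function names are hypothetical.

```python
from typing import List, Tuple

ScoredEvent = Tuple[str, float]  # (event label, interestingness value)


def threshold_select(scored: List[ScoredEvent], threshold: float) -> List[str]:
    """TSM: keep events whose interestingness exceeds the threshold."""
    return [event for event, value in scored if value > threshold]


def proportion_select(scored: List[ScoredEvent], capacity: int) -> List[str]:
    """PSM (assumed reading): keep at most `capacity` events, most interesting first."""
    ranked = sorted(scored, key=lambda pair: pair[1], reverse=True)
    return [event for event, _ in ranked[:capacity]]
```

TSM can return any number of events, including none, while PSM always bounds how many events reach the motivation-building stage.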

Definition 6: Attention Selection

Attention is a complex cognitive function that is essential for human behavior. Attention is a selection process for an external event (sound, image, smell...) or an internal event (thoughts) that has to be maintained at a certain level of awareness. Selective, or focused, attention selects the information that should be processed with priority, according to its relevance in the situation at hand or the needs in a given context. Selective attention makes it possible to focus on an item while mentally identifying and distinguishing non-relevant information. In CAM we adopt the maximal interestingness strategy to select the attention focus and create a motivation.

3.6. Motivation Learning Algorithm

Motivation learning creates internal representations of observed sensory inputs and links them to learned actions that are useful for the machine’s operation. If the result of the machine’s action is not relevant to its current goal, no intentional learning takes place. This screening of what to learn is very useful, since it protects the machine’s memory from storing unimportant observations, even though they are not predictable by the machine and may be of sufficient interest for novelty-based learning. Novelty-based learning can still take place in such a system when the system is not triggered by other motivations. However, it plays a secondary role to goal-oriented learning.

The following describes the basic steps of the novelty-based motivation learning and goal creation algorithm in CAM.

Motivation learning algorithm

1) Observe the set of observations from the sensed state using the observation function

2) Subtract successive sensed states using the difference function

3) Compose events using the event function

4) Look for novelty using introspective search

5) Repeat (for each ![]())

6) Repeat (for each ![]())

7) ![]()

8) Create a Motivation by Attention.
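The steps above can be sketched end to end as a single function. This is a hedged illustration, not the CAM algorithm itself: the loop over events, the per-label memory of past changes, the exponential novelty measure, the sigmoid-based interestingness, and all parameter values are assumptions, and `motivation_step` is a hypothetical name.

```python
import math
from typing import Dict, List, Optional, Tuple


def _sigmoid(x: float, midpoint: float, slope: float = 15.0) -> float:
    return 1.0 / (1.0 + math.exp(-slope * (x - midpoint)))


def motivation_step(prev_state: Dict[str, float], curr_state: Dict[str, float],
                    memory: Dict[str, List[float]]) -> Optional[Tuple[str, float]]:
    """One pass of the sketch; returns the event selected to create a motivation."""
    # 1) Observe: one observation covering the whole sensed state.
    observation = dict(curr_state)
    # 2) Subtract: difference variable for every sensation label.
    labels = sorted(set(prev_state) | set(observation))
    diff = {k: observation.get(k, 0.0) - prev_state.get(k, 0.0) for k in labels}
    # 3) Compose: one event per non-zero difference variable.
    events = [(k, v) for k, v in diff.items() if v != 0.0]
    # 4)-7) Assumed: for each event, search memory for novelty and score interest.
    scored = []
    for label, delta in events:
        seen = memory.get(label, [])
        nearest = min((abs(delta - d) for d in seen), default=None)
        nov = 1.0 if nearest is None else 1.0 - math.exp(-nearest)
        interest = _sigmoid(nov, 0.3) - _sigmoid(nov, 0.7)
        scored.append(((label, delta), interest))
        memory.setdefault(label, []).append(delta)  # remember this experience
    # 8) Attention: the maximally interesting event creates the motivation.
    if not scored:
        return None
    return max(scored, key=lambda pair: pair[1])[0]


memory = {"light": [1.0]}
chosen = motivation_step({"light": 0.0, "sound": 0.0},
                         {"light": 1.5, "sound": 2.0}, memory)
```

In this run the change in `light` is moderately novel relative to memory and is selected, while the never-seen change in `sound` is maximally novel and therefore scores low under the assumed interestingness curve.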

The algorithm involves the collaboration of CAM processing within the central executive, the reward and consciousness processing blocks, and episodic and semantic memory, together with interaction with sensory and motor pathways. The central executive block in working memory is spread across several functional units without clear boundaries or a single triggering mechanism. It is responsible for the coordination and selective control of other units, and it interacts with them to perform its tasks. Its tasks include cognitive perception, attention, motivation, goal creation and goal selection, thoughts, planning, learning, supervision and motor control. The central executive directs the cognitive aspects of machine control and learning experiences, but its operation is influenced by competing signals representing motivations, desires, and attention switching that come from short-term memory. In CAM, short-term memory resembles a BDI (belief-desire-intention) structure with memory and reasoning functions. Semantic memory stores previous experiences represented as an ontology. Introspective search performs novelty detection to discover novel events.

4. Application for Cyborg Rat Maze Search

One outstanding feature of a motivational system is that it can raise the level of automation and improve system quality. Here we apply the motivation system to cyborg rat maze search.

Exploiting the rat’s sensitivity to ultraviolet (UV) flashes, the hybrid perception of the robot is extracted directly from neural signals in the rat visual cortex. The rat’s own visual perception is decoded and integrated with machine visual recognition and machine intelligence, and the result can ultimately be used for autonomous navigation of the rat robot.

To obtain the neural information related to flash visual stimuli, we select the primary visual cortex, the part of the rat visual pathway richest in visual information, as the experimental brain region. Recording electrodes with 16 filament channels are embedded in the rat visual cortex, and the neural signals are acquired with professional equipment. Freely moving rats with recording electrodes are placed in a stimulus tank equipped with a flash, and their neural signals are recorded. The experimental environment is set to one of two conditions, dark or bright, and high and low stimulation levels are selected from multiple stimulus sources. It was found that flash stimulation with particular frequency characteristics elicits specific firing in rat brain neurons; the group established a multi-channel, spectrum-related feature and decoding model to decode the neural signals of UV flashes with a specific resolution.

In the maze search task, the machine delivers maze cues and alert information to the robot rat via electrical stimulation, and the machine in turn becomes aware of information in the biological brain, so that decisions can be made for the maze search. Figure 4 shows a maze pattern.

We define three kinds of actions for maze search: go ahead, turn left, and turn right, which correspond to high-frequency, middle-frequency and low-frequency UV flashes, respectively. When the cyborg rat receives a high-frequency UV flash, it sends a signal to the agent, and the agent creates a motivation that causes the cyborg rat to go ahead.

We have developed a multi-agent simulation platform, MAGER. One agent plays the role of the cyborg rat and receives UV flashes from outside. After receiving a UV flash signal, the agent performs motivation learning to decide the direction of movement.
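The mapping from flash frequency to maze actions can be sketched as a small grid simulation. This is not the MAGER platform: the band labels, the grid coordinates, the heading encoding, and the functions `step` and `run` are assumptions for illustration, and wall checking is omitted.

```python
from enum import Enum


class Action(Enum):
    GO_AHEAD = "go ahead"
    TURN_LEFT = "turn left"
    TURN_RIGHT = "turn right"


# Frequency band of the UV flash -> action, as described in the text.
FLASH_TO_ACTION = {
    "high": Action.GO_AHEAD,
    "middle": Action.TURN_LEFT,
    "low": Action.TURN_RIGHT,
}

# Headings in clockwise order: north, east, south, west (assumed grid convention).
HEADINGS = [(0, 1), (1, 0), (0, -1), (-1, 0)]


def step(position, heading, band):
    """Apply the action motivated by one flash; returns (position, heading)."""
    action = FLASH_TO_ACTION[band]
    if action is Action.GO_AHEAD:
        dx, dy = HEADINGS[heading]
        return (position[0] + dx, position[1] + dy), heading
    if action is Action.TURN_LEFT:
        return position, (heading - 1) % 4
    return position, (heading + 1) % 4


def run(bands, position=(0, 0), heading=0):
    """Follow a sequence of flash bands from a start cell facing north."""
    for band in bands:
        position, heading = step(position, heading, band)
    return position, heading
```

For instance, `run(["high", "low", "high"])` moves one cell north, turns right to face east, and moves one cell east.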

5. Conclusions

Motivation learning creates abstract motivations and related goals. It is one of the high-level cognitive functions in CAM. This paper presents a new motivation learning algorithm that allows an agent to create motivations or goals based on an introspective process. The simulation of cyborg rat maze search shows that the motivation learning algorithm can adapt an agent’s behavior in response to a dynamic environment.

Motivation learning is an important topic for collaborative work on brain-machine integration. We will continue research on meta-cognition for the introspective process to improve the efficiency of the motivation learning algorithm.

Acknowledgements

This work is supported by the National Program on Key Basic Research Project (973) (No. 2013CB329502), National Natural Science Foundation of China (No. 61035003, 61202212), National Science and Technology Support Program (2012BA107B02).

NOTES

*Corresponding author.