1. Introduction

SVM (Support Vector Machine) [1] is a supervised learning algorithm commonly used for classification tasks and has been successfully applied to many technological fields, such as text categorization [2] , financial forecast [3] , image classification [4] and so on. This paper focuses on a binary classification problem. Given training samples

, where

,

, the objective of SVM is to identify an optimal separating hyperplane to separate data points into two classes. Scholars have proposed some classic SVM models based on convex loss functions, such as the hinge loss (also called L1 loss) in classic SVM [5] , the least square loss in LSSVM [6] and the huberized pinball loss in HPSVM [7] . However, in practice, the real dataset often contain noise. Since convex loss functions are generally unbounded, convex losses are highly sensitive to outliers and potentially influenced by outliers. Therefore, some nonconvex loss functions are proposed to improve robustness compared with the convex loss functions [8] . For example, [9] proposed the ramp loss based on hinge loss, the truncated pinball loss was proposed by [10] . Recently, a noise insensitive and robust support vector machine classifier with huberied truncated pinball loss (HTPSVM) was proposed in [11] , this loss is smooth and nonconvex loss function. The HTPSVM can be transformed into format of “Loss + Penalty”, in which the penalty is a hybrid of

norm and

norm penalty.

Here, the HTPSVM model and algorithm of literature [11] are briefly introduced. Consider a classification problem with training samples

. The HTPSVM seeks to solve the following regularization problem:

(1)

the huberied truncated pinball loss

function is defines as

(2)

which is a nonconvex and smooth function. The HTPSVM combine the benefits of both

and

norm regularizers and and it has been demonstrated in [11] that it can reduce the effects of noise in the training sample. Therefore, we consider that studying the HTPSVM model is meaningful. The APG algorithm was used to solve the model in [11] . [12] applied the APGs method (first proposed in [13] ) to solve problem (1) and obtain better convergence behavior. However we find that the proximal operator for computing the

norm causes the subproblem to be solved slowly in APG and APGs algorithms, we attempt to accelerate the solution process for this model. Recently, [14] propose the Bregman APGs (BAPGs) method, which avoids the restrictive global Lipschitz gradient continuity assumption. In this paper, we improve BAPGs algorithm to solve the problem (1) and replace the Lipschitz constant by an appropriate positive definite matrix and obtain better results after we perform numerical experiments on 10 datasets to test our method.

The rest of this paper is organized as follows. In the next section, we provide preliminary materials used in this work. In Section 3, we introduce the BAPGs algorithm proposed by [14] and present our algorithm based on the BAPGs method for solving the HTPSVM model (1). The convergence of our method is also discussed. Section 4 performs some experiments.

2. Preliminaries

In this paper, we let

denote the set of real numbers. We work in the Euclidean space

, and the standard Euclidean inner product and the induced norm on

are denoted by

and

. The domain of the function

is defined by

. We say that f is proper if

. A proper function f is said to be closed if it is lower semicontinuous at any

, i.e.

.

Definition 1. [ [15] , Definition 8.3] For a proper closed function f, the regular subdifferential of

at

is defined by

(3)

The (general) subdifferential of f at

is defined

(4)

where

means both

and

. Note that if f is also convex, then the general subdifferential and regular subdifferential of f at

reduce to the classical subdifferential [ [15] , Proposition 8.12], that is

(5)

Definition 2. (Kernel Generating Distances and Bregman Distances [16] [17] [18] ) Let C be a nonempty, convex and open subset of

. Associated with C, a function

is called a kernel generating distance if it satisfies the following:

1)

is proper, lower semicontinuous and convex, with

and

;

2)

is continuously differentiable on

.

We denote the class of kernel generating distances by

. Given

, the Bregman distance

is defined by

For exmple, when

, then

. If

, then

. In this article, the gradient Lipschitz continuity condition of the function f is no longer required, instead it is replaced by the L-smooth adaptive function of pair

. The definition of L-smooth adaptable as follows.

Definition 3. A pair of functions

,

,

is a proper and lower semicontinuous function with

and f is continuously differentiable on

, is called L-smooth adaptable (L-smad) on C if there exists

such that

and

are convex on C.

Lemma 1. (Full Extended Descent Lemma [19] ) A pair of functions

is L-smad on

if and only if:

,

.

Definition 4.

is called μ-relative weakly convex to

on C if there exists

such that

is convex on C [14] .

3. The Modified BAPGs Method for HTPSVM

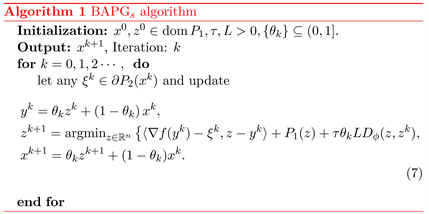

In this section, we first describe the BAPGs method proposed in [14] , then the modified BAPGs method with adaptive parameter is given for HTPSVM.

3.1. BAPGs Method

Consider the following optimization problem:

(6)

where f is a μ-relative weakly convex continuously differentiable function, P1 is a proper, lower semicontinuous convex function and P2 is continuous and convex. Besides, F is level-bounded i.e., for every

, the set

is bounded; F is bounded below i.e.,

. The iterative scheme of BAPGs [14] for solving probelm (6) is shown in Algorithm 1, where

is a Bregman distance defined in Section 2.

We see that when

, BAPGs reduces to APGs in [13] . [14] proved the global convergence of the iterates generated by BAPGs to a limiting critical point under some assumptions.

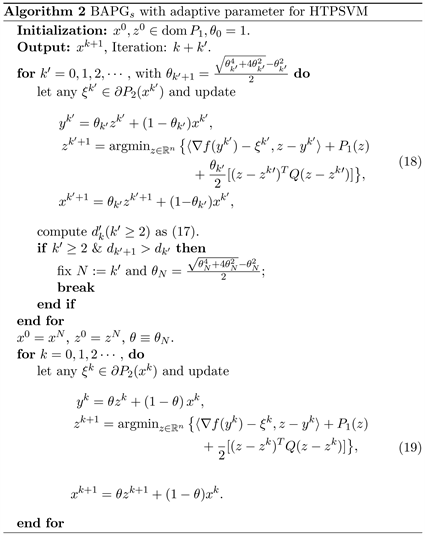

3.2. Adaptive BAPGs Method for HTPSVM

By writing the nonconvex loss

as the difference of three smooth convex functions, the problem (1) can be expressed as following from [12]

(8)

where

,

is the regularization parameter; for

,

, and the smooth convex functions

are defined as

(9)

(10)

(11)

Then we can apply the BAPGs to solve problem (8) in the form of (6)

•

:

(nonconvex),

(convex);

•

:

(nonconvex),

(convex).

Next, We will briefly illistrate that the problem (8) can be solved by the BAPGs [14] .

Theorem 1. Let f as defined in

and

. Set

, where

,

. Then, the pair

is L-smooth adaptable on

with

.

Proof. Firstly, for

, since

(12)

and

(13)

are monotonically increasing, it is easy to verify that

and

are convex. Then we can easily get the convexity of

(14)

and

(15)

the proof is similar for

. It is clear that

is 1-smooth adaptable on

, this further implies that there exists

such that

is convex.

We can see that the problem (8) satisfies the conditions required in [14] with

for the pair

, where Q defined as Theorem 1. Therefore the BAPGs method (Algorithm 1), here we let

and replace (7) with the following steps, can be used for solving (8)

(16)

The selection of parameter

in [14] as: for fixed positive integer N, let

,

and

for all

. It is to see that the value of the positive integer N is difficult to determine. Combining with the adaptive parameter selection criterion proposed in [12] : let

,

for

and compute

(17)

when

, where

and

(the assumption of sequence

given in [14, Assumption 2]). Let N be the first k satisfying

. The BAPGs algorithm with adaptive parameter for problem (8) (HTPSVM) is shown in Algorithm 2.

4. Numerical Results

In this section, we aim to show the performance of Algorithm 2 for solving problem (1) by using MATLAB R2020b on a 64-bit PC with an Intel(R) Core(TM) i7-10870H CPU (2.20GHz) and 16GB of RAM.

First, consider the optimality condition (19) of Algorithm 2

Due to there is no explicit solution for this subproblem, we try to instead the

norm by linear approximation, that is,

, where

(here we take

), then we construct a new iteration step to replace the subproblem in Algorithm 2 as

(20)

it is easy to calculate its solution:

which means

Then the update (18) and (19) are replaced by

(21)

in experiments, where

and

. The experiments are conducted on several real world datasets. We select 10 datasets from UCI [20] , to compare the Algorithm 2 with APG (method in [11] ), APGs [12] and GIST [21] , where in GIST, we set

with

. The corresponding parameters of these methods are set the same as in [12] . For each dataset, The 21 initial points are used commonly for all methods: one zero vector, and 5 vectors selected independently from N (0, σ2I) for each

. All algorithms stop if

or the number of iterations hits 3000. The average results are given in Table 1 and Table 2, including the number of iterations (iter), objective function value (fval) and CPU time in seconds (CPU) at termination with

and

, where BAPGs-

and APGs-

represent using BAPGs (algorithm 2) and APGs [12] for

respectively (

described in section 3.2).

![]()

Table 1. Comparison on 10 datasets with

.

![]()

Table 2. Comparison on 10 datasets with

.

From the above tables, we see that Algorithm 2 for

always obtain the smaller function values and converge faster than others, this means that Algorithm 2 for solving HTPSVM model (1) performs well.

5. Conclusions and Suggestions

In this paper, based on the BAPGs method proposed by [14] , we construct the modified BAPGs with the adaptive parameter selection technique introduced in [12] for solving the HTPSVM model. The linear approximation method is used to improve the subproblem in algorithm and a function

with a suitable matrix Q is set to obtain the L-smad property. Finally, numerical experiments show that our algorithm convergence faster.