Sectional Dimensions Identification of Metal Profile by Image Processing

1. Introduction

Producing quality products is one of the most important goals in every manufacturing sector. Depending on the material used, the production environment, the systems controlling production, and the human factors involved, situations may occur in which the desired quality cannot be achieved. Although the main objective is to prevent such situations, it is equally important to detect them early when they do occur.

Studies in all of these areas are carried out in the iron and steel industry, as in other sectors. To prevent deviations at the production stage, mathematical models or artificial intelligence techniques are used to determine production parameters correctly. Povorov and Semenov sought to build a mathematical model for predicting material shape in profile rolling [1]. Ma et al. analyzed the effect of the hot-rolled sheet profile on cold rolling and developed an artificial neural network (ANN) model [2].

In addition to obtaining the models that best describe the process, studies have also been carried out to identify defects in products. Detecting surface defects, measuring surface roughness and dimensions, and identifying the shape of the product are some of the approaches used to determine quality-related problems. Image processing-based systems are used especially often in such studies.

In profile or pipe production, dimensions are mostly measured manually by operators. While it is easy for operators to measure profiles produced by cold rolling, they cannot measure hot-rolled profiles because of the high temperature. For this reason, automatic measurement systems in hot-rolling production environments can improve production quality. Real-time measurement makes it possible to detect problems that arise during production as early as possible.

Kawasaki Steel developed a measuring system for the recognition of profile sections using two laser light sources [3]. TBK Automatisierung und Messtechnik GmbH has also developed a system that makes measurements using laser light sources (TBK Automatisierung und Messtechnik). No detailed information about this system could be obtained.

In dimensioning, extracting information from an image is as important as capturing it. Wang et al. estimated dimensions by taking images with a thermal camera and detecting the edges [4]. This method is more costly than other measurement systems. Other studies address the processing and interpretation of captured images. In the project “Plate Localization Using an Alternative Morphological Method”, the parallelism of two lines found by the Hough transform was used to flatten the perspective image, starting from lines known to belong to a rectangle [5]. In a similar project, image smoothing was applied using an affine transform [6]. Lin developed the “Top-View Transformation Model”, which uses the camera’s mounting height and perspective view to help vehicles park comfortably [7]. Ågren used the Hough transform to detect the lines on the road in an algorithm for finding the position of a car on the road [8]. To the authors’ knowledge, no study in the literature addresses the identification of metal profile shapes.

In this study, the aim is to obtain images using four laser light sources and to derive the dimensions of a profile from those images. Using a laser light source and an ordinary camera is a much cheaper solution than a thermal camera application. Since we did not have the opportunity to test hot-rolled profiles in real time, sample pieces cut from produced profiles were used. Profile sizing was done by processing the images obtained in the prototype environment introduced here. We use morphological operations similar to those used by others in the literature to process the images. Conversion of the perspective view is also considered in our study, since the images are gathered from cameras mounted at different heights.

2. Material and Method

The model to be developed involves several steps, which are shown in Figure 1.

2.1. Simulation Platform

Cameras and laser light sources were placed at the four corners of a table with a 200 × 200 mm measurement area, 75 cm from the center of the table. The laser light sources were located 15 mm above the table surface, and the plane of the laser light was used as the measuring plane.

The aim is to determine the edges of the rectangular profile placed at the center of the simulation environment from images taken by the cameras at the four corners around the profile (Figure 2). The dimensions are then determined from these edges.

Perspective photographs were taken from all four sides of the profile. The red laser line in each image was detected and thinned. The processed image was converted from the perspective view to the vertical (top) view. Lines and shapes were identified with the Hough transform. For scaling, a calibration procedure was created, and reference points and calibration coefficients for measurement were determined. The cross-sectional dimensions of the profile were calculated using these reference points and calibration coefficients.

For the measurement, a calibration method was first established. A 200 × 200 mm table is used for calibration. To detect the edges, red line laser light is projected onto the profile from the four corners, parallel to the table. To find the projection of the table in the plane of the laser light, a 45 × 45 mm profile was placed at each corner of the table in turn, and images were taken with the cameras. In this way, the size of the table in the plane of the laser light is determined. A total of 16 images were taken: one from each of the 4 cameras for each corner position on the measuring table.

The white pixels in each image are found by applying laser light detection, binary conversion, and morphological refinement, in that order. Each edge pixel in the image is then assigned its x and y coordinates in the new image.
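The detection and binary conversion steps described above can be sketched as follows. This is a minimal illustration and not the authors' implementation: the function names, the red-dominance rule, and the threshold values `min_red` and `min_margin` are assumptions made for the example, and the image is represented as nested lists of (R, G, B) tuples rather than a camera frame.

```python
def red_laser_mask(image, min_red=200, min_margin=60):
    """Return a binary mask (1 = laser pixel) for an RGB image.

    image is a list of rows, each row a list of (r, g, b) tuples.
    A pixel counts as laser light when its red channel is bright and
    clearly dominates green and blue (assumed rule, thresholds are guesses).
    """
    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:
            is_laser = r >= min_red and r - max(g, b) >= min_margin
            mask_row.append(1 if is_laser else 0)
        mask.append(mask_row)
    return mask


def white_pixel_coordinates(mask):
    """List the (x, y) positions of white pixels, with x = column, y = row."""
    return [(x, y)
            for y, row in enumerate(mask)
            for x, value in enumerate(row)
            if value]


# Tiny synthetic image: one red laser row between dark rows,
# plus a bright grey pixel that must not be mistaken for laser light.
DARK, RED, GREY = (20, 20, 20), (250, 40, 30), (210, 205, 200)
demo_image = [
    [DARK, DARK, DARK, DARK],
    [RED, RED, RED, GREY],
    [DARK, DARK, DARK, DARK],
]
demo_mask = red_laser_mask(demo_image)
print(white_pixel_coordinates(demo_mask))
```

In practice the thresholds would have to be tuned to the laser wavelength, the camera, and the ambient lighting.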

The new image in the vertical coordinate plane is rotated by 90° and the same process is applied to the image from the next camera; this rotation is repeated after each camera has been processed. In this way, the parallel and perpendicular edges in the image are obtained in the vertical coordinate plane. Finally, the profile size is determined by calculating the distances between the parallel edges.
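One way to picture the repeated 90° rotation is as a rotation of the edge coordinates of each camera about the table center before they are merged. The sketch below assumes coordinates in millimetres, a table center at (100, 100) for the 200 × 200 mm table, counter-clockwise rotation, and cameras indexed 0 to 3 in rotation order. None of these conventions are stated in the text, and the function names are hypothetical.

```python
def rotate_quarter_turns(points, quarter_turns, center=(100.0, 100.0)):
    """Rotate (x, y) points about center by quarter_turns * 90 degrees."""
    cx, cy = center
    rotated = []
    for x, y in points:
        dx, dy = x - cx, y - cy
        for _ in range(quarter_turns % 4):
            dx, dy = -dy, dx  # one 90 degree counter-clockwise turn
        rotated.append((cx + dx, cy + dy))
    return rotated


def merge_camera_views(views):
    """Combine per-camera edge points into one vertical-plane point set.

    views[i] holds the points from camera i, which is assumed to be
    rotated by i * 90 degrees relative to camera 0.
    """
    merged = []
    for camera_index, points in enumerate(views):
        merged.extend(rotate_quarter_turns(points, camera_index))
    return merged


# The same local point seen by camera 0 and camera 1 lands on
# two different sides of the table after merging.
print(merge_camera_views([[(150, 100)], [(150, 100)]]))
```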

2.2. Image Processing

An image can be defined as a two-dimensional function f(x, y), where x and y are the plane coordinates and f is the amplitude of the image at the point (x, y), referring to the gray level or darkness. If x, y, and the amplitude values of f are all finite, discrete quantities, the image is called a digital image. An image transferred to a computer is no more than a raw photograph. Image processing is necessary to determine and extract the areas of interest in the photograph [9] [10].

2.3. Morphological Operations

Mathematical morphology is a powerful image-processing tool based on nonlinear neighborhood operations and is used to isolate objects that are to be removed or distinguished from other regions in an image [11] [12]. Two morphological operators, erosion and dilation, are the basis of mathematical morphology. They are applied by moving the structuring element sequentially over the image matrix. The image is first converted to binary format so that dilation or erosion can be performed with a structuring element.

A structuring element is a matrix prepared with the desired dimensions and shape. It can take one of several geometric shapes; the most commonly used are square, rectangular, and circular. Examples of structuring elements are shown in Figure 3.

There may be breaks or gaps in the lines in the images obtained. To correct these errors, morphological dilation, in which enlargement or thickening operations are performed, is applied to the digital image (Figure 4). How the dilation will be done is determined by the structuring elements.

In the images obtained, there may be small protrusions on the lines, or the borders of two different shapes may merge. To correct these defects, morphological erosion, in which reduction or thinning operations are performed, is applied to the image (Figure 5). The erosion process is roughly the reverse of the dilation process.

Figure 4. Morphological dilation process.

Figure 5. Morphological erosion process.

As a result of the dilation and erosion morphological processes, the original image is deformed. Opening and closing morphological processes are used to minimize damage traces.

If erosion and then dilation are applied to a digital image, the result is the opening of the image. Opening separates two objects that are close to each other without changing the image much. Conversely, closing is obtained by applying dilation and then erosion to the digital image. Closing connects two objects that are close to each other without changing the image much. The mathematical representations of these operations for an image A and a structuring element B are as follows.

1) Dilation: $A \oplus B$

2) Erosion: $A \ominus B$

3) Opening: $A \circ B = (A \ominus B) \oplus B$

4) Closing: $A \bullet B = (A \oplus B) \ominus B$

To reveal the laser light line formed on the profile, morphological operations are applied to improve the non-overlapping edges, and the edges in the image are sharpened.
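The four operations defined above can be sketched for a binary image stored as nested lists. This is an illustrative sketch only: the function names and the two structuring elements are assumptions, pixels outside the image are treated as background, and the paper does not state which structuring element was actually used.

```python
# Structuring elements as lists of (row offset, column offset) pairs.
SQUARE_3X3 = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
HORIZONTAL_3 = [(0, -1), (0, 0), (0, 1)]


def dilate(image, offsets=SQUARE_3X3):
    """Binary dilation: every foreground pixel stamps the element."""
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if image[y][x]:
                for dy, dx in offsets:
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w:
                        out[ny][nx] = 1
    return out


def erode(image, offsets=SQUARE_3X3):
    """Binary erosion: keep a pixel only if the whole element fits."""
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            fits = all(
                0 <= y + dy < h and 0 <= x + dx < w and image[y + dy][x + dx]
                for dy, dx in offsets
            )
            out[y][x] = 1 if fits else 0
    return out


def opening(image, offsets=SQUARE_3X3):
    """Erosion followed by dilation: removes small isolated specks."""
    return dilate(erode(image, offsets), offsets)


def closing(image, offsets=SQUARE_3X3):
    """Dilation followed by erosion: bridges small gaps in a line."""
    return erode(dilate(image, offsets), offsets)


broken_line = [[0, 1, 1, 0, 1, 1, 0]]        # laser line with a one-pixel gap
noisy_line = [[0, 1, 0, 0, 1, 1, 1, 0]]      # laser line plus an isolated pixel
print(closing(broken_line, HORIZONTAL_3))    # gap is filled
print(opening(noisy_line, HORIZONTAL_3))     # isolated pixel is removed
```

A line-shaped element is used in the demonstration because a 3 × 3 square would erase a line that is only one pixel thick.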

2.4. Edge Detection with Hough Transform

The Hough transform is a method for detecting regular geometric shapes that can be expressed mathematically, such as lines, circles, and ellipses, in an image [13]. Its basic principle is to use the analytical expression of the locus of the points forming the edge of the shape [14].

One of the difficulties in image processing is detecting groups of collinear points. All straight lines in the image form a two-parameter family (Figure 6). Once a parameterization is fixed for this family, any straight line can be represented by a single point in the parameter space; here the normal parameterization is used [15]. The definition is given in Equation (1).

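$$\rho = x\cos\theta + y\sin\theta \qquad (1)$$

where ρ is the perpendicular distance from the origin to the line and θ is the angle of the normal to the line.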

Hough transform was used to detect the laser light line in the obtained image by identifying the point groups with the same linearity after morphological operations.
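The voting idea behind the normal parameterization ρ = x cos θ + y sin θ can be sketched with a small accumulator. This is a simplified illustration, not the implementation used in the study: the function names, the 1° angle step, and the 1-pixel ρ bins are assumptions.

```python
import math
from collections import Counter


def hough_votes(points, theta_step_deg=1, rho_step=1.0):
    """Accumulate votes in (rho bin, theta in degrees) space.

    Each point (x, y) votes for every line that could pass through it,
    using rho = x*cos(theta) + y*sin(theta) for theta in [0, 180).
    """
    votes = Counter()
    for x, y in points:
        for theta_deg in range(0, 180, theta_step_deg):
            theta = math.radians(theta_deg)
            rho = x * math.cos(theta) + y * math.sin(theta)
            votes[(round(rho / rho_step), theta_deg)] += 1
    return votes


def strongest_line(points, theta_step_deg=1, rho_step=1.0):
    """Return (rho, theta_deg, vote_count) of the most voted line."""
    votes = hough_votes(points, theta_step_deg, rho_step)
    (rho_bin, theta_deg), count = votes.most_common(1)[0]
    return rho_bin * rho_step, theta_deg, count


# Synthetic thinned laser line: 100 pixels on the horizontal line y = 5.
laser_pixels = [(x, 5) for x in range(100)]
print(strongest_line(laser_pixels))
```

Collinear points all fall into the same (ρ, θ) cell, so the peak of the accumulator identifies the line; a horizontal line corresponds to θ = 90° with ρ equal to its height.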

Figure 6. Hough transform of a straight line: (a) ρ and θ representation of a straight line; (b) image space; (c) Hough space (Aydoğan, 2008).

2.5. Desargues Theorem

Desargues theorem is a mathematical statement that helps to understand perspective in an image (Figure 7). According to this theorem, two triangles (ABC and A'B'C') in three-dimensional space are in perspective for a viewer if the lines AA', BB' and CC' all intersect at a single point [16].

According to Desargues theorem, the edges and vertices forming similar geometric shapes in different planes are parallel to each other. The lines between the planes that connect corresponding edges and vertices converge at a perspective (vanishing) point. Looking from the vanishing point, we can therefore move a shape or pixel in any plane to another plane whose properties we know.

2.6. Conversion of Perspective Image to Vertical Plane

A camera view of a scene is essentially a 2D projection of a 3D scene. When an object or scene is lit by a light source, some of the light is reflected toward the camera and captured as a digital image. The light reaching the lens is projected onto the camera image plane according to the camera projection model. The camera projection model converts the 3-dimensional coordinates (x, y, z) to 2-dimensional (x, y) coordinates in the camera image plane. The spatial quantization of the image plane, in which the pixels are arranged as a grid, transforms the 2D image coordinates in the image plane to a pixel position (c, r) [17].

The scene image we are considering will be captured using perspective camera projection. In this study, the Desargues Theorem is used to transform the lines detected in the perspective image into the top view.

The perspective image formed in the camera can be symbolized as shown in Figure 8. The image formed by the camera that shoots from the horizontal plane at a certain angle is the image on the horizontal plane transferred to a plane with the same angle as the camera viewpoint. Shots taken with the camera can be interpreted as transferring the image from one plane to another plane.

According to Desargues Theorem, we can move a shape or pixel in any plane to another plane whose properties we know, by looking from the vanishing point. For this conversion, it is necessary to look at an object with known properties from the same vanishing point. It is sufficient to produce a reference value by obtaining the reflection of this object in the plane whose properties are known. Unknown pixels can be moved to a known plane when viewed from the vanishing point using the reference value (Figure 9).

In the camera's perspective image of a reference rectangle, each pair of corresponding sides converges at a vanishing point (Vp1, Vp2). A measurement line is drawn through the point Vr with the same slope as the line passing through Vp1 and Vp2; the perspective image can be projected back onto this line from the points Vp1 and Vp2. The sides of the rectangle intersect the measurement line at the points Vx, Vy, and Vr. Calibration coefficients dx and dy, in mm/pixel, are obtained using the real dimensions of the rectangle (Equation (2), Equation (3)).
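Locating the two vanishing points of the imaged reference rectangle comes down to intersecting the lines through its sides. The sketch below uses homogeneous line coordinates for this. The function names and the corner ordering (A, B, C, D around the quadrilateral, so that AB faces DC and AD faces BC) are assumptions made for illustration, and the corner values are invented.

```python
def line_through(p, q):
    """Homogeneous line (a, b, c) with a*x + b*y + c = 0 through p and q."""
    (x1, y1), (x2, y2) = p, q
    return (y1 - y2, x2 - x1, x1 * y2 - x2 * y1)


def intersect(l1, l2):
    """Intersection point of two homogeneous lines."""
    a1, b1, c1 = l1
    a2, b2, c2 = l2
    w = a1 * b2 - a2 * b1
    if abs(w) < 1e-12:
        raise ValueError("lines are parallel in the image: no finite vanishing point")
    return ((b1 * c2 - b2 * c1) / w, (a2 * c1 - a1 * c2) / w)


def vanishing_points(a, b, c, d):
    """Vanishing points of the two pairs of opposite sides of quad ABCD."""
    vp1 = intersect(line_through(a, b), line_through(d, c))
    vp2 = intersect(line_through(a, d), line_through(b, c))
    return vp1, vp2


# Invented pixel corners of a rectangle seen in perspective.
vp1, vp2 = vanishing_points((0, 0), (6, 0), (4, 2), (1, 3))
print(vp1, vp2)
```

The slope of the measurement line then follows from the direction vp2 - vp1, as described in the paragraph above.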

Figure 8. Image modeling in a single-line camera.

Figure 9. Conversion from 2D perspective angle plane to vertical coordinate plane.

(2)

(3)

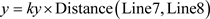

After this stage, a line is drawn between each point in the perspective image and each vanishing point, and the intersection points of these lines with the measurement line are found. The x and y coordinates in the vertical coordinate plane are calculated by multiplying the distance of these intersection points from the reference point Vr by the calibration values (Equation (4), Equation (5)).

(4)

(5)
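Because Equations (2) to (5) are not reproduced here, the following is only one plausible reading of the procedure described in the text: each image point is projected from a vanishing point onto the measurement line, and its pixel distance from Vr is scaled by a calibration coefficient. The function names, the use of signed distances, and the assignment of Vp1 to the x coordinate and Vp2 to the y coordinate are assumptions, and all numeric values are invented.

```python
import math


def cross(a, b):
    """z component of the 2D cross product of vectors a and b."""
    return a[0] * b[1] - a[1] * b[0]


def offset_on_measurement_line(point, vanishing_point, v_ref, direction):
    """Signed pixel distance from v_ref to the point where the line through
    vanishing_point and point crosses the measurement line."""
    norm = math.hypot(direction[0], direction[1])
    d = (direction[0] / norm, direction[1] / norm)
    e = (point[0] - vanishing_point[0], point[1] - vanishing_point[1])
    denom = cross(d, e)
    if abs(denom) < 1e-12:
        raise ValueError("projection line is parallel to the measurement line")
    to_vp = (vanishing_point[0] - v_ref[0], vanishing_point[1] - v_ref[1])
    return cross(to_vp, e) / denom


def calibration_coefficient(real_length_mm, offset_px):
    """mm/pixel from a reference side of known length (assumed form)."""
    return real_length_mm / abs(offset_px)


def to_vertical_plane(point, vp1, vp2, v_ref, dx, dy):
    """Map an image point to (x, y) in mm in the vertical coordinate plane."""
    direction = (vp2[0] - vp1[0], vp2[1] - vp1[1])  # slope of the measurement line
    x_mm = dx * offset_on_measurement_line(point, vp1, v_ref, direction)
    y_mm = dy * offset_on_measurement_line(point, vp2, v_ref, direction)
    return x_mm, y_mm


# Invented example: a 200 mm reference side spans 8 px on the measurement line.
dx_demo = calibration_coefficient(200.0, 8.0)
print(to_vertical_plane((2, 5), (0, 10), (20, 10), (0, 0), dx_demo, 10.0))
```

Signed offsets are kept so that points on opposite sides of Vr remain distinguishable; taking absolute values would match a literal reading of "distance".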

Since the cross-sectional measurement will be made from the vertical plane image, first of all, the calibration values of the x and y axes in the vertical plane must be determined.

For this purpose, the image of the reference rectangle transferred to the vertical plane contains two groups of parallel lines that are perpendicular to each other. By calculating the distance between the parallel lines, the kx and ky calibration values are obtained in mm/pixel (Equation (6), Equation (7)).

(7)

(8)

The kx and ky values are used to measure the size of the profile placed on the measuring table. The camera images in the perspective plane are converted to obtain the view in the vertical coordinate plane. The parallel and perpendicular lines in the vertical plane image are then determined with the Hough algorithm. The dimensions of the profile are determined by calculating the distances between the parallel lines with Equation (9) and Equation (10).

(9)

(10)
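For lines expressed in the (ρ, θ) form of the Hough transform, the distance between two parallel lines is the difference of their ρ values, which is then scaled by kx or ky. The sketch below illustrates this under stated assumptions: the function name, the angular tolerance, and the convention that lines with θ near 0° bound the width and lines with θ near 90° bound the height are not taken from the paper, and the numbers are invented.

```python
def profile_dimensions(lines, kx, ky, angle_tol_deg=2.0):
    """Estimate (width_mm, height_mm) from Hough lines in the top view.

    lines is a list of (rho_px, theta_deg) with theta in [0, 180).
    kx and ky are calibration values in mm/pixel.
    """
    x_edges, y_edges = [], []
    for rho, theta in lines:
        if theta > 180.0 - angle_tol_deg:
            # A line at e.g. 179 degrees is the same as one at -1 degree
            # with the sign of rho flipped.
            rho, theta = -rho, theta - 180.0
        if abs(theta) <= angle_tol_deg:
            x_edges.append(rho)   # near-vertical edge: x is about rho
        elif abs(theta - 90.0) <= angle_tol_deg:
            y_edges.append(rho)   # near-horizontal edge: y is about rho
    if len(x_edges) < 2 or len(y_edges) < 2:
        raise ValueError("need two parallel edges in each direction")
    width_mm = (max(x_edges) - min(x_edges)) * kx
    height_mm = (max(y_edges) - min(y_edges)) * ky
    return width_mm, height_mm


# Invented Hough output for a rectangular section, 0.5 mm/pixel in both axes.
demo_lines = [(50, 0), (140, 0), (30, 90), (90, 90)]
print(profile_dimensions(demo_lines, kx=0.5, ky=0.5))
```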


3. Results

The performance of the method in determining the outer cross-section dimensions of a rectangular profile placed in the middle of the simulation system was examined. In this study, an application was developed to measure the dimensions of the object by distinguishing the red laser light on the object (Figure 10).

The algorithm was tested on rectangular profiles of different sizes. A thresholding algorithm was used to segment the red color. Morphological techniques were applied to refine the line. The image was then transformed from the perspective view to the top view. The top-view images from the cameras were combined, and morphological and thinning operations were applied. The dimensions of the object were obtained from the edge lines found with the Hough algorithm.

Figure 10. Images obtained by applying algorithms.

The developed algorithms have been tested for materials of 6 different sizes. The test was conducted 5 times and statistical results were obtained. Average measurement results for each dimension and their deviations are given in Table 1.
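The averaging of repeated measurements and the resulting percentage deviation can be sketched as follows. The exact definition of deviation used for Table 1 is not given in the text, so the relative difference between the mean measurement and the nominal size is assumed here. The function name is hypothetical and the sample values are invented for illustration; they are not taken from Table 1.

```python
from statistics import mean


def measurement_summary(nominal_mm, measured_mm):
    """Return (average in mm, deviation in percent) for repeated measurements.

    Deviation is assumed to be |average - nominal| / nominal * 100.
    """
    average = mean(measured_mm)
    deviation_pct = abs(average - nominal_mm) / nominal_mm * 100.0
    return average, deviation_pct


# Invented example: five repeated measurements of a nominally 40 mm side.
avg, dev = measurement_summary(40.0, [40.2, 39.8, 40.4, 40.6, 40.0])
print(f"average = {avg:.2f} mm, deviation = {dev:.2f} %")
```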

These studies were performed on an Intel i7 1.6 GHz laptop. Processing times for each size are given in Table 2. The average processing time is 1.25 s. As the material size increases, the processing time also increases.

4. Discussion

No similar study is available in the literature against which to compare the results. Hence, we analyzed the results in terms of accuracy and computation time to see whether the method can be an alternative to manual identification of the cross-sectional dimensions of profiles by operators.

When the results in Table 1 are evaluated, it is seen that the material size can be measured with an average deviation of 1.04% for each size. An accuracy of almost 99% is an indication of the success of the method used. There is no relationship between the deviation and the size of the material.

Table 1. Profile size test results.

Table 2. Profile test processing times.

When the size of the profile increases, the computation time mostly increases as well. However, the difference in computation time between sizes is not large. On this offline simulation platform, 1.25 s is a reasonable time for obtaining the dimensions of a profile. In a real-time application, a much faster response may be needed, depending on the line speed. In that case, either using a more powerful computer or dropping some frames from the image stream would be a solution.
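The trade-off between processing time and line speed can be made concrete with a short calculation. Only the 1.25 s average processing time comes from this study; the camera frame rate, the line speed, and the function names below are hypothetical values and names chosen for illustration.

```python
import math


def frame_stride(processing_time_s, frames_per_second):
    """Process every n-th frame so that processing keeps up with the stream."""
    return max(1, math.ceil(processing_time_s * frames_per_second))


def measurement_spacing_m(line_speed_m_per_s, processing_time_s):
    """Length of profile that passes between two consecutive measurements."""
    return line_speed_m_per_s * processing_time_s


# 1.25 s per measurement (from the results), assumed 30 fps camera
# and an assumed line speed of 2 m/s.
print(frame_stride(1.25, 30))
print(measurement_spacing_m(2.0, 1.25))
```

Under these assumed values, only one frame in 38 would be processed and consecutive measurements would be 2.5 m apart along the profile, which shows why a shorter processing time matters at higher line speeds.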

The identification of the profile edges depends strongly on image quality. Using a better-quality laser light source, a high-resolution camera, and a filter suited to the wavelength of the laser light would further improve the accuracy of the result.

5. Conclusions

In this study, it has been shown that the method developed for extracting the cross-sectional dimensions of a rectangular profile can be applied in the production environment.

The program has been tested on square and rectangular profiles. According to the measurements made in the prototype measuring system, an average accuracy of around 99% is achieved. Better results can be achieved by obtaining higher-quality images, which depends largely on the components of the measurement system.

In future studies, the application is planned to be extended to I, H, and L profiles and to rails.

To reduce the image processing time, a dedicated high-capacity computer can be used. With such a setup, the method can be implemented in real time.

In addition, the method introduced in this study can be used to develop 3-dimensional applications for calculating the volumes of objects with different geometric shapes.