Typos Correction in Overseas Chinese Learning Based on Chinese Character Semantic Knowledge Graph ()

1. Introduction

Chinese characters have been used continuously for the longest time so far. They are the only characters in the major writing systems of ancient times that have been passed down till now. Other ancient characters such as Hieroglyphics and Cuneiform have disappeared. Only Chinese characters still have a lasting vitality. They are also the only highly developed ideographic characters that are still widely used in the world. Ideograph is a system of writing words or morphemes in symbolic writing, which does not directly or simply represent speech.

Chinese characters are among the most widely adopted writing systems in the world by the number of users. In recent years more and more foreign friends begin to learn Chinese characters. The majority of Chinese characters are pictophonetic characters, accounting for 85% of the total. A pictophonetic character is composed of two parts: the descriptive component and the phonetic component. The descriptive component usually gives the hint of the boundary of the character’s meaning, while the phonetic one suggests the pronunciation of the character. Therefore, many foreigners learn Chinese characters employing the descriptive component or the phonetic component. However, due to the development of the glyphs and the phonetic system of Chinese characters, many Chinese characters have the phenomenon that the descriptive component does not indicate the meaning or the phonetic component does not indicate the pronunciation. Even for native Chinese speakers, the pronunciation of Chinese characters cannot be simply deduced from their glyphs, and the specific meanings of Chinese characters cannot all be deduced from their descriptive components. Not to mention foreign Chinese beginners whose native language is phonetic [1] . It is not always possible to get consistent results according to the structure of the six categories of Chinese characters, because the same character may be classified into different categories by different experts [2] . The meaning expressed by Chinese characters is related to the ideograph used, while the meaning of most Chinese characters is the extension of the concept expressed by ideograph, or the expansion or reduction of the meaning of words. Therefore, if the knowledge structure of ideograph is mastered, it is equivalent to mastering the meaning of most Chinese characters. The Chinese character writing system represents and classifies lexical units according to semantic classes. So it is important to learn the meaning of the Chinese character glyph.

Being proficient in Chinese is difficult, and even native Chinese speakers often make mistakes. After reviewing about 3000 books, 1000 journals, and 100 newspapers, the Journal of YAOWEN JIAOZI has sorted out a batch of mistake characters based on the frequency of errors and experts’ comments [3] . The top 10 of them are shown in Table 1.

In Chinese communication and international education, the understanding of Chinese characters is the most basic requirement. However, the current Chinese character learning methods, beginners mainly rely on the Chinese character glyph to learn, it often encounters great obstacles, such as UTF8gbsn “肓(the organ between the heart and the diaphragm)” and “肓(blind)”, “粟(millet)” and

![]()

Table 1. The top 10 mistake characters of native Chinese speakers (the characters in the brackets are correct, and the letters in square brackets are the Pinyin of Chinese characters).

“粟(chestnut)”, it difficult to distinguish and understand them through the glyph. From the HSK Dynamic Composition Corpus [4] , we have calculated the top 10 Chinese characters (the group marked in blue) that foreign learners are most likely to make mistakes, as shown in Figure 1.

In Figure 1, the common Chinese character mistakes come from the HSK Dynamic Composition Corpus. Two groups are compared in the figure, where the blue one is the common mistakes of foreign learners and the red one is the common mistakes of native Chinese speakers. And those characters in the red group are corresponding to Table 1.

Comparing Figure 1 and Table 1, we can find that Chinese beginners and native speakers have very different levels of understanding of Chinese. The top 10 most common wrong Chinese characters listed in Table 1 do not maintain the same trend in the HSK Dynamic Composition Corpus. Therefore, it is inconsistent that foreigners who want to learn Chinese use the same method to study Chinese. We should base on the actual situation and needs of foreign learners and provide targeted learning methods.

The mistake Chinese characters can be divided into wrongly written characters and mispronounced characters. With the help of the Chinese input methods, there is little chance of wrongly writing, so most mistakes belong to mispronounced characters. The common causes of mispronounced characters are similarity in the glyph, similarity in pronunciation, similarity in meaning, similarity in both glyph and pronunciation, similarity in glyph, pronunciation and meaning. However, correct recognition of mispronounced characters requires an accurate understanding of the meaning of Chinese characters.

For example, several common mistakes are shown in Table 2. They come from the real composition exams corpus [4] of foreign students who are learning Chinese. It is worth noting that these Chinese characters are all sorted out from the handwritten answer sheets.

In Table 2, the left side of “|” is English, and the right side of “|” is Chinese. As can be seen from Table 2, it is not enough to learn Chinese characters only by their glyphs and pronunciations. It is more important to learn and compare them from a semantic point of view. If Chinese character learning is integrated into the understanding of the semantics, the learning efficiency can be greatly improved. For example, the character “片(slice)” is related to “木(wood)” and it means to split “木(wood)” into two halves [5] . Their semantic correlation is shown in Figure 2.

![]()

Figure 1. The top 10 frequent Chinese characters of common mistakes.

![]()

Table 2. Samples of common mistakes in Chinese learning.

It can be seen from Figure 2 that the correlation between “木(wood)” and “片(slice)” is established through “cut”. Taking “木(wood)” and “片(slice)” as nodes and “cut” as edges to form a semantic association diagram, then “木(wood)” and “片(slice)” can be combined into a knowledge unit to establish a common cognitive model, greatly improving the traditional learning mode of Chinese characters.

All the Chinese characters have such semantic association relations. If the Chinese characters are associated together using their semantics, and thus form a large knowledge graph, the Chinese learners can use it to master a large number of Chinese characters quickly. For the construction of the Chinese character

![]()

Figure 2. The semantic relation between wood and slice.

semantic knowledge graph (CCSKG), the main task is to discover the entities and the semantic relations between them. HowNet [6] is an online common-sense knowledge base unveiling inter-conceptual relations and inter-attribute relations of concepts as connoting in lexicons of the Chinese and their English equivalents. Its set of sememe is established on a meticulous examination of about 6000 Chinese characters. Therefore, it is a powerful tool for constructing the CCSKG.

Chinese characters are ideographic characters, and radicals are ideographic components. Chinese learners must understand the components and radicals. Xiong et al. [7] used radicals to express the semantics of Chinese characters and proposed the concepts of Chinese character genes and families. However, they did not take into account the problem that the same character has different semantics in different contexts.

With the rapid development of deep learning, many research and applications have achieved breakthrough results, such as Oracle Bone Inscription detection [8] [9] , corn variety identification [10] . The knowledge base still has its advantages in the era of deep learning. Niu et al. [11] verified that integrating word sememe information can improve word representation learning. Xie et al. [12] studied the automatic prediction of lexical sememes based on semantic meanings of words encoded by word embeddings. Zeng et al. [13] used the sememe information with the attention mechanism to capture the exact meanings of a word, so as to expand and improve the lexicon. However, these studies are advanced applications of Chinese and do not provide a good solution to the problems faced by beginners, especially foreigners, in learning Chinese.

In summary, existing research has not focused on the actual situation of overseas Chinese learners and has not analyzed the differences in the causes of their typos compared to native Chinese speakers. These studies also do not represent Chinese character knowledge from the perspective of their semantics, so they cannot solve the problem of typos caused by similar shapes or pronunciations. This paper constructs a knowledge graph from the perspective of Chinese character semantics and attempts to solve these problems.

The main contributions of this paper are listed as follows:

• Consider the intellectual associations of Chinese characters in terms of their original meanings rather than their glyphs. This makes it easier to solve the difficulties of foreigners in learning Chinese.

• The CCSKG is constructed from the semantic perspective, which makes the entity relations in the graph have rich semantic information. The prototype of the CCSKG comes from the commonly used Chinese characters, which are the basis for foreigners to learn Chinese.

• The scale extension of the CCSKG based on HowNet, which is still built on the basis of semantics. Because HowNet itself is a powerful Chinese-English common sense knowledge base, it has an inherent semantic computing advantage.

• The Chinese sentences are represented as subgraphs in the CCSKG, and typos are corrected by using graph algorithms.

• The elementary level is based on the understanding of Chinese characters rather than words, which greatly reduces the difficulty of learning Chinese.

• The basic requirements of Chinese language learning can be met without large-scale labeled samples and training sets.

The rest of the paper is structured as follows: Section 2 introduces the process of the CCSKG building in detail. Section 3 is the experiment and analysis. The corpus and dataset used are also presented. Finally, Section 4 presents our conclusions and points out the next research priorities.

2. CCSKG Construction

We have presented the construction process of CCSKG in [5] . Firstly, we build an initial set of Chinese characters, called seed set. Secondly, we establish semantic relations between Chinese characters based on the seed set to form the prototype knowledge graph. They are classification clusters based on the Chinese character semantic families. Thirdly, use word pairings in HowNet to expand the radical knowledge graph. Fourthly, based on the similarity calculation of OpenHowNet, more Chinese character entities and relations can be obtained, thereby enriching the knowledge graph. Finally, the integrated entities and relations form the CCSKG. The construction process is shown in Figure 3.

2.1. Knowledge Graph Seed Set

There are about 100,000 Chinese characters. Considering the learning and usage scope of overseas Chinese learners, we have collected and organized 6374 commonly used Chinese characters from authoritative Chinese textbooks, including 256 single-component characters. We refer to the set of these Chinese characters as a seed set, where each element is a node in the CCSKG.

2.2. Knowledge Graph Prototype Construction

Sorting the seed set will form a prototype of the knowledge graph. The Chinese characters in the seed set are divided into 190 groups according to 190 radicals, as shown in Figure 4. The first Chinese character in each line is a radical, which

![]()

Figure 3. The CCSKG construction process.

![]()

Figure 4. The Chinese characters are divided into 190 groups based on their radicals.

is the basic structural unit of Chinese characters. The characters after the colon in each line are composed of that radical as a component, each line forms a group. Each Chinese character in the same group is related to its radical of the group.

Radicals contain semantic information, and semantic families of Chinese characters can be constructed based on radicals. In this way, we have constructed 190 semantic families. Therefore, the prototype of a knowledge graph of Chinese characters is obtained. The prototype knowledge graph nodes and their corresponding Chinese characters are shown in Figure 5.

We notation the original seed character set C. For each Chinese character

, according to the Chinese character radical set

, the initial classification is made to form k subsets

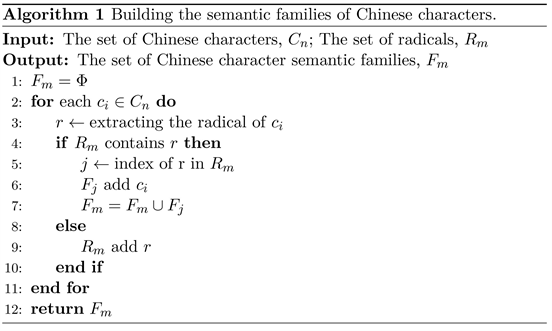

named Chinese character semantic families. The reason for the seed set selection is that those commonly used Chinese characters are closest to the daily life, and they are more frequently used and easier to master. There are two reasons for the preliminary classification based on the radicals. The first one is that the radicals and components contain semantic meaning of Chinese characters and the second one is that they are also the basis of current Chinese character learning methods based on glyph. Algorithm 1 for constructing the semantic families of Chinese characters is as follows.

![]()

Figure 5. The prototype knowledge graph of Chinese characters.

2.3. Knowledge Graph Extension Based on HowNet Pairings

HowNet provides examples of words that have multiple meanings. These examples emphasize their ability to distinguish rather than their ability to interpret. Their purpose is to provide reliable help for disambiguation. So we can find the correlation between Chinese characters from these examples. Figure 6 shows an example.

In Figure 6, the Chinese character “学(study)” in HowNet provides several examples, by using these examples we can find new Chinese characters as well as the relations between them, thus obtaining a new graph.

The method to extend the knowledge graph based on HowNet is as follows: for each Chinese character

, to find the pairing characters through the word examples in HowNet. These paired characters form a new set

. For each

, if

, the correlation between

and

is established.

![]()

Figure 6. An example of extension using HowNet pairings.

If

, the

is added to the set C, then build the relation between

and

. By using this method, the entities in the knowledge graph of Chinese characters can be expanded, and the more relations between entities can be obtained, so we can get richer semantic information. Since we are concerned about the relations between Chinese characters, we only choose 2-character words as the target when looking for pairing words.

Take any Chinese character node from the prototype of the CCSKG and input it into HowNet to find its senses. By selecting the 2-character words corresponding to the key E C from the description structure of the senses (see Figure 6(a)), more nodes and relations can be obtained based on HowNet’s pairings, thereby expanding CCSKG.

2.4. Knowledge Graph Enrich Based on OpenHowNet Similarity

Since most existing machine learning datasets merely provide logical labels, label distributions are unavailable in many real-world applications. Research on label distribution learning (LDL) has gradually attracted attention [14] . Word embedding transforms words into a distributed representation. Therefore, the similarity between words can be obtained through word vectors. Niu et al. [11] found that integrating sememe information of Hownet into word representation learning can effectively improve the performance of word embedding. OpenHowNet [15] provides a convenient way to search information in HowNet, display sememe trees, calculate word similarity via sememes, etc. Inspired by this, we also consider fusing distributed representation and knowledge representation to calculate the character semantic similarity.

We extended our calculation model for the correlation of Chinese characters, which is composed of multiple factors. As long as the result of the correlation between two Chinese characters is greater than the specified threshold, they can establish a semantic relation, thereby expanding the CCSKG. The formulas for calculating the relevance of two Chinese characters are as follows.

(1)

(2)

(3)

(4)

where

indicates the semantic correlation degree between the two Chinese characters

and

;

is called the matching coefficient, which refers to whether

and

can be combined into a meaningful word. The combination of

and

is disordered;

is called the component coefficient, which indicates how many common parts of the glyph components that make up

and

;

is the set of components that make up the Chinese character

, and

is the set of components that make up the Chinese character

;

is referred to as the intimacy coefficient, which represents the semantic distance relationship between the Chinese character and the family of the Chinese character;

represents the sum of the shortest paths between the nearest common ancestors of

and

;

means the number of semantic families spanned by

.

In formula (1),

represents the semantic relevance of

and

, which is provided by the HowNet calculation tool. HowNet provides interfaces for semantic relevancy calculations, using these interfaces to compute semantic relevancy between words. If the result is 1, it indicates that there is a correlation between words, so a relation can be established between them. If the result is 0, there is no semantic correlation between them and there is no need to establish the relation.

represents the similarity between

and

, provided by OpenHowNet, its algorithm implementation is based on [16] .

2.5. CCSKG Integration

The integration of CCSKG is to integrate the relations based on partial classification and the new entities and relations based on HowNet and OpenHowNet extension, and remove duplicates in both methods. All Chinese characters in character set C are regarded as nodes, and the relations between characters as edges, thus forming the semantic knowledge graph of Chinese characters. The knowledge graph based on semantic correlation can intuitively display the related Chinese characters. Taking these related Chinese characters as the knowledge community, they can be grasped and understood semantically, thus effectively avoiding the problem of learning Chinese characters based on glyph discrimination.

The expansion of pairing words and semantic relevancy calculation based on HowNet and OpenHowNet greatly enriches the prototype of CCSKG. Comparing the prototype knowledge graph with the integrated one, we found that the nodes and relations in the knowledge graph have increased dramatically. The number of nodes increased by 5.93% and the number of relationships increased by 400%. As shown in Figure 7.

![]()

Figure 7. The scale comparison before and after integration.

The CCSKG consists of Chinese characters as nodes, and the relations between the nodes are directional, which indicates the collocation of the characters with their radicals and with other Chinese characters. To facilitate queries and semantic computations, we store the CCSKG in the Neo4j graph database. The current size of the CCSKG is 6752 nodes and 104,187 relations. A screenshot of the CCSKG fragment is shown in Figure 8.

2.6. Link Prediction Algorithm for Chinese Correction

Once the CCSKG is constructed, we can perform Chinese correction based on the graph structure. It is regarded as a link prediction problem. That is, the set of Chinese characters that constitute meaningful words in a sentence is regarded as a subgraph in the CCSKG, and the correctness of the target word is determined by computing whether the characters that make it up belong to the same community as that subgraph. Since link prediction algorithms help determine the closeness of a pair of nodes using the topology of the graph. The computed scores can then be used to predict new relationships between them [17] .

Specifically, given a CCSKG, denoted by

, where E denotes the set of nodes, R denotes the set of relations, and F denotes the set of facts. A Chinese sentence can be converted into a subgraph

of G, where

is the set of Chinese characters,

is the set of relations among Chinese characters, and

is the set of facts composed of Chinese characters. The

composed by a sentence can be regarded as a community, and our approach is to calculate whether the candidate Chinese characters belong to the same community to determine whether the word they constitute is a correct word. For the sake of simplicity, we assume that there is only one word in a Chinese sentence that needs to be judged as correct or not. The calculation process is as described in Algorithm 2. Thanks to Neo4j for providing Graph Data Science (GDS) library that can provide us with Community detection and similarity algorithms.

3. Experiments and Analysis

3.1. Dataset

The dataset used in this paper comes from the HSK dynamic composition corpus

version 1.1 created by Beijing Language and Culture University, which is a corpus of compositions written by foreigners whose native language is not Chinese for the Advanced Chinese Proficiency Test. The compositions of some foreign students from 1992 to 2005 are collected. The scale of the corpus is 11,569 articles and 4.24 million characters in 29 composition topics [18] . The corpus provides two versions: annotated corpus and original corpus. The annotated one is a corpus that is manually entered into a computer and manually marked with various interlanguage errors. The original one refers to the electronically scanned corpus of the students’ original compositions.

The dataset was generated based on the following considerations: 1) since we mainly focus on the correction of mispronounced characters, we chose the annotated mispronounced characters corpus from the HSK dynamic composition corpus; 2) we have separated the correct Chinese characters and mispronounced characters, since the original annotated sentences in the corpus merges the two together.

There are several dataset samples shown in Table 3.

In Table 3, the annotated sentences are the original sentences in the HSK composition corpus, and the characters marked with B indicate that they are mispronounced characters; the source sentences represent the actual composition sentences of the foreign students, which may contain errors or may be correct; the target sentences are correct, they may be the same as the source sentences, or they may be manually corrected sentences. It is worth noting that we are concerned about the B-marked characters in the HSK corpus, and other marked characters need to be processed accordingly. For example, the Chinese character “慮” marked F is a traditional Chinese character, it is semantically correct, but the glyph is different from the simplified Chinese character “虑”. Therefore the target sentences will contain both glyphs. See sentences No. 2 and No. 3 in Table 3.

3.2. Chinese Character Typos Correction

To verify the validity of the CCSKG, we also conducted experiments with word correction as the task. Among the experimental data, the probability of incorrect sentences is 14.67%. The experimental task is described as giving the target word that needs to be detected, finding its corresponding position in the experimental sentences, removing it through MASK, and transforming it into a cloze task. The predicted result is considered as the corrected word and then compares with the word dropped by MASK. Since our current CCSKG considers 2-character words, our word correction targets are also 2-character words. We employ False Acceptance Rate (FAR) and Recall as evaluation criteria. The formulas are as follows:

(5)

(6)

where

is the number of sentences that were correct but were incorrectly corrected;

is the number of correct sentences originally;

means the number of sentences that were originally incorrect and were finally corrected correctly;

is the number of incorrect sentences originally.

We compare the effect of our proposed method with keras-bert and pycorrector-ernie. BERT [19] is designed to pretrain deep bidirectional representations from unlabeled text by jointly conditioning on both left and right context in all

layers. ERNIE [20] is designed to learn language representation enhanced by knowledge masking strategies, which includes entity-level masking and phrase-level masking. Pycorrector [21] is a Chinese text error correction tool. It uses the language model to detect errors, Chinese Pinyin feature and shape feature to correct Chinese text error, it can be used for Chinese Pinyin and stroke input method. The experimental results are shown in Table 4.

It can be seen from Table 4 that our method has achieved the best results. It has the lowest FAR and the highest Recall. We are more concerned with the whole word mask, compared to the best results of keras-bert and pycorrector + ernie, our FAR is reduced by 38.28%, and the Recall is increased by 40.91%. The analysis of the experimental results is as follows:

• Incorporating knowledge into self-supervised learning methods can indeed improve the performance of natural language processing tasks. For example, compared to keras-bert and pycorrector + ernie our method incorporates the knowledge elements of HowNet.

• The importance of the 2 characters that make up the word is significantly different, and predicting the latter character by the former word is significantly better than predicting the former character by the latter word. This also proves that the relationship between words in the CCSKG is directional.

• Since the HSK composition corpus comes from the compositions of international students, there are some grammatical errors in the sentences, which affect the performance of typos correction.

• The accuracy of word segmentation tools has an impact on text error correction. For example, “世界/上” is mistakenly divided into “界上”, “现在/考虑” is mistakenly divided into “在考” and so on.

• Bert and Ernie provide large-scale pre-training corpora, but these corpora are from high-quality Chinese material, which is significantly different from the HSK composition corpus. In the absence of large-scale HSK pre-training corpora, incorporating knowledge as a guide is an effective solution.

• HSK composition corpus contains traditional Chinese characters, such as “考慮”. In experiments, we found that keras-bert and our method have poor performance in processing traditional characters. In the case of pycorrector, although it supports traditional Chinese characters, it does not always convert them to the correct simplified Chinese and often substitutes them based on Pinyin, which leads to errors.

• When correcting typos, the methods used in the experiment have a lower accuracy in correcting homophones than those caused by similar shapes. Adding pronunciation attributes to CCSKG may be a good solution.

4. Conclusions

To help foreign learners to catch the meaning of Chinese characters during the Chinese foreign communication and propagate, the CCSKG construction method is proposed. Unlike other Chinese character glyph description methods, we pay close attention to the Chinese characters’ original meaning and their semantic relevancy. In addition, as a powerful knowledge base, HowNet is used to extend and perfect the knowledge graph. We have obtained 6752 Chinese characters and 104,187 relations to meet the needs of Chinese overseas communication and international education. We realized the visualization of the CCSKG and verified its effectiveness in word correction through experiments. However, HowNet currently does not collect traditional Chinese characters and words, so it is temporarily unable to perform semantic analysis on traditional Chinese characters which are often encountered in overseas Chinese communication scenes. Moreover, currently, our CCSKG only considers 2-character words. In future work, we will integrate other resources of Chinese character knowledge into semantic representation models, such as Oracle Bone Inscriptions characters, traditional Chinese characters. And extend them to the CCSKG. And the representation of multi-character words is also an issue that needs to be studied. Thus, we can better serve Chinese cultural exchange and overseas dissemination.

The earlier simple version of this paper was presented at the 2022 International Conference on Computer Engineering and Artificial Intelligence.

Acknowledgement

This work is supported by the Key Technology Project of Henan Educational Department of China (22ZX010), the National Natural Science Foundation of China (62106007), the Chinese Ministry of Education and National Language Commission Special Project for Research and Application of Oracle Bone Inscriptions and other ancient characters (YWZ-J023), the Industry-University-Research Innovation Fund of China (2021RYA05002), and Henan Provincial Department of Science and Technology Research Project (232102320169).