1. Introduction

Phase retrieval is a problem in recovering the unknown signal

from the following model:

(1)

where

is the known measurements matrix, n is the number of measurements,

is the squared-magnitude measurements,

is noise or outliers [1],

denotes the element-wise absolute-squared value. Especially, when both A and x belong to the real field, the problem is called real-valued phase retrieval [2] [3]. Phase retrieval problem has many important applications, including X-ray crystallography [4], optics [5], astronomy [6], and blind ptychography [7]. The methods to solve PR problems can be roughly divided into two categories: the first is based on alternating projection proposed by Gerchberg and Saxton. Examples include Hybrid Input-Output (HIO), Hybrid Projective Reflection, and so on. In recent years, some scientists have proposed more advanced alternating projection algorithms. The second is the convex method of semidefinite programming (SDP) or convex relaxation for quadratic equations in the dirt (1). The most representative is PhaseLift [8], which was proposed by Cand, Strohmer, and Voroninski. This is an algorithm for minimizing convex trajectories (kernels) using an SDP-enhanced technique. In many applications, especially those related to imaging, the signal

allows sparse representation under certain known and deterministic linear transformations. Without losing generality, we assume that signal x itself is sparse in the rest of this paper. In this case, model (1) is called the sparse phase recovery model.

To solve the sparse problem,

norm regularization is a common approach in the field of compression perception. In further study of the phase diagram, [9] results are as follows: 1) As the p-value decreases, the solution obtained by L regularization becomes more sparse. 2) When

, L1/2 regularization always gets the most sparse solution, and when

, there is no significant difference in

regularization performance. Therefore, the L1/2 regularization can be used as a representative of the

regularization.

For asymmetric noise or outliers, some stable results have been obtained. For example, [10] developed for minimizing the least squares empirical loss and designed a two stages algorithm, which starts with a weighted maximal correlation initialization and then follows by the reweighted gradient iterations. However, most of the above methods are built upon the least squares (LS) criterion which is optimal for Gaussian noise, but may not be optimal if the noise is not Gaussian or asymmetric. To enhance the robustness against asymmetric noise or outliers, introduced quantile regression (QR) method which includes LAD method as a special case. Compared to LAD method, QR method involves minimizing asymmetrically-weighted absolute residuals, which permits a much more accuracy portrayal of the relationship between the observed covariates and the response variables. Therefore, it is more approproate in certain non-Gaussian settings to use QR method. For these reasons, QR has attracted tremendous interest in the literature. Recently, the penalized QR method has also gained a lot of attention in the high dimensional linear models, see for example [11] [12] [13] [14] [15].

On the other hand, recall that L1/2-regularization introduced by [9] and [16] has been widely used in many fields since it can generate more sparse solutions under fewer measurements than L1-regularization. Inspired by these works, we here propose a novel method which consists of QR and an L1/2-regularization. We call this method L1/2-regularized quantile regression phase retrieval (L1/2 QR PR). Because the L1/2-regularized problem is non-convex, non-smooth, non-lipschitz, it is difficult to solve directly. Therefore, we design an ADMM algorithm to solve the correspoding optmization. Fortunately, all subproblems have closed solutions and convergence is guaranteed. Numerical experiments show that the proposed method can recover sparse signals with fewer measurements and is robust to asymmetrically distributed noises such as dense bounded noises and Laplacian noises.

The rest of this article is organized as follows. In Section 2, we design a novel algorithm based on ADMM to solve our method L1/2 QR PR and discuss the convergence of proposed algorithm. In Section 3, we use a large number of numerical experiments to prove the effectiveness and robustness of our algorithm. Conclusions and future work are presented in Section 4.

2. Optimization Algorithm

2.1. The Problem Formulation

The optimization problem that we consider is minimizing the problem as follow:

(2)

where

have been described in (1),

,

stands for the inner product.

is the regularized parameter, and

.

Noting that

and

, we obtain that

which together with the continuity of the object function yields that Problem (2) has a bounded solution. To design an appropriate iterated algorithm, we give the following two lemmas.

Lemma 1. (see [16] [17]) The global solution

of following problem has analytic expression,

where

with

Lemma 2. The global solution

of following problem has analytic expression,

where

,

is a constant. It can be derived

where

and

(3)

as

.

One can get this lemma in the similar way of [18]. So, we omit the details.

2.2. Solving the Objective with ADMM

We now employ the alternating direction method of multipliers (ADMM) algorithm to solve Problem (2). By introducing a pair of new variables, we reformulate Problem (2) as

(4)

where

and

. Then, the augmented Lagrangian function of the above problem is

(5)

where

are the Lagrange multipliers, r is a positive constant.

Based on the framework of ADMM, the

th iteration can be calculated as

(6)

In the following, we discuss the solution to each sub-minimization problem with respect to (w.r.t.)

.

1) Sub-minimization problem with respect to x: This problem can be written as

(7)

Notice that

(8)

By the first order optimal condition, we can obtain the optimal solution. After analysis and comparison, we can conclude that

where

stands for the identity matrix.

2) Sub-minimization problem with respect to q: This problem can be written as

(9)

By simple calculation, one can see that the above problem is equivalent to the following minimization,

(10)

According to the Lemma 1, we get

where

is the d-th element of the vector

, and

.

3) Sub-minimization problem with respect to z: This problem can be written as

(11)

where

. For each

, the following problem

(12)

can be solved by

based upon Lemma 2. To the ease of presentation, we introduce the notation

whose i-th element is defined as

. Therefore, the problem (11) can be solved by

where

denote the Hadamard product, respectively.

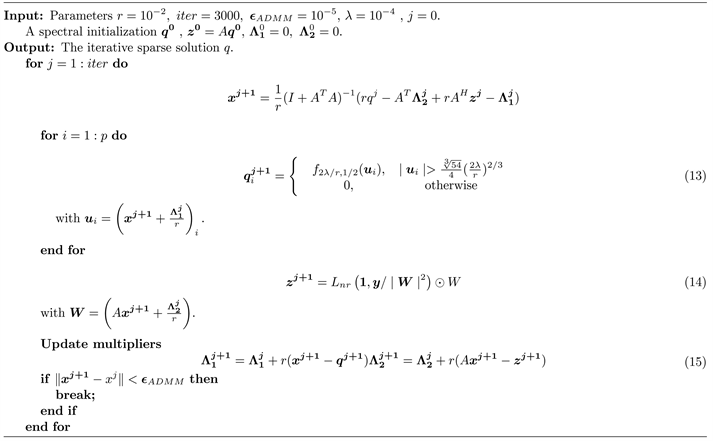

According to the above analysis, the iterative scheme for solving (4) can be given in Algorithm 1.

2.3. Convergence Analysis

As discussed above, one can see that each subproblem of the proposed algorithm is well defined. Therefore, we discuss its convergence. Consider q-sub-problem

Algorithm 1. L1/2QR PR: ADMM method for solving (4).

(16)

where

. According to [19], the first-order stationary point definition of (16) is given as follow.

Definition 1. Let

be a vector in

and

where

denotes a

diagonal matrix whose diagonal is formed by the vector

. The vector

is a first-order stationary point of (16) if

Similar to [20], we introduce the Karush-Kuhn-Tucker (KKT) conditions of the Lagrangian

in (2) as follows

(17)

where

is a saddle point,

represents the partial derivative,

,

is the first-order stationary point of q-subproblem (10).

Since the Lagrangian

is nonconvex w.r.t.

. After analyzing the first-order optimality conditions for the variable z and the subproblem (12), the above KKT conditions corresponding to these three variables can be described as

where

Similar to Theorem 2.4 in [18], we show that our proposed algorithm converges to a saddle point satisfying KKT conditions.

Theorem 3. Assume that the successive differences of the two multipliers

converge to zero and

is bounded. Then there exists a subsequence of iterative sequence of Algorithm 1 converging to an accumulation point that satisfies the KKT conditions of the saddle point problem (5).

3. Numerical Experiments

All simulations were performed on a 64-bit laptop computer running Windows 11 system with an AMD A8-6410 APU and 4 GB of RAM. To demonstrate the efficiency of our proposed method, we calculate the relative error between

and

as

where

is the true signal and

is the solution obtained by a solver. In all experiments, we carry out 100 Monte Carlo runs.

3.1. Experimental Parameters and Initialization

We take

in all experiments, generating the true signal as Gaussian random sparse vector. The measurements matrix A generates from

. Regularization parameter

is given by a fixed 10−4. The penalty parameter r is chosen as 10−2.

For nonconvex problems, ADMM can converge to different (and in particular, nonoptimal) points, depending on the initial values and the penalty parameter [21]. We take Wirtinger flow [22] with initial point

, which obeys

, where

. Hence the algorithm proposed converges from the neighborhood of the global minimizer.

Like many phase retrieval methods, we use spectral initial values to achieve better recovery. To evaluate the efficiency of our method, we compare the successful recovery rate with 5 kinds of algorithm listed in Table 1.

![]()

Table 1. Comparison of reconstruction methods.

3.2. Success Rate Comparisons

We compare the recovery success rates of L1/2QR PR at different values of

in the noise-free case and noisy case including dense bounded noise and Laplace noise.

1) When not disturbed by noise, let

. We report the success rates on Table 2 where the recovery is considered successful if the relative error is less than 0.0001.

2) When in dense bounded noise, let

. The entries of the dense bounded noise are generated independently from

, where

. We say that the recovery is successful if the relative error is less than 0.001. Since the recovery success rate is 0 as

, we here report the other cases on Table 3.

3) When in Laplace noise, let

and

. The entries of Laplace noise are generated from Laplace

, where

. We say that the recovery is successful if the relative error is less than 0.005 and report the success rate on Table 4.

From Tables 2-4, one can see that the recovery success rate of L1/2QR PR with

is usually better than when

equals other values. For Laplace noise, L1/2QR PR with

and

both perform well when

.

![]()

Table 2. Success rate of L1/2QR PR (Without noise).

![]()

Table 3. Success rate of L1/2QR PR (Dense bounded noise).

![]()

Table 4. Success rate of L1/2QR PR (Laplace noise).

Next, let’s continue to compare recovery success rate with the other five algorithms under different measurement fractions (n/p). We continue to use the same parameters and recovery success criteria as above. And let

. Figure 1 shows how these algorithms behave without noise. Through repeated experiments, we find that median-MRWF has a better recovery success rate when measurement fractions (n/p) = 2, 3 and no noise interference in real field. The recovery success rate of L1/2QR PR is slightly lower than that of median-RWF and median-MRWF, and higher than that of other algorithms. Figure 1 also shows how these algorithms behave when disturbed by noise. When receiving interference from dense bounded noise, the recovery success rate of L1/2QR PR is higher than that of the other five algorithms in the real field. When receiving interference from laplace noise, the recovery success rate of L1/2QR PR is higher than that of the other five algorithms in the real field.

In the following chapters, we make

. And we fix the sparsity to s = 8 and use the competing algorithms listed in L1/2QR PR the L1 norm loss function, and L0L1PR adds L0 regularization. The median-RWF and the median-MRAF of the L2 norm loss function are highly robust to outliers through heuristic truncation rules. We compare the relative errors relative to the iteration count T under different measurement fractions (n/p), x is the true signal, x (t) is the tth iteration point.

Remark 3.1. We take n = 2p, 3p, 4p, 5p, 6p in real case. In detail, n = 2p and n = 4p are approximate theoretical sample complexity. When lad-admm returns to stability, we actually use n = 6p.

3.3. Exact Recovery for Noise-Free Data

In the noise-free case, Figure 2 shows in real case, when n = 2p, L1/2QR PR and L1/2LAD PR have good recovery performance. When n = 3p, 4p, 5p, 6p, L1/2QR PR is slightly better than other 5 algorithms.

3.4. Stable Recovery with Dense Bounded Noise

Now, we consider the existence of dense bounded noise. The entries of the dense bounded noise are generated independently from

, where

. It can be seen from Figure 3, L1/2QR PR shows great robustness to dense bounded noise in real case, while LAD-ADMM shows poor performance. L1/2QR PR and L1/2LAD PR have similar performance when n = 4p

![]() (a)

(a)![]() (b)

(b)![]() (c)

(c)

Figure 1. Recovery success rate. (a) No noise; (b) Dense bounded noise; (c) Laplace noise.

in real case. Median-RWF and median-MRAF have similar performance when n ≥ 4p in real case. Another reasonable observation, we find the relative reconstruction error has 10 times increase as η shrinks by a factor of 10 for all algorithms.

3.5. Stable Recovery with Laplace Noise

Finally, we consider the presence of Laplace noise, the entries of Laplace noise are generated from Laplace

, where

. As can be observed in Figure 4, surprisingly, L1/2QR PR and L1/2LAD PR are very robust to Laplace noise, especially in real case, no matter when n = 2p, 3p, 4p, 5p, 6p. However, other methods show poor performance, even when n = 6p, LAD-ADMM, L0L1PR, median-RWF, median-MRAF don’t have satisfactory recovery. Another logical observation, we find the relative reconstruction error has 10 times increase as

shrinks by a factor of 10 for all algorithms.

The simulation results show that the L1/2QR PR algorithm with quantile loss function and L1/2 regularization has two significant advantages over other methods. One is the ability to recover the signal with fewer measured values, and the other is the robustness to asymmetrically distributed noise (such as dense bounded noise and Laplacian noise).

4. Conclusion

We proposed the L1/2 QR PR method and designed an efficient algorithm based on the framework of ADMM. A series of numerical experiments show that the proposed method can recover sparse signals with fewer measurements and is robust to asymmetrically distributed noises such as dense bounded noises and Laplace noises. An interesting future research direction is to consider the complex situations, such as Fourier basic measurements, which is an application of coded diffraction patterns [26].

Acknowledgements

The authors would like to thank the editor and anonymous reviewers for their insight and helpful comments and suggestions which greatly improve the quality of the paper. The research was supported by the National Natural Science Foundation of China (NSFC) (11801130), the Natural Science Foundation of Hebei Province (A2019202135), the National Social Science Fund of China (17BSH140), “The Fundamental Research Funds for the Central Universities” in UIBE (16YB05) and “The Fundamental Research Funds for the Central Universities” (2022QNYL29).

NOTES

*Si Shen and Jiayao Xiang are joint first authors.

#Corresponding author.