1. Introduction

With the development of modern computer information technology, various data have emerged in many application fields such as mobile Internet, statistics, and psychometrics. These data are not only huge in scale, but also have the remarkable characteristics of extremely high dimensionality and complex structure. As a natural representation of multi-dimensional data, tensors are the generalization of 1-order vectors and 2-order matrices in higher-order forms [1].

In recent years, the research interest in tensors has expanded to many fields: e.g., computer vision [2], machine learning [3], pattern recognition [4], signal processing and image processing [5] [6]. For example, in a video recommendation system, user rating data can be expressed as a 3-order tensor of “user × video × time” [7]; a color image is a 3-way object with column, row and color modes. Compared with vectors and matrices, in these applications, tensors can better retain the intrinsic structure information of high-dimensional spatial data. But the tensor of interest is frequently low-rank, or approximately so [8], and hence has a much lower-dimensional structure. This motivates the low-rank tensor approximation and recovery problem. The core of the low-rank tensor recovery model is the tensor rank minimization.

In matrix processing, the rank minimization is NP-hard and is difficult to solve, the problem is generally relaxed by substitutively minimizing the nuclear norm of the estimated matrix, which is a convex relaxation of minimizing the matrix rank [9]. However, as a high-level extension of vectors and matrices, whether there is also a method similar to the matrix LMRA for low-rank tensor approximation and how to extend the low-rank definition from a matrix to a tensor is still a question worth studying. Because the notions of the tensor nuclear norm, and even tensor rank, are still ambiguous or nonuniform.

The most two popular tensor rank definitions are the CANDECOMP/PARAFAC(CP)-rank, which is related to the CANDECOMP/ PARAFAC decomposition [10], and Tucker-rank, which is corresponding to the Tucker decomposition [11] [12]. The CP rank is defined as the smallest number of rank one tensor decomposition, where the computation is generally NP-hard [13] and its convex relaxation is intractable. For the Tucker rank minimization, Liu et al. [14] first proposed the sum of nuclear norms (SNN) of unfolding matrices of a tensor to approximate the sum of Tucker rank of a tensor for tensor completion. Unfortunately, albeit the tucker rank and its convex relaxation are tractable and widely used, its direct unfolding and folding operation tend to damage intrinsic structure information of the tensor and SNN is not the convex envelope of the tucker rank according to [15].

Recently, Kilmer et al. [16] focused on a new tensor decomposition paradigm, namely tensor singular value decomposition (T-SVD), which is similar to the singular value decomposition of matrix. By using T-SVD, Kilmer et al. [16] defined the concept of tensor tubal rank, which is based on tensor-product (t-product) and its algebraic framework. T-SVD allows the matrix analysis to be extended to the tensor, while avoiding the inherent information loss in matricization or flattening of the tensor. The tensor tubal rank can well characterize the intrinsic structure of a tensor, and thus, solving low-tubal-rank related problems starts to attract more and more reasonable research interest these years.

This work focuses on the low-tubal-rank tensor recovery problem under the T-SVD framework. For the underlying tensor with low-tubal-rank assumption, it can be recovered by solving the following optimization:

(1)

where

is a regularization parameter,

denotes the tensor tubal rank.

It has been proved that solving (1) is NP-hard. The commonly used convex relaxation for the rank function is the tensor nuclear norm. In the recent work [17], Lu et al. first defined the tensor average rank and deduced that a tensor always has low average rank if it has low tubal rank and then proposed a novel tensor nuclear norm that is proven to be the convex envelope of the tensor average rank within the unit ball of the tensor spectral norm, which performed much better than other types of tensor nuclear norm in many tasks. This methodology is called as tensor nuclear norm minimization (TNNM). The nuclear norm of a tensor

, denoted by

. The TNNM [17] approach has been attracting significant attention due to its rapid development in both theory and implementation. A general TNNM problem can be formulated as

(2)

However, even though tensor nuclear norm relaxation is becoming a popular scheme for the low-tubal-tensor recovery problem, it is also associated with shortcoming. The tensor nuclear norm suffers from the major limitation that all singular values are simultaneously minimized, which implies that large singular values are penalized more heavily than small ones. Under this circumstance, method based on nuclear norms usually fails to find a good solution. Even worse, the resulting global optimal solution may deviate significantly from the ground truth. In order to break through the limitations of the tensor nuclear norm relaxation method, there is currently work to solve the low-tubal-rank recovery problem by employing a weighted tensor nuclear norm and nonconvex relaxation strategy. The essence of relaxation is to overcome the unbalanced punishment of different singular values. Essentially, they will keep the larger singular values larger and shrink the smaller singular values. Hence, the method has already been well studied and applied in many low-tubal-rank tensor recovery problems, and in real applications, weighted tensor nuclear norm relaxation methods usually perform better than tensor nuclear norm relaxation. In addition, for low-rank representation, the Frobenius norm has been shown to perform similarly to the nuclear norm [18] [19].

This paper mainly studies the tensor completion problem. Different from the existing nonconvex tensor completion models, this paper also considers the stability and low rank of the solution of the tensor recovery model, and proposes a relatively stable low-rank nonconvex approximation of tensors. Specifically, our contributions can be summarized in the following three points.

・ First, Similar to the tensor nuclear norm definition form, we show that the square of the tensor Frobenius norm can be defined as the sum of its singular value squares. Based on the above definition, assign different weights to singular values of a tensor. We propose a surrogate of the tensor tubal rank, i.e., the WTNFN.

・ Second, to optimize the WTNFN-based minimization Problem, we extend the weighted tensor nuclear and Frobenius norm singular value thresholding (WTNFNSVT) operator which is given with careful derivations. For the tensor in the complex field, and demonstrate that it is the exact solution to the WTNFN-based minimization problem. The bounded convergence is also demonstrated with strict proof.

・ Third, we adapt the proposed weighted tensor nuclear and Frobenius norm model to different computer vision tasks. In tensor completion the model outperforms TNN methods in both PSNR index and visual perception. The proposed WTNFN-TC models also achieve better performance than traditional algorithms, especially, the low-rank learning methods designed for these tasks. The global optimal solution obtained above is relatively stable compared to existing weighted nuclear norm models.

The rest of this paper proceeds as follows: Section II briefly introduces some notations, preliminaries, and some preliminary works; Section III analyzes the optimization of the WTNFNM models, including the basic WTNFN operator in detail, and the convergences of the proposed methods are specifically analyzed with strict formulation; Section IV applies the WTNFNM model to tensor completion and compares the proposed algorithm with numerical experiments demonstrate the effectiveness of the proposed methods compared with other state-of-the-art methods; finally the conclusions and future work are given in Section V.

2. Notations and Preliminaries

2.1. Notations

We denote tensors by boldface Euler script letters, e.g.,

. Matrices are denoted by boldface capital letters, e.g.,

, vectors are denoted by boldface lowercase letters, e.g.,

, and scalars are denoted by lowercase letters, e.g., a. Real and complex numbers are in

and

fields respectively. For a 3-way tensor

, we denote

,

, and

as the i-th horizontal, lateral and frontal slice. Generally, the frontal slice

can also be denoted as

. We denote its

-th entry as

or

. The tube is denoted

. The inner product between

and

in

, is defined as

, where

stands for the trace, and

is the conjugate transpose of

, and the inner product of any two tensors

and

in

,

.

For any

, the complex conjugate of

is denoted as

which takes the complex conjugate of each entry of

.

is the nearest integer greater than or equal to b. Now we consider applying the Discrete Fourier Transformation (DFT) on tensors.

is the result of the Discrete Fourier Transformation of

along the third dimension, that is,

(3)

We denote

as a block diagonal matrix with its i-th block on the diagonal as the i-th frontal slice

of

,. i.e.,

(4)

Next, we define the block circulant matrix

reshapes tensor

into a block circulant matrix with size

, i.e.,

(5)

2.2. T-Product and T-SVD

For tensor

,

unfolds tensor

as follows:

(6)

According to [17], t-product is equivalent to the familiar matrix multiplication in the Fourier domain.i.e.,

is equivalent to

. Then the tensor product of two third-order tensors can be defined as follows:

Definition 1 (T-product [16]) Let

and

. Then the t-product

is defined to be a tensor of size

(7)

Definition 2 (Conjugate Transpose [16]) The conjugate transpose of a tensor of

size

is a

tensor

obtained by conjugate transposing each of the frontal slice and then reversing the order of transposed frontal slices 2 through

. If tensor is

, Conjugate transpose is also recorded as

.

Definition 3 (Identity Tensor [16]) The identity tensor

is the tensor whose first frontal slice is an

identity matrix, and whose other frontal slices are all zeros.

Definition 4 (Orthogonal Tensor [16]) A tensor

is orthogonal if it satisfies

(8)

Definition 5 (F-diagonal Tensor [16]) A tensor is called F-diagonal if each of its frontal slices is a diagonal matrix.

As stated above, tensor-SVD can be defined.

Theorem 1 (T-SVD [17]) Let

. Then it can be factorized as

(9)

where

,

are orthogona tensors, and

is an F-diagonal tensor.

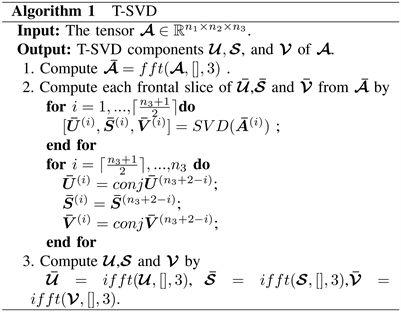

It is worth noting that T-SVD can be efficiently computed based on the matrix SVD in the Fourier domain. The details of T-SVD are described in Algorithm 1.

Definition 6 (Tensor Tubal Rank [20] [21]) For

, the tensor tubal rank, denoted as

, is defined as the number of nonzero singular tubes of

, where

is from the T-SVD of

. The tensor tubal rank is equivalent to the number of nonzero singular values of

. That is

(10)

where

, it uses the property induced by inverse DFT. Denote

with

, therefore, the Tensor unclear norm and Frobenius norm tensor are defined as follows.

Definition 7 (Tensor Nuclear Norm (TNN) [22]) For tensor

, based on the result about the tensor nuclear norm derived from the T-product. we have the tensor nuclear norm (TNN) is defined to be

(11)

Theorem 2 (Tensor Frobenius Norm (TFN)) Given a tensor

, if

the following hold:

(12)

3. Proposed Algorithm Scheme

Based on the definition of tensor nuclear norm and tensor Frobenius norm in Definition 8 and Theorem 2, we present a new nonconvex regularizer which is defined as the sum of the weighted tensor nuclear norm and the square of the weighted tensor Frobenius norm (WTNFN) of

(13)

where

is the weight matrix,

,

is a positive parameter.

3.1. Problem Formulation

Consider the general weighted tensor nuclear and Frobenius norm framework for tensor tubal rank minimization problems

(14)

where

,

is a positive parameter. And the loss function

satisfy the following assumptions:

・

is continuously differentiable with Lipschitz continuous gradient, i.e., for any

(15)

where

is the Lipschitz constant.

・ f is coercive, i.e.,

when

.

To enhance low rank, we need to design a scheme to keep the weights of large singular values sufficiently small and the weights of small singular values sufficiently large, which will lead to a nearly unbiased low rank approximation. Intuitively, one can set each weight, which the entries of matrix

to be inversely proportional to the corresponding singular value

, which will penalize large singular values less and overcome the unfair penalization of different singular values.

3.2. Methodology

We solve problem (14) by generating an iterative sequence

, where

is the minimizer of a weighted tensor nuclear and Frobenius norm regularized problem that comes from the linearized approximation of the objective function

at

. To realize this, for the loss function

, we approximate it as a quadratic function

(16)

Since optimizing

directly is hard, we minimize its approximation (16) instead. Hence, we solve the following relaxed problem to update

(17)

By ignoring constant terms and combining others, (17) can be expressed equivalently as

(18)

Thus, consider

the above formula is equivalent

to the following, which is a nonconvex proximal operator problem. Next, we will prove (18) has a closed-form solution by exploiting the special structure of it.

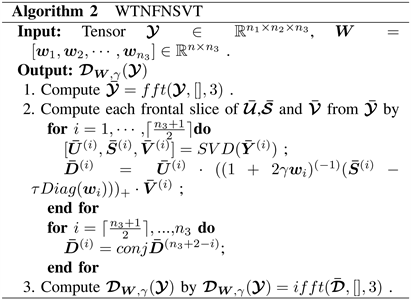

3.3. WTNFNSVT Operator

The weighted tensor nuclear and Frobenius norm singular value thresholding (WTNFNSVT) operator is defined as follows to compute the proximal minimizer of the tensor weighted nuclear and Frobenius norm minimization.

Theorem 3 (WTNFNSVT Operator) Let

be the T-SVD of

. For any

,

, the solution of the problem

(19)

is given by

, which is defined the WTNFNSVT operator as

(20)

where

,

is F-diagonal whose diagonal entries of the j-th frontal slice are equal to the j-th column of weight matrix

. The entries of weight matrix

satisfies

,

,

.

Theorem 2 shows that the WTNFNSVT operator

gives a close-from of the proximal minimizer of the WTNFN minimization with monotone nonnegative weights, which is a natural extension of the weighted matrix SVT. The feasibility of this extension is guaranteed by the T-SVD framework. Using Algorithm 1, we show how to compute

efficiently in Algorithm 2.

Obviously, when

the weighted tensor nuclear norm minimization (WTNNM) was a special case of this problem at that time. And secondly, when all weights were set the same, tensor nuclear norm minimization (TNNM) was a special case of this problem. Therefore, the solution of this model covers the solutions of traditional TNNM and WTNNM.

3.4. The Setting of Weighting Matrix

In previous sections, we proposed to utilize the WTNFNM model and its solving method. By introducing the weight vector, the WTNFNM model improves the flexibility of the original TNNM model. However, the weight vector itself also brings more parameters in the model. Appropriate setting of the weights plays a crucial role in the success of the proposed WTNFNM model.

Inspired by the weighted unclear norm proposed by Gu et al. [23], a similar weighting method can be used in the weighted tensor unclear norm and Frobenius norm, that is

(21)

where

is the singular value of the tensor

in the k-th iteration,

is the corresponding weight of

. Because (21) is monotonically decreasing with the singular values, the non-descending order of weights with respect to singular values will be kept throughout the reweighting process. According to the Remark 1 in [23], we can get Corollary 1 as follow:

Corollary 1. Let

be the T-SVD of tensor

,

denotes singular value of the tensor

. If the regularization parameter C is positive and the positive value

is small enough. By using

the reweighting formula

, and initial estimate

. The reweighted problem

(22)

has a closed-form solution

(23)

where

is a F-diagonal tensor,

. Consider

and

. We get

(24)

The proof of Corollary 1 can be found in supplementary material. Corollary 1 shows that although a reweighting strategy (21) is used, we do not need to iteratively perform the thresholding and weight calculation operations. In each iteration of both the WTNFNM models to solve the typical tensor recovery problems, and the tensor completion algorithms, such a reweighting strategy is performed on the WTNFNSVT subproblem (step 2 in Algorithms 2) to adjust weights based on current

. In implementation, we initialize

as the observation tensor

. The above weight setting strategy greatly facilitates the WTNFNM calculations. Note that there remain two parameters C and

in the WTNFNM implementation. In all of our experiments, we set it by experience for certain tasks. Please see the following sections for details.

3.5. Convergence Analysis

In this section, we give the convergence analysis for (18). Suppose that the sequence

is generated by Algorithm 2. For the simplicity of notation, we denote

as the singular value of

in the k-th iteration, and

is the corresponding weight of

.

Theorem 4. In problem (14), assume that

satisfy the assumptions and

is Lipschitz continuous. Consider

, and the parameter

for any

,

. Then the sequence

and

generated by WTNFNM satisfies the following properties:

1)

is monotonically decreasing. Indeed,

(25)

2) The sequence

is always bounded. Moreover,

.

3) Any accumulation point

of the sequence

is a critical point of

.

Proof. (1) First, with the definition of

, i.e.,

(26)

We have

Then

which can be rewritten as

(27)

Second, note that f is Lipschitz gradient continuous. According to [ [24] Lemma 2.11], we have

(28)

Finally, we combine (27) and (28), it obtains that

which leads

(29)

Since for any integer k, if

we have

always held. Then the monotone search criterion (25) is always satisfied, which makes the

is monotonically decreasing.

2) It’s easy to obtain that

is bounded. Then, we know that

is bounded as well from the assumption. Besides, summing up the inequality (27) for all

, it leads that

Hence, we get

(30)

From (30), it yields that

(31)

3) Because of the boundedness of the sequence

, there exists a subsequence

such that it converges to an accumulation point

, i.e.,

. Based on that convergence result (31), we have

, which implies that

for all i and j. Note that the subsequence

is bounded obviously, according to the [ [25] Proposition 2.1.5], there exists

such that

for all i and j. Denote

(32)

Since

is optimal to problem (30), there exists

such that

(33)

such that

(34)

Let

in the above equation, then we obtain that there exists

(35)

Thus

is a critical point of problem (14). This completes the proof.

4. Experiments

In this section, we conducted a series of experiments on both synthetic and real-world data to demonstrate the superiority of the proposed model. we empirically demonstrated that the WTNFN regularization algorithm leads to more stable solutions than WTNN (with

). Moreover, it could achieve better performance when compared to the previous algorithm.

We solved the low rank tubal tensor completion problem by the following program using the Discrete Fourier Transform based tensor nuclear norm based on the proposed weighted tensor nuclear and Frobenius norm

(36)

where

were respectively the observed data and the underlying recover result, a binary support indicator tensor. Zeros in

indicate the missing entries in the observed tensor, and

was a linear operator that keeps the entries in unchanged and sets those outside zeros. It was easy to know that the gradient of the squared loss function was a Lipschitz continuous function with a Lipschitz constant

. The quality of the restored image was evaluated in terms of the peak-signal-to-noise ratio (PSNR) defined by

(37)

4.1. Stability of the WTNFN Regularization Model

In this section, we show that WTNFN model leads to more stable solutions and provides improved experimental results than WTNN for color images. In our experiments, the testing images shown in Figure 1 were the “Im1” and “Im5” with size 300 × 300 × 3. For each image, pixels were sampled randomly with sampling rates of 30%, 50%, and 70%. These two images were used to illustrate the effectiveness of the Frobenius norm in the WTNFN.

We first applied the WTNFN with different

values to “Im1” and “Im5”. A suitable value of should

result to a stabilized solution and meanwhile result to a solution of high PSNR value. We denote WTNN the WTNN regularization model with

, WTNFN1 with

is about 10−6, WTNFN2 with a lager

about 10−5. Figure 1 shows the PSNR values of sampling rates of 30%, 50%, and 70%, respectively, for recovering images “Im1” and “Im5” 10 times. Comparing the WTNFN with different values of

, we can find that the PSNR values of WTNFN1 and WTNFN2 are more stable than that of WTNN, which indicates that the Frobenius norm in the WTNFN penalty can induce stable solutions. In addition, we found that WTNFN11 and WTNFN2 behave very similarly for the values of PSNR for the same image at the same sample rate. So we should choose moderate value of

to balance the stability and the quality of the restored image. The results in Figure 1 show that setting

to make sure that always achieves good results.

4.2. Synthetic Experiments

We conducted an experiment to demonstrate the practical applicability of the WTNFN heuristic for recovering low-rank tensors. We generated a tensor by

, where the entries of

and

were independently sampled from standard Gaussian distribution. The tubal rank of tensor

was defined as r. We solve (36) and obtain the solution

. Then we reported to the tubal rank

of

and Relative Error was defined as

(38)

We simply considered tensors of size

, with

. To

![]()

Figure 1. Performance of the WTNFN on images “Im1” and “Im5” for different randomly sampled sets. (a) Results for sampling rate 30% “Im1”. (b) Results for sampling rate 30% “Im5”. (c) Results for sampling rate 50% “Im1”. (d) Results for sampling rate 50% “Im5”. (e) Results for sampling rate 70% “Im1”. (f) Results for sampling rate 70% “Im5”.

create the known entries, we sampled

items uniformly from

, where p was the sampling rate. A reference quantity that is defined as

in the literature [26]. We set the parameter

,

for model (36) in experiment. Table 1 respectively gives the recovery results for the linear transform DFT. It can be seen that the WTNFN program (36) gives the correct rank estimation of

in all cases and the relative errors are small. Therefore, these results do a good job of validating the correct recovery guarantees of our algorithm.

Next, we studied the recovery phenomenon with different tubal ranks of

and different sampling rates of p. We generated a 50 × 50 × 50 tensor

similarly to the previous experiment. The range of variations for tubal ranks r and sampling rates p were

and

. The tubal rank was gradually increased in steps of 1. The sampling rate was gradually increased in steps of 0.01. We conducted an experiment for each

pair. The algorithms stopped when the Relative Error was less than 10−5. Figure 2 reports the percentage of success for each pair of WTNFN and TNN for the linear transform DFT, in which the yellow region reflects the full successful recovery, and the blue region reflects the full failed recovery. The bigger the yellow area, the stronger ability to recover. It can be seen that the WTNFN method has a much larger yellow area than the TNN method. The recovery phenomena can be explained by the fact that WTNFN has a stronger ability to recovery than TNN. In a word, the WTNFN minimization model is more robust and efficient than the classic TNN minimization model.

4.3. Real World Experiments

Due to coding and transmission difficulties, photos may be partially damaged or obscured by text or branding. Matrix completion methods, of course, can be used to recover the missing information in these images. We considered the

![]()

Table 1. Exact tensor completion on random data.

![]()

Figure 2. Comparison of the recovery capacity varying tubal rank r and sampling rate p of the WTNFN (a) and TNN (b). The x-axis represents the sampling rate p, ranging from 0.01 to 0.99, the y-axis represents the tubal rank r, ranging from 1 to 20.

WFNFN to color image recovery with partially missing pixels, as color images with three channels (Red, Green, Blue) can be modeled as third-order low-tubal rank tensors. The Peak Signal-to-Noise Ratio (PSNR) is a widely used measure for assessing image quality. To compare the performance of different approaches, we utilize the PSNR value of the recovered image. Larger values of PSNR indicate better image restoration performance.

We considered four methods for image recovery and compare their performance: 1) TNN-DFT [26]: tensor nuclear norm based discrete Fourier transform tensor completion model; 2) TNNR [27]: tensor truncated nuclear norm regularization; 3) Lp [22]: tensor completion by nonconvex Lp function regularization; 4) TCTF [28]: tensor completion by tensor factorization.

a) Random Mask: We first conducted experiments to deal with a relatively easy tensor completion problem. We randomly selected 5 images with a size of 300 × 300 × 3 from the LJU dataset, and randomly sampled 50% of pixels for each image.

Five recovered image examples with sampling rate of 50% by different methods are shown in “Fig.”. In the magnified views of the comparable sections in color boxes, it reveals that our recovered images have a clearer shape, fuller color, and brightness than the other four state-of-the-art tensor completion methods. Furthermore, we display the PSNR values achieved by various approaches on five images in Figure 3 when the sampling rate is 50%. It can demonstrate that the WTNFN-based algorithms can achieve much higher PSNR values compared with the other four state-of-the-art tensor completion methods quantitatively for the majority of the images.

b) Text Mask: Text removal is difficult because the pixels covered by the text are not spread evenly throughout the image, and the text may obscure essential textural information. We start by looking at the text's location and treating the

![]()

Figure 3. Five images of natural scenes.

![]()

Table 2. PSNR values of the various methods on text mask for image recovery.

![]()

Figure 4. Comparison of the PSNR values obtained by TNNDTF, TNNR, LP, TCTF and WTNFN on the tested images in Figure 5

items that correspond to it as missing values. As a result, the text removal problem can be thought of as a tensor completion problem in general. In this experiment, we apply our method to estimate the images from text mask.

As previously noted, we compared our results to the TNN-DFT, TNNR, Lp, and TCTF methods. PSNR measurements are used to assess performance. The recovered images from the five tensor completion algorithms are shown in Figure 6. On all five image sequences, We rounded the experimental results to two decimal places. Table 2 provides the PSNR values of the compared approaches. We

![]()

Figure 5. Performance comparison for image recovery on some sample images. (a) original image; (b) observed image; (c) recovered images by TNN-DFT, (d) recovered images by TNNR, (e) recovered images by Lp, (f) recovered images by TCTF, (g) recovered images by WTNFN.

can see that the WTNFN has absolute superiority, as evidenced by its far higher PSNR values.

5. Conclusions

In this work, we have presented a novel method called the weighted nuclear and Frobenius norm regularization for low-tubal rank tensor recovery. We proposed the solving approach for the weighted nuclear and Frobenius norm proximal (WTNFNSVT) operator. The WTNFN model was subsequently extended to tensor completion tasks, and efficient algorithms were developed to solve these using the derived WTNFNSVT operator. Influenced by the weighted nuclear for automatic weight setting. We also developed a rational scheme for automatic weight setting, which yields closed from solutions of the WTNFN operator. This approach can shorten the calculation time.

On both synthetic and actual visual datasets, we ran many experiments. In comparison to state-of-the-art tensor completion approaches based on the tenor nuclear norm or tensor factorization, the experimental findings indicate the

![]()

Figure 6. Comparison of tensor completion algorithms for text removal problem. (a) original image; (b) observed image; (c) recovered images by TNN-DFT (d) recovered images by TNNR, (e) recovered images by Lp, (f) recovered images by TCTF, (g) recovered images by WTNFN.

advantages of the WTNFN-based algorithms.

Acknowledgements

This work is supported by Innovative talents support plan of colleges and universities in Liaoning Province (2021).

Appendix A. Proof of Theorem 2

Lemma 1. Given a third-order tensor

if

is orthogonal, i.e.,

, that is

(39)

The theorem 2 can be proved by lemma 1.

Proof By lemma 1 and

can get

(40)

End of proof.

Appendix B. Proof of Theorem 3

Proof of Theorem 3 requires the following Lemma 2.

Lemma 2 (Proximal Operator for WNFN) For a matrix

, denote by

the singular value decomposition of matrix

, and

, the following minimization problem

(41)

The solution is

,

(42)

where

B1. Proof of Lemma 1

Proof In order to prove Lemma 2, we first show the following theorem for the proximal operator of weighted nuclear and Frobenius norm for real-valued matrices. Then, we extend the result to prove Lemma 2 for complex-valued matrices.

For any

, denote by

and

the singular value decomposition of matrix

and

, where

, and

are the diagonal singular value matrices. Based on the property of Frobenius norm, the following derivations hold:

Based on the von Neumann trace inequality, we know that

achieves its upper bound

if

,

. Then we have

From the above derivation, we can see that the optimal solution of the WNFNP problem is

, where

is the optimum of the constrained quadratic optimization problem.

The following proof holds the Lemma 2 on the complex number field.

Let

be an arbitrary complex-valued matrix and its SVD be

, where

,

,

,

,

,

,

, and

. Then, it is easy to verify that the SVD of the matrix

(43)

can be expressed as

(44)

Let

,

,

, and the functions

(45)

(46)

From the decomposition formula of the above matrix,

, According to Lemma 1, one minimizer to the following minimization problem

(47)

The optimal solution is

. Since

, the function

the same minimum value as

with

.

End of proof.

B2. Proof of Theorem 3

Proof Theorem 3 describes one minimizer to the following minimization problem

(48)

From Lemma 2, the optimal solution of the above formula can be expressed as

(49)

where

, denotes by

the singular value decomposition of matrix

. So denotes

,

,

as three tensors. Their frontal slices are

,

,

. Performing the inverse fast Fourier transform to get

,

,

, the optimal solution as follow

(50)

End of proof.

B3. Proof of Corollary 1

Proof From Lemma 1, we can see that the weighted near-end operator problem:

(51)

The closed form solution is

(52)

where

(53)

According to the updated rules of the algorithm, in each iteration, the weight

are in a non-descending order, and the singular values

can be updated independently in a non-increasing order as

(54)

Based on the prior condition that the weights are initialized as

, then we have

(55)

Since

. Then in the next iteration, we have

(56)

Then, the weights in the first and second iteration satisfy:

(57)

Hence, in the I iteration, suppose that, holds for all the singular values, then the inequality

(58)

In summary,

,

,

and

. Because the singular value

is bounded below by 0, then the sequence is convergent. And sequence

converges to a non-negative number

.

(59)

Then we have

(60)

where

and

.

End of proof.