Communication-Censored Distributed Learning for Stochastic Configuration Networks ()

1. Introduction

In traditional machine learning, it is generally assumed that the whole dataset is located at a single machine. However, in the big data environment, the massiveness and incompleteness of big data make single-threaded optimization algorithms face huge challenges that are difficult to solve. Therefore, parallel optimization and learning algorithms using shared-nothing architectures have been proposed, such as MapReduce [1], by dividing sample data and model parameters, using multi-threaded methods to train sub-dataset in parallel. The model parameters are updated in a shared memory [2]. However, it is impossible to collect data centrally for calculations on one machine sometimes. Data may need to be communicated between different machines [3], and the amount of data stored by one machine is limited after all. To solve the problem of distributed data storage and limited computation, a better way is to process data in a distributed environment, which leads to distributed learning algorithms.

Distributed learning is to use the same algorithm to train data distributed on multiple agents connected in a network and coordinate the state of each agent through neighboring communication to jointly obtain a consistent value, to achieve the effect close to the centralized training and learning on the whole dataset [4]. A general framework for training neural networks by distributed methods is summarized in the work of [5]. Generally speaking, in the distributed learning algorithm, a large amount of information communication between nodes on the network is required to achieve the purpose of cooperation. As the network scale increases, the communication load between network nodes may be unbearable. Especially in an environment with limited resources, redundant communications can increase the computational load and communication. The energy consumed by communication between nodes is much greater than the energy consumed by calculations [6]. In the case of limited energy resources, how to reduce the frequency of communication to obtain better system performance is an important topic of current research. This leads to an event-triggered communication mechanism. The concept of event-triggered control was introduced in [7]. The main idea is that information is transmitted between agents only when they are in extraordinary need [8]. Distributed event-triggered control has been developed in the present. [9] discusses the event-triggered consensus problem, applying event-triggered control to the multi-agent consensus problem. Model-based edge-event-triggered mechanisms in a directed communication topology were investigated in [10]. In the context of distributed coordination control, dynamic event-triggered control was first introduced [11].

Event-triggered control was originally a control method in the field of industrial control. In the process of data transfer between controllers, sensors and actuators, the updates of the controller output are mostly constant sampling with equal cycles. However, when a system is expected to run smoothly without external interference or less interference if the control tasks are still performed periodically at this time, this conservative control method may cause a heavy load on the communication and the controller. And even cause problems such as data congestion and delay. Therefore, in order to alleviate the shortcomings of the periodic sampling method, many researchers began to study event-triggered control, which is considered to be an effective alternative to the periodic sampling control method. Compared with the traditional periodic sampling control method, event-triggered control can effectively reduce the computational load and reduce the amount of communication.

Up to now, various trigger schemes have been proposed to improve communication efficiency. For the first time, Dimarogonas et al. used event-triggered control in the cooperative research of multi-agent systems. Under centralized and distributed control strategies, they designed event-triggered functions based on state dependence, and obtained corresponding event-triggered time series, getting two event-triggered methods. One is the centralized event-triggered control method [12]. This method requires all nodes to know the global information of the network, and also requires all nodes to update their control signals at the same time, that is, events occur at all nodes at the same time. The other is a distributed event-triggered control method [13]. According to this method, all nodes need to constantly access the state of neighboring nodes to determine when to update their state parameters. However, these requirements may be difficult to meet in practice. This paper mainly studies distributed event-triggered control based on state dependence.

This paper aims to introduce an event-triggered communication mechanism into the distributed learning algorithm based on stochastic configuration networks (SCN) to improve the distributed learning algorithm. Only when each node and its neighboring nodes on the network topology are in great need, information will be transmitted between them. By designing a trigger function and continuously monitoring the parameters, only when the error of the node parameters exceeds the threshold to meet the trigger function conditions, the node will transmit the variable information to its neighbors and update its variable information in time. Finally, achieve the purpose of effectively reducing the communication volume of distributed learning algorithms.

2. Related Works

2.1. Notation

For matrices

and

,

is represented as stacking two matrices by row.

as the inner product of

and

, the Euclidean normal form

of vector v is naturally derived. In the whole paper, network topology

, where

is a set of M directed arcs and

is adjacency matrix, the elements

in the matrix represent the degree of association between a node i and another node j, if

, then

, otherwise

. The degree matrix is defined as

. Furthermore, the arc source matrix of expansion block is defined as

, which contains

square blocks

. If arc

, then

, otherwise it is an empty set. Similarly, the extended block arc objective matrix

is defined, where

is not an empty set but

if and only if arc

points to node j. Then, the extended directional incidence matrix is defined as

, the undirected incidence matrix is defined as Gu = As + Ad, the directed Laplacian is denoted as

, and the undirected Laplacian is denoted as

.

2.2. Alternating Direction Method of Multipliers (ADMM)

ADMM is a powerful tool to solve structured optimization problems with two variables. These two variables are separable in the loss function and constrained by linear equality. By introducing Lagrange multiplier, the optimization problem with n variables and k constraints can be transformed into an unconstrained optimization problem with

variables.

It can be used to solve the unconstrained and separable convex optimization problem with fixed topology in a communication network

(1)

The problem can be rewritten as a standard bivariate form, by introducing the copy of global variable

, local variable

and auxiliary variable

at node i. Since the network is connected, the problem (1) is equivalent to the following bivariate form:

(2)

The kernel optimal solution satisfies

and

, where

is the global optimal solution of the problem (1). By concatenating variables to obtain

and

, and introducing the global function

to express

and

, the matrix form of problem (2) can be changed to

(3)

The augmented Lagrangian function of problem (3) is introduced

A penalty parameter

is a normal number, Lagrange multiplier

. Then, in the

iteration, the update of parameters is

(4)

When variables

and

are initialized,

can be eliminated and

can be replaced by a low dimensional dual variable

, so that (4) the variables will be updated as follows

(5)

(6)

The unconstrained and separable optimization problem in (1) can be solved through continuous iterations.

2.3. Stochastic Configuration Network (SCN)

The SCNrandomly assigns the hidden layer parameters within an adjustable interval and introduce a supervised mechanism to ensure its infinite approximation property. As an incremental learning algorithm, SCN builds a pool of candidate nodes to select the best node in each incremental learning process, which accelerates the convergence speed. Given N training set samples

, the input vector is

, and the corresponding output is

. The SCN model with

hidden layer nodes can be expressed as:

(7)

where

as activation function and

is the output weight. The residual error, that is, the difference between the actual observation value and the fit value can be expressed:

(8)

If

does not reach the target value, it generates a new

, and the output weight

is also recalculated as:

(9)

The incremental construction learning algorithm is an effective method to solve the network structure, starting from randomly generating the first node, and gradually adding nodes to the network. According to the general approximation property already proved in [14], given span(

) is dense in

and for any

where

when adding a new node, the new input weights and deviations are subject to the following inequalities:

(10)

where

,

,

. The optimization problem for the output weights can be obtained by the following equation:

(11)

where

and

.

3. Distributed Event-Triggered Learning for SCN

For a distributed learning algorithm, it will impose a consistency constraint on the consistency of each node, making the final solution effect close to that of the centralized processing approach. In the centralized SCN model, the output weights

can be obtained by the following equation [15]:

(12)

where

is the training error corresponding to the input vector and

regularization parameter. Bring the constraint conditions to (12) we can obtain

(13)

where the hidden layer output matrix is written as H and the target output matrix is denoted by T, i.e.,

(14)

SCN is placed in a distributed computing scenario where the training samples are to be written

and each agent

on the network topology has its own local dataset, the input vector can be denoted as

, and the corresponding output vector is denoted as

. We want to train all the training sets

using a distributed approach, then we can turn the problem (12) of solving for the global unknown output weight

into an optimization problem of the following form. A common constraint is imposed on this problem. Let

be a copy of

on node i. Problem (12) is written in a distributed form as:

(15)

By introducing

into formula (15), we can get

(16)

Each node i gets the output matrix

of the hidden layer based on the local dataset, and the target matrix corresponding to the training samples is denoted by

.

Here the derivation formula is used in an additive structure to perform the distribution. If a function is decomposable into a sum of local functions with separable variables, then each local function can be trained to obtain local separable variables by itself. In problem (16), since constraint

is imposed on the neighbors adjacent to the node and the objective function is additive and fully separable, the problem is solved in a distributed way.

To facilitate the demonstration, question (16) can be written as

(17)

where

(18)

To solve the distributed learning problem of SCN, we use the ADMM with the local loss function

(19)

Take the local loss function

into the above Equation (5), then in the

iteration, the update of variable

and

become

(20)

(21)

After derivation and solution, the ADMM method is used to solve the above convex consistency optimization problem, and the solution is changed from Equation (20) to

(22)

(23)

Next, we design an event-triggered communication mechanism to prevent the transmission of information about small variables. In other words, when the variable

to be updated is too close to

, if the state change of node i is small, it is not necessary to send all the new information to the neighbors. According to this principle, the trigger function is designed as

(24)

Whether the node i has an event for information communication is controlled by the following formula

(25)

where

is the threshold,

. The triggered error of the trigger function is

(26)

In the training process of algorithm operation, the observation Formula (22) can find that only the transmission variable

is needed for communication. Combined with the trigger function, the equation of event-triggered algorithm based on ADMM method can be written as

(27)

(28)

Furthermore, the matrix form of the algorithm (27) can be written as

(29)

(30)

where matrices

and

are degree matrix and adjacency matrix defined in communication network graph respectively. Each node computes the hidden layer matrix

and the target matrix

based on the local dataset. Node i continuously observes the state of output weights

to detect whether the event trigger condition

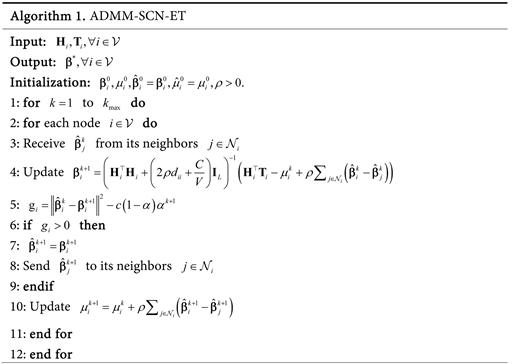

is satisfied. This event-triggered communication algorithm based on ADMM is named ADMM-SCN-ET. The algorithm is shown in Algorithm 1.

4. Numerical Verification

It is easy to understand that if the trigger function part is removed, that is, only the algorithm (22) is used, ADMM-SCN-ET will become a new algorithm ADMM-SCN. In this section, we will compare these two algorithms to prove that the newly proposed distributed improved algorithm can effectively reduce the amount of communication between network nodes.

4.1. Regression on Conhull Dataset

The data set is obtained from the real-valued function given by the following formula [16]:

(31)

We set the sample size of the dataset to 600, randomly selected in

, which is a uniform distribution. Similarly, a test dataset with a sample size of 600 is randomly generated in the range of

.

In the simulation experiment using dataset Conhull, the network topology of node

is shown in Figure 1(a). The regression fitting results of the test dataset using the algorithms ADMM-SCN-ET and ADMM-SCN are shown in Figure 1(b), where the parameter is

. In addition, the trigger parameter related to the event-triggered communication mechanism set by the algorithm ADMM-SCN-ET is

. By carefully observing the fitting effect of the peak data, we can see that the fitting result using ADMM-SCN-ET is slightly better than that using ADMM-SCN. This situation shows that the proposed event-triggered communication mechanism algorithm ensures the performance of the existing distributed learning algorithm. Figure 1(c) shows the root mean squared error (RMSE) value of regression output, which is used to measure the error between the predicted value and actual value. It can be seen from the figure that RMSE shows a trend of sharp decline first and

![]() (a)

(a) ![]() (b)

(b) ![]() (c)

(c)

Figure 1. Results of regression on Conhull dataset. (a) Communication network of four agents; (b) Test outputs of the ADMM-SCN-ET and ADMM-SCN; (c) RMSE of the ADMM-SCN-ET.

then slowly increasing and then slowly decreasing again with the final value RMSE = 0.008. And the RMSE of the ADMM-SCN algorithm with the same parameters is 0.017.

The trajectories of nodes

and

in the ADMM-SCN-ET algorithm are shown in Figure 2, in which the parameters are

. When the red line value becomes zero, it means that the node has carried out information communication. Figure 3 shows the communication time of each node. Figure 3(a) shows that all node events occur in the case of time trigger, that is, information communication is carried out between nodes in each iteration, Figure 3(b) shows the node communication time in the case of event trigger when parameters are

, the communication times of the four nodes are: 42 times for node 1, 40 times for node 2, 44 times for node 3 and 44 times for node 4. The calculation shows that the total communication times of ADMM-SCN-ET algorithm in this example is 21.21% of ADMM-SCN algorithm in the same communication network topology, saving 78.75% of communication resources.

4.2. Classification on 2Moons Dataset

The 2Moons dataset is an artificial dataset where the data points are divided into two clusters with distinct moon shapes [10]. The global training dataset is 300 points randomly selected from the uniform distribution

. The sample

![]()

Figure 2. Trajectories of

and

for each node.

![]() (a)

(a)![]() (b)

(b)

Figure 3. The communication time of eachagent. (a) In the case of time trigger, all nodes communicate every iteration; (b) In the case of event trigger, all nodes communicate when the trigger conditions are met.

size of the test dataset is 100, which is extracted from the regular spacing grid on

. The numerical simulation results are shown in the following figures.

The network diagram used in this data classification example is shown in Figure 4(a), where node

. Figure 4(b) shows the classification result of 2Moons dataset when the parameter is

. In the figure, circles are used to represent the training points of class 1, squares are used to represent the training points of class 2, and the classification boundaries are colored with blue and orange respectively. Figure 4(c) shows the confusion matrix of test dataset classification using the algorithm ADMM-SCN-ET. From the confusion matrix, it can be seen that the classification results of the algorithm are all correct, which proves that the distributed learning algorithm has a strong learning ability for data classification. Figure 4(d) shows the accuracy and RMSE output of ADMM-SCN-ET for classification training. The figure shows that when the hidden layer node of the neural network is greater than 50, the classification accuracy of the training is kept at 1, and RMSR continues to drop to the minimum, with the final value RMSE = 0.021. Under the same parameters, the RMSE of the ADMM-SCN algorithm is 0.017, which ensures the accuracy of the distributed learning algorithm.

The communication time of each node in this example is shown in Figure 5. Figure 5(a) shows the time trigger situation, and all node events occur, Figure 5(b) shows the event trigger situation, and the communication times of each node are: 18 times for node 1, 19 times for node 2, 20 times for node 3, 19 times

for node 4, 20 times for node 5, 19 times for node 6, 20 times for node 7, 22 times for node 8, 18 times for node 9, and 19 times for node 10. The total communication times trained by ADMM-SCN-ET algorithm is 19.4% of that trained by the ADMM-SCN algorithm under the same communication network topology, which saves 80.6% of communication resources.

4.3. Classification on MNIST Dataset

The MNIST dataset is a handwritten dataset initiated and organized by the National Institute of Standards and Technology. A total of 250 different person’s handwritten digital pictures are counted, 50% of which are high school students and 50% are from the staff of the Census Bureau. The dataset includes two parts:

![]() (a)

(a)![]() (b)

(b)

Figure 5. The communication time of each node. (a) In the case of time trigger, all nodes communicate every iteration; (b) In the case of event trigger, all nodes communicate when the trigger conditions are met.

60,000 training datasets and 10,000 test datasets. Each data unit includes a picture containing handwritten digits and a corresponding label. Each picture contains 28 × 28 pixels, and the corresponding label ranges from 0 to 9 representing handwritten digits from 0 to 9. As shown in Figure 6(a).

The network diagram used in this data classification example is shown in Figure 6(b), where node

. Figure 6(c) shows the confusion matrix of test dataset classification using the algorithm ADMM-SCN-ET when the parameter is

. The numbers 1 - 10 in the confusion matrix represent the handwritten numbers 0 - 9, respectively. From the results, it can be seen that the classification accuracy of the ADMM-SCN-ET algorithm test set is finally 89.6%. Figure 6(d) shows the accuracy and RMSE output of ADMM-SCN-ET for classification training. This figure shows that as the hidden layer nodes of the neural network gradually increase, the classification accuracy of the final training reaches 89.6%. Under the same parameters, the classification accuracy of the final training of the algorithm ADMM-SCN is 89.5%, and the RMSE value continues to decrease to the lowest point 0.546, the final RMSE value obtained by the ADMM-SCN algorithm of full communication under the same parameters is 0.548, which guarantees the accuracy of the distributed learning algorithm.

The communication time of each node in this example is shown in Figure 7. Figure 7(a) is the time-driven situation, all node events occur; Figure 7(b) is the communication moment when the event-driven communication mechanism is

used, the communication times of each node are: node 1 is 10 times, node 2 is 8 times, node 3 is 6 times, node 4 is 9 times, node 5 is 10 times, node 6 is 10 times, node 7 is 10 times, node 8 is 9 times, node 9 is 10 times, and node 10 is 10 times. The calculation can be obtained when the parameter is

and

. The total communication times trained by ADMM-SCN-ET algorithm is 9.2% of that trained by ADMM-SCN algorithm under the same communication network topology, which saves 90.8% of communication resources.

Therefore, the simulation results of these three examples show that the proposed distributed learning algorithm can effectively reduce the traffic of distributed learning algorithms in data regression and classification while ensuring the performance of existing distributed learning algorithms.

![]() (a)

(a)![]() (b)

(b)

Figure 7. The communication time of each node. (a) In the case of time trigger, all nodes communicate every iteration; (b) In the case of event trigger, all nodes communicate when the trigger conditions are met.

5. Conclusion

This paper designs a distributed algorithm based on an event-triggered communication mechanism called ADMM-SCN-ET. Combined with the ADMM for solving the convex consensus optimization problem, we propose a communication-censored distributed learning algorithm and use it to solve the optimal output weight problem of neural networks. The algorithm uses an event-triggered communication mechanism to prevent the transmission of variable information with a small change of node state, and reduces unnecessary information communication between nodes. Finally, three datasets are used to verify the effectiveness of the proposed algorithm. The simulation results show that the algorithm proposed in this paper can effectively reduce the communication traffic of distributed learning algorithms while ensuring the performance of existing distributed learning algorithms in data regression and classification.

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China (No. 62166013), the Natural Science Foundation of Guangxi (No. 2022GXNSFAA035499) and the Foundation of Guilin University of Technology (No. GLUTQD2007029).