Improved C-V Level Set Algorithm and its Application in Video Segmentation ()

1. Introduction

Image segmentation is intended to separate objects from the image, and the corresponding border is gained at the same time. In recent years, Researchers in the theory and technology of image segmentation has achieved fruitful research results, and active contours extraction is one of the important research results. The research of active contour can be divided into two groups: parameterized active contour based on Snake model which was proposed by Kass [1]; geometric active contour based on Level set methods which were first introduced by Osher and Sethian [2] for capturing moving fronts. Level set methods overcome the weaknesses of other algorithms. Its segmentation results are not sensitive to the initial position and the topology adaptability is strong. Level set method is a powerful tool of curve evolution, which can effectively deal with cusp and has a strong ability to separate complex structure of objects.

Chan-Vese level set model (C-V model) proposed by Chan and Vese [3] was integrated with the ideal of level set and Mumford-Shah model [4]. Being different from the traditional model based on the deformation parameters and geometry active contour model, this model does not rely on the gradient of image when extracting the boundary of objects, so the images with gradient edge meaningless and ambiguous verge can get a good segmentation. However, it has a weakness like general level set model, amount of computation. At this stage, works on C-V model mainly concentrate on revising its model, such as J. Li [5], etc, through improved C-V model, upgraded the capture of outline from local to the whole image; Y. y. Gong [6], etc, by amending the C-V model, multi-objects can be extracted based on single level set, and so on. In this paper, we propose a improved C-V model, which can greatly improve the efficiency of segmentation, and can be applied to real-time video image segmentation.

2. Description of C-V Model

C-V model, which is integrated with the thinking of level set and Mumford-Shah model, does not take advantage of gradient information, but minimizes the energy function to evolve curve [3]. Assume that image  is formed by two regions: objects (Co) and background (Cb), which is separated by the evolving curve C in

is formed by two regions: objects (Co) and background (Cb), which is separated by the evolving curve C in . The constants

. The constants , depending on C, are the averages of image I inside C (Co) and outside C (Cb) respectively. Chan and Vese introduced the energy function

, depending on C, are the averages of image I inside C (Co) and outside C (Cb) respectively. Chan and Vese introduced the energy function , defined by

, defined by

(1)

(1)

where  is the length of the cure C, and

is the length of the cure C, and  is the area of Co ,

is the area of Co ,

are fixed parameters. Therefore the energy function is minimized if the curve is on the boundary of the object. Optimization (1), we can get the ultimate location of segmentation line C, as well as the unknown

are fixed parameters. Therefore the energy function is minimized if the curve is on the boundary of the object. Optimization (1), we can get the ultimate location of segmentation line C, as well as the unknown .

.

(2)

(2)

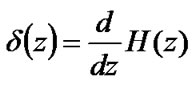

Using the Heaviside function , and the one-dimensional Dirac measure

, and the one-dimensional Dirac measure , and defined, respectively, by

, and defined, respectively, by

,

, (3)

(3)

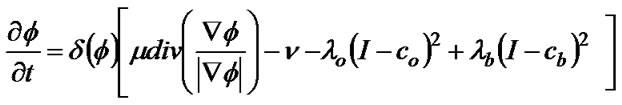

Partial differential equations, gotten by Chan and Vese using Euler-Lagrange method, are as follows: (4)-(7)

(4)

(4)

(5)

(5)

(6)

(6)

(7)

(7)

(8)

(8)

In the numerical calculations, regularizing Function (8) is used to replace  respectively. So that the gradient flow Equation (6) roles in all of the level set, and we can automatically monitor the empty goal with the internal region, and make the overall energy function to the minimum.

respectively. So that the gradient flow Equation (6) roles in all of the level set, and we can automatically monitor the empty goal with the internal region, and make the overall energy function to the minimum.

Let’s disperse the equation in , use a finite differences implicit scheme. Recall first the usual notations: let

, use a finite differences implicit scheme. Recall first the usual notations: let  be the space step,

be the space step,  be the time step, and

be the time step, and  be the grid points, where

be the grid points, where . Let

. Let  be an approximation of

be an approximation of . Knowing

. Knowing , we can get and

, we can get and using (4) and (5). The finite differences are

using (4) and (5). The finite differences are

Chan and Vese compute

Chan and Vese compute  through (11).

through (11).

(9)

(9)

(10)

(10)

(11)

(11)

From the Equation (6), can be seen, the definition of partial differential equations involving image function  is domain-wide map data, and the definition of other two unknown

is domain-wide map data, and the definition of other two unknown  is also image definition of the region, with the overall characteristics. Hence, updating level set function is in the entire defined region, the computation is large [3].

is also image definition of the region, with the overall characteristics. Hence, updating level set function is in the entire defined region, the computation is large [3].

3. Improved C-V Model

From the above analysis, we know that C-V method has a grate calculation. If we can effectively reduce the amount of computation, C-V method can be more widely applied. In traditional level set methods, it is necessary to initialize the level set function  as a signed distance function

as a signed distance function . Curve C divides the plane into internal and external regions.

. Curve C divides the plane into internal and external regions.  is the distance from point (x, y) to curve C. Generally, the distance of internal and external points are negative and positive respectively and signed distance function needs to be re-initialized. C-V method generally defines the symbol distance function (SDF) as a cone, with particularly complex calculation.

is the distance from point (x, y) to curve C. Generally, the distance of internal and external points are negative and positive respectively and signed distance function needs to be re-initialized. C-V method generally defines the symbol distance function (SDF) as a cone, with particularly complex calculation.

Lie [8] demonstrates that the presence of signed distance function is not inevitable. Lie imposed a binary level set model. We will introduce this idea into C-V method. Define radius of the closed curve C as infinite, C represents a straight line in plane , which will be divided into upper regional

, which will be divided into upper regional  and lower regional

and lower regional . Initialization function

. Initialization function  is defined as:

is defined as:

(12)

(12)

So first level set evolution, the curve of the internal and external simplified curve of the upper and lower regions, the calculation of  are very simple, The calculation of difference operator is very simple too, as only points on the boundary of upper and lower region are non-zero constant, and the remaining places are zero value; The initialization level set function is fixed constants, as well as

are very simple, The calculation of difference operator is very simple too, as only points on the boundary of upper and lower region are non-zero constant, and the remaining places are zero value; The initialization level set function is fixed constants, as well as . In a linear mesh C at the point, follow the Reference [7] approach, iterative Formula (9) can be transformed as follows:

. In a linear mesh C at the point, follow the Reference [7] approach, iterative Formula (9) can be transformed as follows:

(13)

Obviously, compared with the traditional C-V method, the calculation of our method is much smaller in the first level set iterative.

In order to ensure the level set method not departure from SDF, the time step must be very small, usually 0.1 s, which increasing the evolution of time. Since the existence of SDF is not inevitable, we appropriately increase the time step. Using larger time step can speed up the evolution, but may cause error in the boundary location if the time step is chosen too large. There is a tradeoff between choosing larger time step and accuracy in boundary location. Usually, we use  ≤ 10.0 for the most images.

≤ 10.0 for the most images.

We list out some of the experimental results in the following paper. In our numerical experiments, we generally choose the parameters as follows: ,

,  =0,

=0,  (the space step),

(the space step),  =2 (the time step),

=2 (the time step), .

.

In the first experiment, we chose the test image cavern. Jpg (Figure 1(a)), whose size is 200 × 200. The region of

Figure 1. The binary image.

Figure 2. The noisy image.

interest is specified by the white box. It needs 15 iterative to achieve the ideal state of division when the level set function is initialized as

, and time-consuming is 1.578 s. Figures 1(b),1(c) show the traditional method of initialization(CV method) and the final result of division. With our improved method (ICV method),

, and time-consuming is 1.578 s. Figures 1(b),1(c) show the traditional method of initialization(CV method) and the final result of division. With our improved method (ICV method), , we can get the desired effect of split after one iterative and the time-consuming is 0.016s (Figures 1(e),1(f)). To compare the segmentation results of the two methods in more detail, we show a zoomed version of the results in Figure (d) and Figure (g) for a region delineated by the white box in Figure (a). It’s clear to see that we can get a smoother curve split with our approach.

, we can get the desired effect of split after one iterative and the time-consuming is 0.016s (Figures 1(e),1(f)). To compare the segmentation results of the two methods in more detail, we show a zoomed version of the results in Figure (d) and Figure (g) for a region delineated by the white box in Figure (a). It’s clear to see that we can get a smoother curve split with our approach.

In Figure 2, we show how our arithmetic and traditional C-V methods work on a noisy synthetic image. The region of interest is specified by the white box (Figure 2(a)). Bose of the two methods can automatically detect the objects. If we use traditional C-V model initialization method, , the outline of objectives are separated after 20 times of iterative, but the right-angle region isn’t well separated(Figures 2(c), 2(e)). After 200 iterative, the situation is improved. However, it spends 20 times iteration to achieve a perfect result with our method. The rectangular region is also divided perfect (Figures 2(g), 2(h)).

, the outline of objectives are separated after 20 times of iterative, but the right-angle region isn’t well separated(Figures 2(c), 2(e)). After 200 iterative, the situation is improved. However, it spends 20 times iteration to achieve a perfect result with our method. The rectangular region is also divided perfect (Figures 2(g), 2(h)).

The test results of cameraman.tif are showed in Figure 3. Size of the picture is 256×256. In Figure 3(a), the level set function was initialized as signed distance function: . Figure 3(b) and Figure 3(c) are results of 10 iterations and 400 iterations respectively. In Figure 5(d) level set function was initialized as a linear curve. We can get the photographer’s outline after only one time of iteration. But there is a lot of noise divisions on the lake, noises would gradually reduce after multiple iterations. Figure 3(e) is the result of 10 iterations. After 150 iterations, we get a stable state. We can see that the noise has been significantly reduced, but not completely filtered out.

. Figure 3(b) and Figure 3(c) are results of 10 iterations and 400 iterations respectively. In Figure 5(d) level set function was initialized as a linear curve. We can get the photographer’s outline after only one time of iteration. But there is a lot of noise divisions on the lake, noises would gradually reduce after multiple iterations. Figure 3(e) is the result of 10 iterations. After 150 iterations, we get a stable state. We can see that the noise has been significantly reduced, but not completely filtered out.

4. Video Image Segmentation Based on ICV

C-V model is the key to resolve the two issues separate, and categories of backgrounds and objectives in the video images are unknown. C-V model can not be directly applied to the whole map. So we get the movement area by analyzing the characteristics of H.264 codec first of all, and then the improved C-V model is applied to the movement region which was detected. This article focuses on the moving target in the same scene, the video image processing and experimental data are based on a single static camera, so the background image is static. According the principles of H.264 video codec, motion vector of macro block as a background is usually zero value, and only motion vector of macro block in the movement region is non-zero, so we can get the moving region through the distribution of motion vector.

When inter coding being chosen, the coding frame need to use the frame before (reference frame) for movement searching, and then motion vector of each block is get. We illustrate in Figure 4 the above remarks. In Figure 4(a), the large box represents 16 × 16 macro block, and the small box represents 16 × 8 or 8 × 16 block, black line is motion vector. By the vector distribu

Figure 3. The complex background image.

Figure 4. Moving target separated.

tion map, we can frame the regional campaign, as shown in Figure 4(b).

Figure 4(c) is a real-time video segmentation results map, target detection of the system and tracking algorithm and real-time are tested respectively. We choose the parameters as follows: ,

, ,

,

,

, 1,

1, =2,

=2, 1,

1, . The regional campaign is extracted, and the moving target is tracked very well. In Figure 4(c), however, we can see that the outline of some of the goal in frame is not very ideal, sometimes the background is also included. It is because the delimitation of regional campaign is mainly based on motion estimation which processed by video coding. If light is changed, or other factors, the reference block selecting is inaccurate in motion estimation, resulting in the coding block is mistakenly believed as campaign block, so the error division is produced. The brightness weight of hands is very close to the brightness weight of the wall. As target tracking algorithm uses the brightness information of pixel, when the goal and background have similar brightness information, the algorithm will get the wrong track. H.264 codec set the frame rate of 25 fps. The size of the regional movement we get by the motion vector information of decoder is an important factor of computing time, which affect the moving target detection and tracking module. Larger the regional campaign is, more time the target detection and tracking module need. We have real-time measured the running time of the module, which is in 15-19 ms range. When the ICV model is evolved in the whole frame, the time-consuming is not more than 20 ms, fully meet the real-time requirements.

. The regional campaign is extracted, and the moving target is tracked very well. In Figure 4(c), however, we can see that the outline of some of the goal in frame is not very ideal, sometimes the background is also included. It is because the delimitation of regional campaign is mainly based on motion estimation which processed by video coding. If light is changed, or other factors, the reference block selecting is inaccurate in motion estimation, resulting in the coding block is mistakenly believed as campaign block, so the error division is produced. The brightness weight of hands is very close to the brightness weight of the wall. As target tracking algorithm uses the brightness information of pixel, when the goal and background have similar brightness information, the algorithm will get the wrong track. H.264 codec set the frame rate of 25 fps. The size of the regional movement we get by the motion vector information of decoder is an important factor of computing time, which affect the moving target detection and tracking module. Larger the regional campaign is, more time the target detection and tracking module need. We have real-time measured the running time of the module, which is in 15-19 ms range. When the ICV model is evolved in the whole frame, the time-consuming is not more than 20 ms, fully meet the real-time requirements.

5. Conclusions

In this paper, we have improved the image segmentation efficiency based on the C-V model from initialization and algorithms simplifying. The improved C-V model guarantees the division in effect while greatly improves the efficiency of the division. We apply it to the real-time H.264 video codec system. The experimental results show that, for the image of simple background, it can partition the outline of objects with dramatic speed and high efficiency segmentation, using the methods proposed in this paper. However, for the complex background image, the outline of objects can be accurately divided, but there will be some regional background mistakenly separated. How to filter this noise fast is the next step that we need to improve. This article improves the C-V method to separate video images, which can be used to real-time detect and track the moving targets.

[1] M. Kass, A. Witkin, and D. Terzopoulos, “Snakes: Active contour models [J],” International Journal of Computer Vision, Vol. 1, No. 4, pp. 321-331, 1987.

[2] S. Osher and J. A. Sethian, “Fronts propagating with curvature dependent speed: Algorithms based on hamilton-jacobi formulations [J],” Journal of Computational Physics, Vol. 79, pp. 12-49. 1988.

[3] F. T. Chan and L. Vese, “Active contours without edges [J],” IEEE Transaction Image Processing, Vol. 10, No. 2, pp. 266-277, 2001.

[4] D. Mumford and J. Shah, “Optimal approximations by piecewise smooth functions and associated variational problems [J],” Communication of Pure Applied Mathematics, Vol. 42, No. 5, pp. 577-685, 1989.

[5] J. Li, X. Yang, and P. F. Shi, “A fast level set approach to image segmentation based on mumford-shah model [J],” Chinese Journal of Computers, Vol. 25, No. 11, pp. 1175 -1183. 2002.

[6] Y. y. Gong, X. n. Luo, H. Huang, G. J. Liao, and Y. Zhang, “Multi-objects extracted based on single level set [J],” Chinese Journal of Computers, Vol. 30, No. 1, pp. 120-128, 2007.

[7] J. s. Xiao, H. Feng, and B. S. Yi, “Finite difference method for semilinear parabolic differential inclusions [J],” Journal of Wuhan University, Natural Sciences Edition, Vol. 52, No. 3, pp. 262-266, 2006.

[8] J. Lie, M. Lysaker, and X. C. Tai, “A binary level set model and some applications to mumford-shah image segmentation,” IEEE Transactions on Image Processing, Vol. 15, No. 5, pp. 1171-1181, 2006.