An Empirical Analysis of the Training Program Characteristics on Training Program Effectiveness: A Case Study with Reference to International Agricultural Research Institute, Hyderabad

Received 4 June 2016; accepted 2 July 2016; published 5 July 2016

1. Introduction

Evaluation is the systematic assessment of the worth or merit of some object or aim. Put simply, it is the act of judging whether the activity being evaluated is worthwhile in terms of set criteria. Rapid advances in science and technology have pushed the agricultural research sector to look for new methods of training in crop improvement. Training needs must be redefined to cope with these changes and to keep pace with scientific development. Most studies on training evaluation have focussed on the impact of training on the efficiency and productivity of employees. Very limited work has been reported on the core aspect of training: its content and methodology. This study evaluates training program characteristics using six independent variables and measures their impact on training program efficiency.

Training evaluation is an integral part of most training programs. Evaluation tools and methodologies help to determine the effectiveness of instructional interventions. Despite its importance, there is evidence that evaluations of training programs are often inconsistent or missing [1] - [4]. Possible explanations for inadequate evaluations include insufficient budget, insufficient time, lack of expertise, blind trust in training solutions, and lack of methods and tools [5]. Evaluating training is complex, because assessing training interventions with regard to learning, transfer, and organizational impact involves a number of complicating factors. These factors are associated with dynamic and rapid changes in science and technology, which compel organizations such as international agricultural research institutes to make frequent changes to the structure, instructional methods and content of the trainings they offer [6]. The situation is more complex still where training cannot yield any substantial change because organizational performance is constrained by the structure of the organization, local and national priorities, or legislation and policies [7].

Several researchers have highlighted the effect of training on job performance. According to Burke and Day (1986) [8], training positively influences the performance of managers. Bartel (1994) [9] found that investment in training increases productivity. McIvor (1997) [10] argues that it influences organizational commitment, participant knowledge and organization-based self-esteem. Hamblin (1970) [11] defined evaluation of training as any attempt to obtain information (feedback) on the effects of a training programme and to assess the value of training in the light of that information for improving further training.

There are three main reasons for evaluating training. 1) To identify ways to improve the training and its content development. Gathering feedback and data on what participants thought of the training, how they performed in the assessments that were part of it, and how they were subsequently able to transfer it to the workplace makes it possible to identify where improvements can be made. 2) To determine whether the training is aligned with its objectives. This means checking whether the training delivered is the right one and whether the existing facilities and personnel have the right skills and knowledge to deliver it. 3) To know the value that the training adds to the participants’ knowledge.

Considering these theories, aspects and complexities, the present study evaluated eight training programs spread over three years, using six independent variables (listed in Section 4.1) to measure the effectiveness of the training program.

2. Review of Literature

Goldstein (1986) [12] defines evaluation as the systematic collection of descriptive and judgemental information necessary to make effective training decisions related to the selection, adoption, value and modification of various instructional activities. Hamblin (1974) [13] defined evaluation of training as any attempt to obtain information on the effects of a training programme and to assess the value of the training in the light of that information. He described evaluation as a training aid and identified five levels: 1) reaction; 2) learning; 3) job behaviour; 4) organization; and 5) ultimate value. Williams (1976) [14] defines evaluation as the assessment of value or worth. He observes that value is a rather vague concept, and that this has contributed to the different interpretations of the term evaluation. Rackham (1973) [15] offers perhaps the most amusing and least academic definition of evaluation, referring to it as a form of training archaeology where one is obsessively digging up the past in a manner unrelated to the future. Stufflebeam (2003) [16] defined evaluation as a study designed and conducted to assist some audience to assess an object’s merit and worth. He proposed the CIPP method of evaluation (Context, Input, Process and Product), which he presented at the 2003 Annual Conference of the Oregon Program Evaluators Network (OPEN).

Holli and Calabrese (1998) [17] defined evaluation as the comparison of an observed value or quality to a standard or criterion of comparison. Evaluation is the process of forming value judgments about the quality of programmes, products, and goals. Boulmetis and Dutwin (2000) [18] defined evaluation as the systematic process of collecting and analysing data in order to determine whether and to what degree objectives were or are being achieved. Saks studied the relationship between training and outcomes for newcomers. A sample of 152 newly recruited entry-level professionals completed a questionnaire after the first six months of socialization. Supervisor ratings of job performance were obtained four months later. The results indicated that the amount of training received by newcomers was positively related to job satisfaction, commitment, ability to cope and several measures of job performance. Newcomers’ perceptions of training were positively related to job satisfaction [19].

Training evaluation is defined as the systematic process of collecting data to determine if training is effective [20]. The commonly used approaches to training evaluation have their roots in systematic approaches to the design of training. They are typified by the instructional system development (ISD) methodologies, which emerged in the USA in the 1950s and 1960s and are represented in the works of Gagné and Briggs (1974), Goldstein (1993), and Mager (1962) [20] - [22]. Evaluation is traditionally represented as the final stage in a systematic approach, with the purpose being to improve interventions (formative evaluation) or to make a judgment about worth and effectiveness (summative evaluation) [23]. More recent ISD models incorporate evaluation throughout the process [24]. Neeraj et al. (2014) [25], in a case study on the evaluation of employee training and its effectiveness, presented their findings using descriptive statistics and Likert scales with hypothesis testing.

Madgy (1999) evaluated sales force training effectiveness empirically using statistical methods [26]. Veermani and Premila Seth (1985) defined evaluation as an attempt to obtain information on the planning of training, the conduct of the training and feedback on the application of learning after the training, so as to assess the value of the training. Evaluation findings of this kind may be used for a variety of purposes [27].

Kirkpatrick (1976) developed a framework of four logical levels for training evaluation: reaction, learning, behaviour and results. Most training programs evaluate the reaction and learning levels; the other two levels, behaviour and results, are often not evaluated because of their complexity. Some researchers argue that training should result in some form of behaviour change [28].

Phillips (1999) stated the Kirkpatrick Model was probably the most well-known framework for classification of areas of evaluation. This was confirmed in 1997 when the American Society for Training and Development (ASTD) assessed the nationwide prevalence of the importance of measurement and evaluation to human resources department (HRD) executives by surveying a panel of 300 HRD executives from a variety of types of U.S. organizations. Survey results indicated the majority (81%) of HRD executives attached some level of importance to evaluation and over half (67%) used the Kirkpatrick Model. The most frequently reported challenge was determining the impact of the training (ASTD, 1997) [29] . Lookatch (1991) and ASTD reported that only one in ten organizations attempted to gather any results-based evaluation [30] .

Muhammad Zahid Iqbal et al. (2011) studied the relationship between training characteristics and formative evaluation to signify the use of formative training evaluation. The study revealed that seven training characteristics explained 59% and 61% of the variance in reaction and learning respectively, indicating that all of them except training contents had a positive impact on reaction and learning [31].

Pilar Pineda (2010) presented an evaluation model, successfully applied in the Spanish context, that integrates all training dimensions and effects and acts as a global tool for organizations. The model analyses satisfaction, learning, pedagogical aspects, transfer, impact and profitability of training [32].

In summary, the literature suggests a strong relationship between training and its effectiveness in improving participants’ knowledge, and there is a need to confirm this with empirical support. The purpose of the present study is to explore the relationship between the six independent variables and the dependent variable, the training participants’ perceived efficiency in terms of knowledge gained through the training. The present study elaborates and extends previous research by measuring participants’ improved effectiveness using descriptive statistics, inferential statistics and multiple regression at an International Agricultural Research Institute where the training is conducted. The hypotheses in this study specifically address the relationship between training as a whole and the improved effectiveness of the training course participant.

3. Objectives and Hypotheses

Research Question

Is there any relationship between the overall training program methodology and participants’ effectiveness?

With the above background this study has the following objectives:

・ To determine the Effectiveness of a training.

・ To evaluate whether the objectives were achieved.

・ To find out if the provided training course characteristics met the participant needs.

・ To find out how the trainer did and what he or she could improve on.

Based on the identified problem, research question and the objectives the following hypotheses were formed:

H1: Training participants perceive there are some mean differences in training program effectiveness due to Lectures.

H2: Training participants perceive there are some mean differences in training program effectiveness due to Practical.

H3: Training participants perceive there are some mean differences in training program effectiveness due to Demos.

H4: Training participants perceive there are some mean differences in training program effectiveness due to Field Visits.

H5: Training participants perceive there are some mean differences in training program effectiveness due to Methodology.

H6: Training participants perceive there are some mean differences in training program effectiveness due to Instructor.

H7: Training participants perceive there are some mean differences in training program effectiveness due to six said independent variables.

The quality of the needs assessment might increase training program effectiveness and delivery [33]. Conducting a needs assessment is fundamental to the success of a training program. However, even a perfect training needs assessment does not guarantee training effectiveness [34]. We therefore focussed on the six independent variables indicated above to measure the dependent variable. The nature of the training program is also hypothesized to have a relationship with perceptions of training effectiveness [35]. The trainees’ prerequisites, content, delivery methods and evaluation need to be considered, and it can be hypothesised that customized training programs increase perceptions of training program delivery (Moore et al., 2003) [36].

4. Methodology

4.1. Theoretical Framework

The dependent variable in this study is Effectiveness of the training program, and the six independent variables are Lectures, Practical, Demos, Field Visit, Methodology and Instructor (Figure 1).

4.2. Data Collection

Sample Size: The research sample comprises 200 participants who attended the training programs held over three years (2008-2010); they came from across India and from some African countries. The participants are diverse and represent CSIR Research Centres, State Agricultural Universities, private-sector and small and medium seed companies, research foundations, and governmental organizations/institutes of India and Sub-Saharan African countries. The demography of the participants is provided in Table 1 and Table 2.


Table 1. Demography of research sample.

4.3. Research Instrument

The research instrument used for the survey was a structured, undisguised questionnaire, the main source of primary data. Secondary data were collected from published books, web sites and records pertaining to the topic. The training evaluation questionnaire was divided into two sections. Section I collected background information and personal details of the respondent. Section II collected information on the six independent variables and the dependent variable, training program effectiveness. This part contains 53 questions related to the topic-specific independent dimensions (Lecture, Practical, Demo, Field Visit, Methodology, Instructor) and the dependent variable Efficiency. Respondents were asked to choose the most appropriate “top-of-the-mind” response for each statement based on their post-training judgment.

4.4. Data Analysis

4.4.1. Methods of Data Analysis

In our empirical investigation we applied statistical techniques to analyse the data and draw inductive inferences from it. To ensure data integrity, the authors carried out the necessary analyses using relevant methods. Descriptive statistics were used to summarise the data from the survey questionnaire, and inferential statistics were employed to test the hypotheses. Central tendency was measured with means, and dispersion with variance and standard deviation.
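As an illustration of the descriptive measures named above, the following is a minimal sketch in Python (the study itself used SAS). The function name `describe` and the ratings are hypothetical and are not the study's data.

```python
import math
import statistics


def describe(scores):
    """Return n, mean, sample standard deviation and standard error of the mean."""
    n = len(scores)
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores)   # sample SD, n - 1 in the denominator
    se = sd / math.sqrt(n)          # standard error of the mean
    return {"n": n, "mean": mean, "sd": sd, "se": se}


# Hypothetical 1-5 Likert ratings for one dimension (illustrative only).
lecture_scores = [4, 3, 5, 4, 4, 2, 5, 3, 4, 4]
summary = describe(lecture_scores)
print(summary)
```

A small standard error relative to the mean is what allows a sample mean to be read as a reasonable estimate of the population mean.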

Reliability methods: To measure the internal consistency reliability of our research instrument (the survey questionnaire), and to check that different items within the instrument give similar and consistent results, we used the Spearman-Brown split-half reliability statistic, in which the items are randomly divided into two groups. After the questionnaire was administered to a group of people, the total score for each half was calculated and the correlation between the two total scores was estimated. To further confirm the reliability of the instrument we used Cronbach’s alpha, which is mathematically equivalent to the average of all possible split-half estimates [37]. The Statistical Analysis System (SAS) was used to compute measures of central tendency, measures of variability, reliability statistics, correlations and parametric tests, and to carry out the multiple regression analysis that predicts the dependent variable, training program effectiveness, from the independent variables [38].
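The two reliability statistics can be sketched as follows. This is an illustrative Python version under stated assumptions, not the SAS procedure used in the study: the helper names are hypothetical, the split is a deterministic odd-even split rather than a random one, and the response matrix is invented for the example.

```python
import statistics


def cronbach_alpha(rows):
    """Cronbach's alpha; rows is a list of respondents, each a list of item scores."""
    k = len(rows[0])
    item_vars = [statistics.variance([r[j] for r in rows]) for j in range(k)]
    total_var = statistics.variance([sum(r) for r in rows])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length lists."""
    mx, my = statistics.mean(x), statistics.mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def split_half_spearman_brown(rows):
    """Odd-even split; returns (half-test correlation, stepped-up reliability)."""
    odd = [sum(r[0::2]) for r in rows]    # items 1, 3, 5, ...
    even = [sum(r[1::2]) for r in rows]   # items 2, 4, 6, ...
    r_half = pearson_r(odd, even)
    return r_half, 2 * r_half / (1 + r_half)


# Hypothetical responses: 6 respondents x 4 Likert items (illustrative only).
rows = [
    [4, 5, 4, 5],
    [3, 3, 2, 3],
    [5, 5, 4, 4],
    [2, 3, 2, 2],
    [4, 4, 5, 4],
    [3, 2, 3, 3],
]
alpha = cronbach_alpha(rows)
r_half, r_sb = split_half_spearman_brown(rows)
print(f"alpha={alpha:.3f}  half r={r_half:.3f}  Spearman-Brown={r_sb:.3f}")
```

The Spearman-Brown step is needed because the raw half-test correlation describes an instrument half as long as the one actually administered.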

4.4.2. Reliability Test of the Questionnaire

A Likert-type scale with items 1 - 5 was used for the independent dimensions (1 = Not Enough, 2 = Just Enough, 3 = Enough, 4 = Very Much, 5 = Too Much) and for the dependent variable (1 = Poor, 2 = Fair, 3 = Good, 4 = Very Good, 5 = Excellent). Cronbach’s alpha was calculated to assess the internal consistency of the instrument by determining how all items in the instrument related to the total instrument [39] [40]. The instrument was tested on a pilot group of 40 training participants, who were asked to answer 57 questions on a 5-point Likert scale. Analysis of the pilot responses in SAS gave a Cronbach’s alpha of 0.80, suggesting strong internal consistency. Two months later, the same instrument was used to collect responses from 200 participants. Four of the 57 questions were dropped because of unsatisfactory Cronbach’s alpha values. The overall Cronbach’s alpha for the remaining 53 questions was 0.88; the increase was an effect of dropping the questions with low alpha values. The reliability values for the individual variables ranged from 0.68 to 0.82 (Table 3). As a second reliability measure, the Spearman-Brown split-half reliability coefficient was computed for the scale items. The overall Spearman-Brown split-half reliability was 0.94, suggesting strong reliability of the instrument. Table 3 presents the computed Cronbach’s alpha statistic, the Spearman-Brown split-half (odd-even) correlation and the Spearman-Brown prophecy values.
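For reference, the standard forms of the two statistics are given below, with $k$ items, item variances $\sigma_i^2$, total-score variance $\sigma_T^2$ and half-test correlation $r_{hh}$ (this notation is ours). The worked step assumes that the reported 0.94 is the stepped-up (prophecy) value, which the text does not state explicitly; under that assumption the implied correlation between the two halves is about 0.887.

```latex
\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_{i=1}^{k}\sigma_i^2}{\sigma_T^2}\right),
\qquad
r_{SB} = \frac{2\,r_{hh}}{1 + r_{hh}}
\;\Longrightarrow\;
r_{hh} = \frac{r_{SB}}{2 - r_{SB}} = \frac{0.94}{2 - 0.94} = \frac{0.94}{1.06} \approx 0.887
```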

5. Results

The general objective of this research was to assess the effect of the independent variables Lecture, Practical, Demos, Field Visit, Methodology and Instructor on the dependent variable Effectiveness, and the primary data gathered through the questionnaire were analysed for this purpose. The mean, standard deviation, standard error and percentages were calculated for the variables from the data collected from the respondents (n = 200). The estimated standard errors for all the dimensions are relatively small, indicating that each sample mean is relatively close to the true mean of the overall population (Table 4). However, the results indicate that respondents differed in their opinions on some characteristics of the training program.

Table 3. Cronbach’s alpha values for variables used in this study.

Correlation analysis was carried out to measure the relationships between the variables (Table 5). Lectures are positively correlated with Demos and Methodology (r = 0.31, p < 0.01); Practical is negatively correlated with Methodology (r = −0.37, p < 0.01); Demos and Field Visits are strongly positively correlated (r = 0.78, p < 0.01); Instructor and Efficiency are negatively correlated (r = −0.27, p < 0.01); and Methodology is positively correlated with Efficiency (r = 0.47, p < 0.01). Overall the correlations are moderate, and with the available data we cannot conclude from them that the differences in means are statistically significant.
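The significance of a correlation coefficient can be checked from r and the sample size alone. The sketch below converts each coefficient quoted above into a t statistic with n − 2 degrees of freedom. It assumes that every correlation is based on all 200 respondents and uses an approximate two-tailed 1% critical value of 2.60 for 198 degrees of freedom; both are assumptions of this example, and the function name is hypothetical.

```python
import math


def t_from_r(r, n):
    """t statistic for H0: rho = 0, with n - 2 degrees of freedom."""
    return r * math.sqrt((n - 2) / (1 - r * r))


N = 200            # assumption: all 200 respondents enter every correlation
T_CRIT_01 = 2.60   # approximate two-tailed 1% critical value, df = 198

# Coefficients as quoted in the text.
reported = {
    "Lectures vs Demos/Methodology": 0.31,
    "Practical vs Methodology": -0.37,
    "Demos vs Field Visit": 0.78,
    "Instructor vs Efficiency": -0.27,
    "Methodology vs Efficiency": 0.47,
}

results = {}
for pair, r in reported.items():
    t = t_from_r(r, N)
    results[pair] = {
        "t": t,
        "shared_variance": r * r,            # proportion of variance in common
        "significant": abs(t) > T_CRIT_01,
    }
    print(f"{pair}: r={r:+.2f}  t={t:+.2f}  r^2={r * r:.2f}")
```

The squared coefficient is a useful companion to the significance test: with 200 respondents even a modest r is significant, while r² shows how much variance the two dimensions actually share.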

A parametric t-test for the means of two independent samples was carried out to determine whether the differences in means between each independent variable and the dependent variable are statistically significant; the results are presented in Table 6.
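A minimal sketch of an equal-variance (pooled) two-sample t-test is given below for illustration. It is not the SAS procedure used in the study; the function name and the two score lists are hypothetical, and the critical value is passed in by the caller (2.145 is the two-tailed 5% value for 14 degrees of freedom).

```python
import math
import statistics


def pooled_t_test(a, b, t_crit):
    """Equal-variance two-sample t-test.

    Returns the mean difference, t statistic, degrees of freedom and the
    confidence interval implied by the supplied critical value.
    """
    na, nb = len(a), len(b)
    diff = statistics.mean(a) - statistics.mean(b)
    df = na + nb - 2
    pooled_var = ((na - 1) * statistics.variance(a)
                  + (nb - 1) * statistics.variance(b)) / df
    se = math.sqrt(pooled_var * (1 / na + 1 / nb))
    return {
        "diff": diff,
        "t": diff / se,
        "df": df,
        "ci": (diff - t_crit * se, diff + t_crit * se),
    }


# Hypothetical 1-5 ratings (illustrative only, not the study's data).
dimension_scores = [3, 4, 3, 2, 4, 3, 3, 4]
efficiency_scores = [4, 5, 4, 4, 5, 3, 4, 5]
result = pooled_t_test(dimension_scores, efficiency_scores, t_crit=2.145)
print(result)
```

The difference is judged significant at the chosen level when the absolute t statistic exceeds the critical value or, equivalently, when the confidence interval does not contain zero.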


Table 4. Mean, standard deviation and standard error values of the primary data.


Table 5. Correlation among the study dimensions.

**Correlation is significant at prob < 0.01; *Significant at prob < 0.05; Source: Survey data.


Table 6. Independent two-sample t-test analysis with difference of means (independent variables vs dependent variable efficiency).

**Significance p < 0.001.

5.1. Efficiency Differences Due to the Variables

Lecture: As Table 6 shows, the t-test found no statistically significant difference in means between Lecture and Efficiency of the training program. The calculated t-value (0.587) is less than the tabulated t-value (1.984) at 198 degrees of freedom, the p-value (0.59) is greater than the 0.05 significance level, and the 95% confidence interval of the difference (lower −0.1716, upper 0.0976) spans zero. The difference in means is therefore non-significant, and we fail to reject the null hypothesis.

Hence the first hypothesis, H1 (“Training participants perceive there are some mean differences in training program effectiveness due to Lectures”), is not confirmed.

Practical: The t-test results confirm a statistically significant difference in means between Practical and Efficiency: the p-value (p < 0.0001) is below the 0.05 significance level, and both bounds of the 95% confidence interval of the difference are negative (lower −1.1191, upper −1.191), so the interval excludes zero (Table 6).

Hence the second hypothesis, H2 (“Training participants perceive there are some mean differences in training program effectiveness due to Practical”), is confirmed.

Demos: The t-test results confirm a statistically significant difference in means between Demos and Efficiency: the p-value (p < 0.0001) is below the 0.05 significance level, and both bounds of the 95% confidence interval of the difference are negative (lower −0.5606, upper −0.2476), so the interval excludes zero (Table 6).

Hence the third hypothesis, H3 (“Training participants perceive there are some mean differences in training program effectiveness due to Demos”), is confirmed.

Field Visit: The t-test results confirm a statistically significant difference in means between Field Visit and Efficiency: the p-value (p < 0.0001) is below the 0.05 significance level, and both bounds of the 95% confidence interval of the difference are negative (lower −0.6151, upper −0.3077), so the interval excludes zero (Table 6).

Hence the fourth hypothesis, H4 (“Training participants perceive there are some mean differences in training program effectiveness due to Field Visits”), is confirmed.

Methodology: The t-test results confirm a statistically significant difference in means between Methodology and Efficiency: the p-value (p < 0.0001) is below the 0.05 significance level, and both bounds of the 95% confidence interval of the difference are negative (lower −0.46, upper −0.20), so the interval excludes zero (Table 6).

Hence the fifth hypothesis, H5 (“Training participants perceive there are some mean differences in training program effectiveness due to Methodology”), is confirmed.

Instructor: As Table 6 shows, the t-test found no statistically significant difference in means between Instructor and Efficiency of the training program. The calculated t-value (0.735) is less than the tabulated t-value (1.984) at 198 degrees of freedom, the p-value (0.201) is greater than the 0.05 significance level, and the 95% confidence interval of the difference (lower −0.15, upper 0.7356) spans zero. The difference in means is therefore non-significant, and we fail to reject the null hypothesis.

Hence the sixth hypothesis, H6 (“Training participants perceive there are some mean differences in training program effectiveness due to Instructor”), is not confirmed.

Taken together, the t-test results confirm a statistically significant difference in means between the independent variables and the dependent variable Efficiency, which affects the Effectiveness of the training program.

Hence the seventh hypothesis, H7 (“Training participants perceive there are some mean differences in training program effectiveness due to six said independent variables”), is confirmed.
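The confidence-interval criterion used throughout this subsection can be stated compactly: a difference in means is significant at the 5% level when its 95% confidence interval lies wholly on one side of zero. The sketch below applies that rule to the bounds quoted in the text. Practical is left out because its printed bounds cannot be ordered as a lower and an upper limit, and the helper name is hypothetical.

```python
# 95% confidence bounds of the mean differences, as quoted in the text.
reported_ci = {
    "Lecture": (-0.1716, 0.0976),
    "Demos": (-0.5606, -0.2476),
    "Field Visit": (-0.6151, -0.3077),
    "Methodology": (-0.46, -0.20),
    "Instructor": (-0.15, 0.7356),
}


def excludes_zero(lower, upper):
    """True when a confidence interval lies wholly on one side of zero."""
    return lower > 0 or upper < 0


decisions = {
    name: "reject H0" if excludes_zero(lo, hi) else "fail to reject H0"
    for name, (lo, hi) in reported_ci.items()
}
for name, decision in decisions.items():
    print(f"{name}: {decision}")
```

An interval that contains zero means that a true difference of zero is compatible with the data, so the null hypothesis of equal means cannot be rejected.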

5.2. Testing Hypothesis-Reasons

The reasons for accepting hypotheses H2, H3, H4, H5 and H7 are as follows.

Most of the training course participants strongly agreed with the way the practicals, demos, field visits and methodology were carried out during the training program, which significantly influenced the Effectiveness of the training program for participants. Further, the instructors for the practicals, demos and field visits were the same as those for methodology. These four variables played an important role in improving the personal efficiency of the course participants when tested two months after training. However, some lectures and instructors were rated low by some participants, possibly because the delivery style did not impress them. We have also observed over the three years of these eight courses that participants are eager to apply the knowledge gained from the lectures through practicals, demos and field visits. These issues need to be addressed positively.

5.3. Multiple Regression Analysis

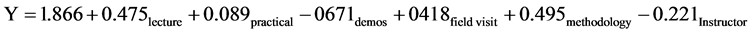

We carried out a multiple regression analysis to predict the value of the dependent variable, Efficiency, from the values of the six independent variables, and to measure the relationship between the independent and dependent variables (Table 7). With a p-value of zero to four decimal places, the model is statistically significant. The R-squared is 0.3333, meaning that approximately 33% of the variability of Efficiency is accounted for by the variables in the model; about 33% remains accounted for even after taking into account the number of predictor variables in the model. The coefficient of each variable indicates the amount of change one could expect in Efficiency given a one-unit change in the value of that variable, with all other variables in the model held constant. For the variable Lecture, for example, we would expect an increase of 0.475 in the Efficiency score for every one-unit increase in Lecture, assuming that all other variables in the model are held constant. To compare the strength of the coefficients, standardized beta coefficients were computed (Table 7 & Table 8). Methodology has the largest beta value (0.44817) and Demos the smallest (−0.32352). One standard deviation increase in Methodology leads to a 0.44817 standard deviation increase in predicted Efficiency, with the other variables held constant. In the same way, one standard deviation increase in Demos leads to a 0.32352 standard deviation decrease in predicted Efficiency, with the other variables held constant, and so on. From the values of the estimated regression coefficients the sample regression equation can be written as:
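The estimated values belong to Table 8 and, apart from the Lecture coefficient of 0.475, are not repeated in the text. As a sketch of the general form only, using placeholder symbols $b_0, \dots, b_6$ for the intercept and the six coefficients (this notation is ours, not the authors'), an equation of this kind reads as follows; the second line gives the usual relation between a raw coefficient $b_j$ and its standardized beta, where $s_{x_j}$ and $s_y$ are the sample standard deviations of the predictor and of Efficiency.

```latex
\widehat{\text{Efficiency}} = b_0 + b_1\,\text{Lecture} + b_2\,\text{Practical} + b_3\,\text{Demos}
  + b_4\,\text{Field Visit} + b_5\,\text{Methodology} + b_6\,\text{Instructor}

\beta_j = b_j \,\frac{s_{x_j}}{s_y}, \qquad j = 1, \dots, 6
```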

The independent variables Lecture, Practical and Field Visit have a partial impact on the overall Effectiveness of the training program. The estimated negative signs imply that Demos and Instructor reduce the overall efficiency of the training program when all other variables in the model are held constant, while the other variables have a positive effect.
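To make the quantities discussed above concrete (raw coefficients, standardized betas and R-squared), the following is a minimal ordinary least squares sketch in plain Python. It is an illustration under stated assumptions rather than the SAS analysis reported here: the function names are hypothetical, only two predictors are used, and the data are invented.

```python
import statistics


def solve(a, b):
    """Solve the linear system a x = b by Gauss-Jordan elimination with pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def ols(xs, y):
    """Least-squares fit of y on the columns of xs, with an intercept added.

    Returns (coefficients with the intercept first, standardized betas, R-squared).
    """
    rows = [[1.0] + list(r) for r in xs]
    p = len(rows[0])
    # Normal equations: (X'X) b = X'y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    coef = solve(xtx, xty)
    fitted = [sum(c * v for c, v in zip(coef, r)) for r in rows]
    y_bar = statistics.mean(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    sd_y = statistics.stdev(y)
    betas = [coef[j + 1] * statistics.stdev([r[j] for r in xs]) / sd_y
             for j in range(p - 1)]
    return coef, betas, 1 - ss_res / ss_tot


# Hypothetical ratings for two predictors and an outcome (illustrative only).
predictors = [[4, 3], [3, 4], [5, 2], [2, 5], [4, 4], [3, 2], [5, 5], [2, 3]]
outcome = [4, 3, 5, 2, 4, 3, 4, 3]
coefficients, std_betas, r_squared = ols(predictors, outcome)
print("coefficients:", coefficients)
print("standardized betas:", std_betas)
print("R-squared:", r_squared)
```

Raw coefficients are expressed in the units of each predictor, so they cannot be compared directly; the standardized betas put all predictors on a common standard-deviation scale, which is why they are used to rank the variables.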


Table 7. Results from multiple regression analysis (analysis of variance).


Table 8. Results from multiple regression analysis.

6. Discussion

The purpose of the present study was to see the effect of training methodology as a whole on Training Program Effectiveness. The hypotheses were formulated to measure whether delivering the training improves the efficiency of the course participant. The results of the study supported four of the hypotheses on individual variables, and the inferential calculations found a significant association for those course participants who strongly agreed that they had improved their efficiency, received effective coaching, and felt that the practicals, demos, field visits and methodology enhanced their knowledge and would improve their job efficiency. This is in line with the findings of a similar study carried out by Debra Truitt [41]. These results agree with those of Subramanian (2010) [42], Iqbal (2011) [31] and Neeraj et al. (2014) [25], who studied the evaluation of training using descriptive statistics, inferential statistics and regression models.

The results of the questionnaire demonstrated a strong relationship between the training program characteristics (practical, demos, field visits, methodology) and training program efficiency. This finding also supports the case studies that discussed training program characteristics and training program effectiveness in organizations [43] [44].

The research did not find any significant differences between younger and older training participants, including in overall training program efficiency. However, women participants indicated more positive training participation attitudes than men. Future research may address this gender-related disparity when further training courses are conducted.

In summary, the authors examined the hypotheses that the six independent variables have an effect on the dependent variable, training program effectiveness, and the results supported five of the seven hypotheses (H2, H3, H4, H5 and H7). However, given the nature and scope of the study, it has some limitations.

Survey research has some problems associated with its use, since self-reported instruments may not be complete and reliable. However, strong internal consistency of the instrument was confirmed by both Cronbach’s alpha and the Spearman-Brown split-half reliability statistic, overall and for the individual variables, using ordinal data.

A major limitation to the interpretation of the results lies with the instrument, i.e. the survey questionnaire. The questionnaire was distributed to all course participants at the end of the training. Participants who had not attended every session may nevertheless have rated sessions they did not attend. Some course participants did, however, honestly answer “not attended the session” for some questions. Most of the trainees rated the sessions frankly, as most of them are mature employees, and they even commented on exactly what they expect from the training. We are appreciative of the participants’ comments, which were really helpful in changing the course methodology accordingly. Comparing the survey from the first course with that from the eighth, the survey responses suggest a substantial improvement in the methodology. We believe there is still scope for improving the training methodology. It is also important to note that the authors were not able to find any literature that attempts to evaluate empirically the effectiveness of crop improvement training involving modern topics such as advanced molecular plant breeding methods, genomics for crop improvement, next-generation sequence analysis and data analysis using high-end computing.

Most of the participants stated that the training is relevant to their present role and will increase their confidence to pursue their job-related research more aggressively, in particular data analysis, in which most of the participants are not well versed; they were also happy to receive all the software they learned on a CD.

Future research could build on these results by quantitatively measuring the actual increase in efficiency in performing jobs and by measuring the gender-related disparity. When training is perceived by the employee to be relevant and useful, the results will show in improved efficiencies: in this case, course participants writing successful funding proposals and transferring the knowledge to their colleagues. In response to the open-ended survey question about the length of the training program, most participants gave the same answer: extend the training from two to three weeks. This strengthens our case with the donor agencies for increased funding for such training courses.

7. Conclusions

To conclude, the data collected supported our hypotheses that the training program is positively correlated with the efficiency of participants. The study was conducted with a sample of 200 training course participants from eight two-week training courses spread over three years. The course content is need based and changes to some extent from course to course. One limitation is that the authors considered only the topic areas common to all courses for data analysis. As the training was spread over three years, instructors and lectures also changed for at least some sessions. Further, this study estimated efficiency using the independent variables.

The overall efficiency of the program as per this study is very good, but improvement is needed in certain areas, such as more time for demos and practicals, and an increase to a three-week training period needs to be considered. The authors believe that the study is an important first step in this line of research addressing training issues in modern crop improvement research.