Identification of Textile Defects Based on GLCM and Neural Networks

Received 7 October 2015; accepted 29 November 2015; published 2 December 2015

1. Introduction

Texture analysis is necessary for many computer image analysis applications, such as classification, detection, or segmentation of images. Moreover, defect detection is an important problem in the fabric quality control process. At present, fabric quality identification is performed manually. Tissue online Automatic Inspection (TAI) can therefore increase the efficiency of production lines and improve the quality of the products. Previous attempts follow three different approaches: statistical, spectral, and model-based [1].

In this research paper, we investigate the potential of the Gray Level Co-occurrence Matrix (GLCM), combined with neural networks used as a classifier, to identify textile defects. The GLCM is a widely used texture descriptor [2] [3]. Its statistical features are based on the gray-level intensities of the image, and they are useful in texture recognition [4], image segmentation [5] [6], image retrieval [7], color image analysis [8], image classification [9] [10], object recognition [11] [12], and texture analysis methods [13] [14]. Here, the GLCM is used as a technique for extracting statistical texture features from the digital textile image, and the neural network is used as a classifier to detect the presence of defects in textile fabric products.

The paper is organized as follows. Section 2 briefly presents the GLCM. Section 3 describes the concept of neural networks trained as multilayer perceptrons. Image analysis (feature extraction and data preprocessing) is given in Section 4, where Subsection 4.2 presents the numerical simulation and discussion. Section 5 concludes the paper.

2. Gray-Level Co-Occurrence Matrix (GLCM)

One of the simplest approaches for describing texture is to use statistical moments of the intensity histogram of an image or region [15]. However, histogram-based measures carry information only about the intensity distribution, not about the relative position of pixels with respect to each other within the texture. A statistical method such as the co-occurrence matrix is therefore important for obtaining valuable information about the relative position of neighboring pixels in an image.

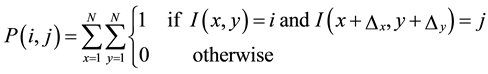

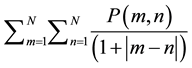

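Given an N × N image I and a displacement (Δx, Δy), the co-occurrence matrix P is defined as:

$$P(i,j)=\sum_{x=1}^{N}\sum_{y=1}^{N}\begin{cases}1, & \text{if } I(x,y)=i \text{ and } I(x+\Delta x,\,y+\Delta y)=j,\\ 0, & \text{otherwise.}\end{cases} \tag{1}$$

The features below use the normalized matrix $p(i,j)=P(i,j)\big/\sum_{i,j}P(i,j)$.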

For more information, see [16].
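As an illustration of Eq. (1), the following plain-Python sketch counts co-occurrences for a single displacement. It is not the authors' MATLAB code. It assumes the image is already quantized to integer gray levels in `range(levels)`, that x indexes rows and y indexes columns, and that pixel pairs whose neighbor falls outside the image are skipped. The function name `glcm` is hypothetical.

```python
def glcm(image, dr, dc, levels):
    """Co-occurrence counts P[i][j] for the displacement (dr, dc), as in Eq. (1).

    image  -- 2-D list of ints, each in range(levels)
    dr, dc -- row and column displacement (the (Δx, Δy) of Eq. (1))
    levels -- number of gray levels (size of the square matrix P)
    """
    rows, cols = len(image), len(image[0])
    P = [[0] * levels for _ in range(levels)]
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            # Count the pair only if the neighbour lies inside the image.
            if 0 <= r2 < rows and 0 <= c2 < cols:
                P[image[r][c]][image[r2][c2]] += 1
    return P


img = [[0, 0, 1],
       [1, 2, 2],
       [0, 2, 1]]
print(glcm(img, 0, 1, 3))  # horizontal neighbour -> [[1, 1, 1], [0, 0, 1], [0, 1, 1]]
```

Each row of this 3 × 3 image contributes two horizontal pairs, so the six counts in P sum to 6.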

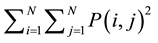

In the following, we review the features of a digital image computed from the GLCM: Energy, Contrast, Correlation, and Homogeneity (the feature vector). The energy, also known as uniformity or angular second moment (ASM), is calculated as:

Energy:

$$\text{Energy}=\sum_{i}\sum_{j}p(i,j)^{2} \tag{2}$$
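Energy reaches its maximum of 1.0 when a single gray-level pair accounts for every co-occurrence. It decreases as the co-occurrences spread over many pairs. The sketch below assumes the usual normalization p = P / ΣP before Eq. (2) is applied. `normalize` and `energy` are hypothetical helper names.

```python
def normalize(P):
    """Convert raw co-occurrence counts P(i, j) into probabilities p(i, j)."""
    total = sum(sum(row) for row in P)
    return [[count / total for count in row] for row in P]


def energy(p):
    """Energy / angular second moment, Eq. (2): sum of p(i, j) squared."""
    return sum(v * v for row in p for v in row)


print(energy(normalize([[1, 1], [1, 1]])))  # spread-out GLCM -> 0.25
print(energy(normalize([[4, 0], [0, 0]])))  # single gray-level pair -> 1.0
```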

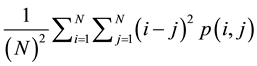

Contrast measures the local variation (gross variance) of the gray levels in the texture. It is expected to be high in a coarse texture when the local variation of the gray level is significant. Mathematically, this feature is calculated as:

Contrast:

$$\text{Contrast}=\sum_{i}\sum_{j}|i-j|^{2}\,p(i,j) \tag{3}$$
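The sketch below evaluates Eq. (3) on a normalized GLCM `p`, given as a list of lists. The toy matrices are hypothetical. The weight |i − j|² makes contrast zero when neighbors always share a gray level, and it grows quadratically with the gray-level gap between neighbors.

```python
def contrast(p):
    """Contrast, Eq. (3): sum of |i - j|^2 * p(i, j) over a normalized GLCM p."""
    n = len(p)
    return sum((i - j) ** 2 * p[i][j] for i in range(n) for j in range(n))


smooth = [[0.5, 0.0], [0.0, 0.5]]          # neighbours always share a gray level
alternating = [[0.0, 0.5], [0.5, 0.0]]     # neighbours always differ by one level
far = [[0.0, 0.0, 1.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]  # levels 0 and 2 side by side
print(contrast(smooth), contrast(alternating), contrast(far))  # 0.0 1.0 4.0
```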

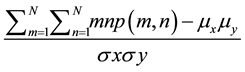

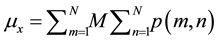

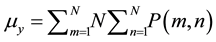

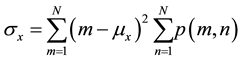

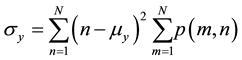

Correlation measures the linear dependence of the gray level of a pixel on those of its neighboring pixels, as defined by the displacement in Eq. (1). It is computed as:

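Correlation:

$$\text{Correlation}=\sum_{i}\sum_{j}\frac{(i-\mu_i)(j-\mu_j)\,p(i,j)}{\sigma_i\,\sigma_j} \tag{4}$$

where

$$\mu_i=\sum_{i}\sum_{j} i\,p(i,j),\qquad \mu_j=\sum_{i}\sum_{j} j\,p(i,j),$$

$$\sigma_i^{2}=\sum_{i}\sum_{j}(i-\mu_i)^{2}\,p(i,j),\qquad \sigma_j^{2}=\sum_{i}\sum_{j}(j-\mu_j)^{2}\,p(i,j).$$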
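A minimal sketch of Eq. (4), computing the marginal means and standard deviations from the normalized GLCM `p`. Correlation is undefined when either standard deviation is zero, as in a constant region. Returning NaN in that case is a choice made for this sketch.

```python
import math


def correlation(p):
    """Correlation, Eq. (4), with the marginal means and deviations defined below it."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    mu_i = sum(i * v for i, _, v in cells)
    mu_j = sum(j * v for _, j, v in cells)
    sigma_i = math.sqrt(sum((i - mu_i) ** 2 * v for i, _, v in cells))
    sigma_j = math.sqrt(sum((j - mu_j) ** 2 * v for _, j, v in cells))
    if sigma_i == 0 or sigma_j == 0:
        # Eq. (4) is undefined for a constant region; this sketch returns NaN.
        return float("nan")
    return sum((i - mu_i) * (j - mu_j) * v for i, j, v in cells) / (sigma_i * sigma_j)


print(correlation([[0.5, 0.0], [0.0, 0.5]]))  # neighbours equal    -> 1.0
print(correlation([[0.0, 0.5], [0.5, 0.0]]))  # neighbours opposite -> -1.0
```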

Homogeneity measures the local similarity of pixel pairs: it is high when the gray levels of the two pixels in each pair are similar. It is calculated as follows:

Homogeneity:

$$\text{Homogeneity}=\sum_{i}\sum_{j}\frac{p(i,j)}{1+|i-j|} \tag{5}$$
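A short sketch of the homogeneity feature on a normalized GLCM `p`. The denominator 1 + |i − j| is an assumption of this sketch; some references use 1 + (i − j)² instead. Mass on the diagonal contributes fully, and mass farther from the diagonal is damped.

```python
def homogeneity(p):
    """Homogeneity, Eq. (5): sum of p(i, j) / (1 + |i - j|) over a normalized GLCM p."""
    n = len(p)
    return sum(p[i][j] / (1 + abs(i - j)) for i in range(n) for j in range(n))


print(homogeneity([[0.5, 0.0], [0.0, 0.5]]))  # all mass on the diagonal -> 1.0
print(homogeneity([[0.0, 0.5], [0.5, 0.0]]))  # all mass one step off    -> 0.5
```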

3. Neural Networks Construction

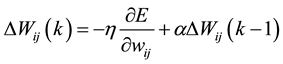

Artificial neural networks (ANNs) are massively parallel, interconnected structures composed of simple nonlinear processing units. Because of their massively parallel structure, they can perform high-speed computations when implemented on dedicated hardware. Owing to their adaptive nature, they can learn the characteristics of the input signals and adapt to changes in the data. The nonlinear character of ANNs helps them perform function approximation and signal filtering operations that are beyond the reach of optimal linear techniques [17]-[19]. The output layer provides the response of the network to a given set of input values (Figure 1). In this work, a multilayer feed-forward artificial neural network is used as the classifier. The objective of back-propagation training is to change the connection weights of the neurons in the direction that minimizes the error E, defined as the squared difference between the desired and actual outputs of the output nodes. The change of the connection weight at the kth iteration is given by the following equation:

$$\Delta w_{ij}(k)=-\eta\,\frac{\partial E}{\partial w_{ij}}+\alpha\,\Delta w_{ij}(k-1) \tag{6}$$

where η is the proportionality constant termed the learning rate and α is the momentum term (for more information, see [20]-[22]).
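To show how the learning rate and momentum interact in the weight update, the following sketch applies the rule to a single weight. The error function is a hypothetical toy, E(w) = ½(target − w)², and not the network error. The defaults η = 0.01 and α = 0.9 are the values reported in Subsection 4.2. The momentum term reuses the previous step, which speeds convergence compared with α = 0.

```python
def descend_with_momentum(w, target, eta=0.01, alpha=0.9, iterations=2000):
    """Minimize a hypothetical toy error E(w) = 0.5 * (target - w)^2 with Eq. (6)."""
    delta_prev = 0.0
    for _ in range(iterations):
        grad = -(target - w)                      # dE/dw for the toy error
        delta = -eta * grad + alpha * delta_prev  # Eq. (6): gradient step plus momentum
        w += delta
        delta_prev = delta
    return w


print(round(descend_with_momentum(w=0.0, target=3.0), 6))
```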

Once the number of layers and the number of units in each layer are selected, the weights and thresholds of the network must be set so as to minimize the prediction error of the system. This is the goal of learning algorithms, which adjust the weights and thresholds automatically to reduce this error (see [23] [24]).

4. Image Analysis (Feature Extraction & Preprocessing Data)

Feature extraction is defined as the transformation of the input data into a set of characteristics of reduced dimensionality. In other words, the input data of an algorithm are converted into a reduced representation: a set of features (the feature vector).

In this paper, we extract four characteristics of a digital image by using the GLCM: Contrast, Correlation, Energy, and Homogeneity. The system was implemented in MATLAB; the MATLAB functions used in this work are given between the two <<>>. The proposed system is mainly composed of three modules: pre-processing, segmentation, and feature extraction. Pre-processing is done by median filtering <<>>. Segmentation is carried out by the Otsu method <<>>. Feature extraction is based on the GLCM features (Contrast, Correlation, Homogeneity, and Energy) implemented by <<>>. The textural characteristics of the segmented image are extracted using gray-level co-occurrence matrices (GLCM) computed for four spatial orientations: horizontal, left diagonal, vertical, and right diagonal, corresponding to 0°, 45°, 90°, and 135°, using <<>> with the offsets {[0 1], [−1 1], [−1 0], [−1 −1]}, which define the neighboring pixel in each of the four possible directions (see Figure 2).
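The MATLAB functions the authors used for pre-processing and segmentation cannot be recovered from this text. The sketch below re-implements the two operations in plain Python as an illustration. Border handling in the median filter (replicated edge pixels) is a choice of this sketch. Otsu's method picks the threshold that maximizes the between-class variance. Labeling pixels above the threshold as 1 is also an assumption. All function names are hypothetical.

```python
def median_filter3(img):
    """3x3 median filter; borders use replicated edge pixels (a choice of this sketch)."""
    rows, cols = len(img), len(img[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            window = sorted(
                img[min(max(r + dr, 0), rows - 1)][min(max(c + dc, 0), cols - 1)]
                for dr in (-1, 0, 1) for dc in (-1, 0, 1)
            )
            out[r][c] = window[4]  # middle of the 9 sorted values
    return out


def otsu_threshold(img, levels=256):
    """Gray level t maximizing the between-class variance of {<= t} and {> t}."""
    hist = [0] * levels
    for row in img:
        for v in row:
            hist[v] += 1
    total = sum(hist)
    sum_all = sum(g * h for g, h in enumerate(hist))
    w0, sum0 = 0, 0
    best_t, best_between = 0, -1.0
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        mu0, mu1 = sum0 / w0, (sum_all - sum0) / w1
        between = w0 * w1 * (mu0 - mu1) ** 2  # proportional to between-class variance
        if between > best_between:
            best_t, best_between = t, between
    return best_t


noisy = [[10, 10, 200, 200],
         [10, 255, 200, 200],   # 255 is an isolated impulse
         [10, 10, 200, 200]]
clean = median_filter3(noisy)
t = otsu_threshold(clean)
mask = [[1 if v > t else 0 for v in row] for row in clean]
print(clean[1][1], t, mask[1])  # -> 10 10 [0, 0, 1, 1]
```

The median filter removes the isolated impulse before thresholding, so it cannot shift the histogram that Otsu's method works on.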

In Subsection 4.1, we present the steps of the descriptor based on the GLCM computation.

4.1. GLCM Descriptor Steps

1) Preprocessing (color-to-gray conversion, noise removal, resizing to 256 × 256);

2) Segmentation;

3) Feature extraction based on GLCM features using <<>> with the offset [0 1; −1 1; −1 0; −1 −1];

4) Concatenation of the Contrast, Correlation, Energy, and Homogeneity features.

![]()

Figure 1. Feed-forward neural network with three layers.

![]()

Figure 2. Adjacency of pixels in four directions (horizontal, vertical, left and right diagonals).
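Steps 3 and 4 of the descriptor can be sketched in plain Python as follows. The code computes a normalized GLCM for each of the four offsets (read as row and column displacements), takes the four features of each, and concatenates them into a 16-dimensional vector. Several details are assumptions of this sketch rather than details stated in the text: the image is taken as already quantized to `levels` gray levels, the GLCMs are not symmetrized, and the concatenation order is feature by feature. The helper names are hypothetical.

```python
import math

# Offsets from Section 4 as (row, column) displacements: 0°, 45°, 90°, 135°.
OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1)]


def normalized_glcm(img, dr, dc, levels):
    """Eq. (1) for one offset, divided by the number of counted pixel pairs."""
    rows, cols = len(img), len(img[0])
    P = [[0] * levels for _ in range(levels)]
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                P[img[r][c]][img[r2][c2]] += 1
    total = sum(map(sum, P))
    return [[v / total for v in row] for row in P]


def glcm_features(p):
    """(contrast, correlation, energy, homogeneity) of a normalized GLCM, Eqs. (2)-(5)."""
    cells = [(i, j, v) for i, row in enumerate(p) for j, v in enumerate(row)]
    mu_i = sum(i * v for i, _, v in cells)
    mu_j = sum(j * v for _, j, v in cells)
    s_i = math.sqrt(sum((i - mu_i) ** 2 * v for i, _, v in cells))
    s_j = math.sqrt(sum((j - mu_j) ** 2 * v for _, j, v in cells))
    contrast = sum((i - j) ** 2 * v for i, j, v in cells)
    if s_i > 0 and s_j > 0:
        correlation = sum((i - mu_i) * (j - mu_j) * v for i, j, v in cells) / (s_i * s_j)
    else:
        correlation = float("nan")  # undefined for a constant region
    energy = sum(v * v for _, _, v in cells)
    homogeneity = sum(v / (1 + abs(i - j)) for i, j, v in cells)
    return contrast, correlation, energy, homogeneity


def glcm_descriptor(img, levels):
    """16-D vector: 4 features x 4 offsets, grouped feature by feature."""
    per_offset = [glcm_features(normalized_glcm(img, dr, dc, levels)) for dr, dc in OFFSETS]
    return [per_offset[k][f] for f in range(4) for k in range(len(OFFSETS))]


checker = [[(r + c) % 2 for c in range(4)] for r in range(4)]  # 2-level checkerboard
vec = glcm_descriptor(checker, levels=2)
print(len(vec), vec[:4])  # 16 [1.0, 0.0, 1.0, 0.0]  (contrast at 0°, 45°, 90°, 135°)
```

On the checkerboard, horizontal and vertical neighbors always differ while diagonal neighbors always match. This is why the contrast alternates between 1 and 0 across the four directions.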

The proposed algorithm was implemented in a MATLAB program developed by the author. Applying the GLCM descriptor steps of Subsection 4.1 to an image yields a feature vector of dimension 16 (four features for each of the four orientations), which is the input of the neural network classifier (Figure 3). The data set was divided into two sets, one for training and one for testing. The preprocessing parameters were determined from a matrix containing all the feature vectors used for training, and the same settings were used to pre-process the test feature vectors before they were presented to the trained neural network.
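Normalization statistics are computed on the training features only and then reused unchanged on the test features. The following plain-Python sketch illustrates this, assuming the zero-mean, unit-standard-deviation normalization stated in the next paragraph. The sample standard deviation (n − 1) is a choice of this sketch, and it needs at least two training vectors. The vectors are stored as rows here for convenience, whereas the paper arranges them as columns. The helper names are hypothetical.

```python
import math


def fit_zscore(train_vectors):
    """Per-feature mean and sample standard deviation, computed on training vectors only."""
    n = len(train_vectors)
    means, stds = [], []
    for f in range(len(train_vectors[0])):
        column = [vec[f] for vec in train_vectors]
        mu = sum(column) / n
        sd = math.sqrt(sum((x - mu) ** 2 for x in column) / (n - 1))
        means.append(mu)
        stds.append(sd if sd > 0 else 1.0)  # leave constant features unscaled
    return means, stds


def apply_zscore(vectors, means, stds):
    """Apply the stored training statistics to any set of feature vectors."""
    return [[(x - m) / s for x, m, s in zip(vec, means, stds)] for vec in vectors]


train = [[1.0, 10.0], [3.0, 30.0]]   # toy 2-feature vectors (real ones have 16)
test = [[2.0, 20.0], [5.0, 50.0]]
means, stds = fit_zscore(train)
print(apply_zscore(test, means, stds))
```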

A fixed number of examples from each class is assigned to the training set, and the remaining examples form the testing set. The inputs and targets are normalized to zero mean and unit standard deviation. The feed-forward back-propagation network is trained on the normalized training set. The number of ANN inputs equals the number of features (m = 16). Each hidden layer contains 2m = 32 neurons, and the number of outputs is two, equal to the number of classes (without defects and with defects; see Figure 4 and Figure 5, respectively).
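A forward pass through a network of the stated size (16 inputs, 32 hidden neurons, 2 outputs) can be sketched as follows. The text does not specify the activation functions. A tanh hidden layer and a logistic output layer, whose outputs lie in [0, 1], are assumptions here. The random initialization is also hypothetical, and the back-propagation training with Eq. (6) is not shown.

```python
import math
import random


def make_layer(n_in, n_out, rng):
    """Weight matrix with one extra bias weight per unit (hypothetical initialization)."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(n_out)]


def forward(layer, x, activation):
    """Weighted sum plus bias for every unit, followed by the activation."""
    # zip stops at len(x), so the last weight of each unit is used only as the bias.
    return [activation(sum(w * xi for w, xi in zip(unit, x)) + unit[-1]) for unit in layer]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


m = 16                                    # GLCM features = network inputs
rng = random.Random(0)
hidden_layer = make_layer(m, 2 * m, rng)  # 2m = 32 hidden neurons
output_layer = make_layer(2 * m, 2, rng)  # 2 classes: without / with defects

x = [0.1] * m                             # placeholder normalized feature vector
h = forward(hidden_layer, x, math.tanh)
y = forward(output_layer, h, sigmoid)
print(len(h), len(y))                     # 32 2
```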

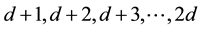

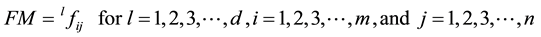

4.2. Simulation and Discussion

We have developed a real database of 1500 images of jeans fabric, some of which contain defects. The momentum is α = 0.9 and the learning rate is η = 0.01 (Equation (6)). The ANN output is a vector in [0, 1]^n. All the features of the training set are arranged in a matrix FM, in which each column holds the features of one pattern. If the training set contains d instances of each class, the matrix FM has m rows and d × n columns, where m is the number of features (feature vector size) and n is the number of classes. Columns 1, 2, …, d of FM hold the feature instances of the patterns (textiles) that belong to class 1, columns d + 1, …, 2d hold those of the patterns that belong to class 2, etc. (see Figure 6(a) with d = 6 and Figure 6(b) with d = 9).

(7)
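The sketch below arranges training vectors into a class-grouped feature matrix. It assumes d training vectors of length m per class, as in Figure 6, and places all columns of class 1 before those of class 2. The exact content of Eq. (7) did not survive extraction, so the one-hot target matrix paired with FM is an assumption: a common choice for a two-output classifier, not necessarily the authors' formulation. The helper names and toy values are hypothetical.

```python
def build_fm(vectors_by_class):
    """Feature matrix FM: one column per training pattern, class 1 columns first.

    vectors_by_class[k] is the list of d feature vectors (length m) of class k + 1.
    Returns FM as m rows by d * n columns (n = number of classes).
    """
    columns = [vec for class_vectors in vectors_by_class for vec in class_vectors]
    return [[col[f] for col in columns] for f in range(len(columns[0]))]


def build_targets(d, n):
    """Hypothetical one-hot target matrix: column c belongs to class c // d."""
    return [[1.0 if c // d == k else 0.0 for c in range(d * n)] for k in range(n)]


no_defect = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]   # d = 2 toy vectors, m = 3
defect = [[1.1, 1.2, 1.3], [1.4, 1.5, 1.6]]
FM = build_fm([no_defect, defect])
T = build_targets(d=2, n=2)
print(len(FM), len(FM[0]))   # 3 4
print(T)                     # [[1.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]]
```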

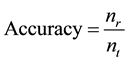

More information about the formulation of the inputs and outputs can be found in [25] and [26]. The accuracy of the system is calculated using the following equation:

$$\text{Accuracy}=\frac{N_c}{N_t}\times 100\% \tag{8}$$

where N_c is the number of correctly identified images and N_t is the total number of test images.
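A minimal sketch of the accuracy equation. Each test image is assigned to the class with the largest network output, a winner-take-all rule that is an assumption of this sketch. The result is the percentage of correctly identified images. The output values below are hypothetical.

```python
def accuracy(outputs, true_classes):
    """Eq. (8) as a percentage: correctly identified test images over all test images.

    outputs      -- one network output vector per test image
    true_classes -- index of the correct class for each test image
    """
    correct = sum(
        1 for y, k in zip(outputs, true_classes)
        if max(range(len(y)), key=lambda c: y[c]) == k  # winner-take-all decision
    )
    return 100.0 * correct / len(true_classes)


outputs = [[0.9, 0.1], [0.2, 0.8], [0.6, 0.4], [0.3, 0.7]]
print(accuracy(outputs, [0, 1, 1, 1]))  # 3 of 4 correct -> 75.0
```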

With a learning set of six patterns per category and a testing set of nine patterns per category, the accuracy is 100% (Figure 6). When the system is trained with 7 images of each class and tested with 11, 13, 18, 21, 23, and 28 images of each class, the accuracy ranges from 91% (worst) to 100% (best), as shown in Figure 7. The best result, 100% identification, is obtained when the testing sets contain 11, 13, and 18 images per class.

The system was also trained with ten sample images of each category and tested with 1, 2, …, 26 images of each category.

![]()

Figure 3. Flowchart of the proposed method.

![]()

Figure 4. Example of image without defect.

![]()

![]() (a) (b)

Figure 6. (a) Neural output matrix with the learning set, 6 patterns per class (100%); (b) neural output matrix with the testing set, 9 patterns per class (100%).

Figure 8 shows the system accuracy: the best is 100% and the worst is 90.5%. The best result, 100% identification, was obtained when the testing set contained 1, 2, …, 15 images per class.

Figure 7 shows the relationship between the system accuracy and the size of the testing data set. For a given learning set, increasing the number of testing patterns per class decreases the system efficiency. Conversely, increasing the ratio of training examples to testing examples increases the recognition rate.

![]()

Figure 7. (a)-(f) Neural output matrices for testing data sets of 11, 13, 18, 21, 23, and 28 patterns per class, with a learning data set of 7 patterns per class.

![]()

Figure 8. System efficiency for different sizes of the testing data set (1, 2, …, 26), with a learning data set of size 10.

5. Conclusion

We developed an efficient method for identifying textile defects based on GLCM and neural networks. The textile image descriptor, built from statistical features of the GLCM, is used as the input of a neural network classifier to recognize and classify defects in raw textiles. A feed-forward neural network with one hidden layer is used. Experimental results show that the proposed method is efficient: the recognition rate is 100% for training, and 100% (best) and 91% (worst) for testing. This study can contribute to the development of a computer-aided decision (CAD) system for Tissue online Automatic Inspection (TAI). In future work, other effective features will be extracted from textile images and used with other classifiers, such as support vector machines.