Development of a Quantitative Prediction Support System Using the Linear Regression Method

1. Introduction

In this digital age, the yields of a system are improved by rationalizing the resources mobilized in a production process through optimization methods and models. Specialists in various fields, such as political economists, statisticians, actuaries and mathematicians, can make significant contributions to optimization challenges such as the influence of climatic factors on agricultural harvests. Proven optimization methods can be used for this purpose.

The emergence of new data concepts, such as big data (voluminous and numerous data), calls for the development of new tools, as shown by the rise of optimization and classification methods. Multiple linear regression models, which are parametric models, are frequently used in data analysis procedures. The linear regression model has a wide range of applications [1]; in particular, it can be used to analyze data and make predictions. If there were a strict linear relationship between the variable to be explained (the target variable) and the explanatory (predictive) variable, the value of the target variable would be determined unequivocally once the value of the explanatory variable is known. In that idealized case the model's random error term is ignored; in general, the magnitude of this error determines the accuracy of the estimate [2].

To achieve the main goal of this work, the development of a quantitative prediction support system, we use linear regression and the least squares method as mathematical tools. Python libraries are then used to determine the parameter values, after which the results are discussed with an emphasis on their novelty and potential implications.

2. Materials, Tools, Equipment and Methods

2.1. Material

A spreadsheet and the Python language are used to build the linear regression model and to determine the values of its parameters by solving the system of equations obtained with the least squares method.

2.2. Tools and Equipment

Sums are calculated in Excel. In Python, the numpy library handles the numerical calculations, while the pandas library is used to load the model data.
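As an illustration of this division of labor, the following minimal sketch loads a small dataset with pandas and computes with numpy the kind of sums that would otherwise be tabulated in the spreadsheet. The in-memory CSV, the column names `x` and `y` and the helper names are hypothetical placeholders, not the study data.

```python
import io

import numpy as np
import pandas as pd

# Hypothetical dataset standing in for a spreadsheet export (NOT the study data).
CSV_TEXT = """x,y
1,3
2,5
3,7
4,9
"""


def load_data(text: str) -> pd.DataFrame:
    """Load the model data with pandas, as one would from a CSV export."""
    return pd.read_csv(io.StringIO(text))


def regression_sums(df: pd.DataFrame) -> dict:
    """Compute with numpy the sums a spreadsheet would tabulate for least squares."""
    x = df["x"].to_numpy(dtype=float)
    y = df["y"].to_numpy(dtype=float)
    return {
        "n": len(x),
        "sum_x": float(np.sum(x)),
        "sum_y": float(np.sum(y)),
        "sum_x2": float(np.sum(x * x)),
        "sum_xy": float(np.sum(x * y)),
    }


sums = regression_sums(load_data(CSV_TEXT))
print(sums)
```

These sums are exactly the quantities that appear in the least squares equations of a simple linear model, so the spreadsheet and the Python route can be cross-checked against each other.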

2.3. Methods

When applied to the linear regression model, the least squares method yields the parameter values that minimize the sum of squared errors, and these values can be computed exactly by solving a system of linear equations. The least squares method is a tool used in all observational sciences, both in error theory and for purely algebraic estimation [3]. It solves the linear regression model equation by determining the values of its parameters. According to the Gauss-Markov theorem, “for a linear model, if the errors are uncorrelated and have zero expectation together with variances equal, then the least squares estimator is the best linear unbiased estimator of the coefficients” [4].

In this work, the least squares method is used to define the objective function of the model. A system of equations is then derived by setting the partial derivatives of this function with respect to the model's coefficients equal to zero.
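The derivation can be sketched as follows in the notation of the multiple model (observations $y_i$ and $x_{ij}$, parameters $a_0, \ldots, a_p$). The name $S$ for the objective function is introduced here only for illustration; this is the standard least squares derivation, not a reproduction of the authors' own worksheet.

```latex
S(a_0, \ldots, a_p) = \sum_{i=1}^{n} \varepsilon_i^2
  = \sum_{i=1}^{n} \Big( y_i - a_0 - \sum_{j=1}^{p} a_j x_{ij} \Big)^2

\frac{\partial S}{\partial a_0}
  = -2 \sum_{i=1}^{n} \Big( y_i - a_0 - \sum_{j=1}^{p} a_j x_{ij} \Big) = 0

\frac{\partial S}{\partial a_k}
  = -2 \sum_{i=1}^{n} x_{ik} \Big( y_i - a_0 - \sum_{j=1}^{p} a_j x_{ij} \Big) = 0,
  \qquad k = 1, \ldots, p

% In matrix form, with Y, X and a as in equation (3):
S(a) = (Y - Xa)^{\top} (Y - Xa), \qquad
\nabla S(a) = -2 X^{\top} (Y - Xa) = 0
\;\Longrightarrow\; X^{\top} X \, a = X^{\top} Y

% If X^T X is invertible, the unique solution is
\hat{a} = (X^{\top} X)^{-1} X^{\top} Y
```

The p + 1 equations obtained this way (the normal equations) form the linear system whose solution gives the model's coefficients.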

2.3.1. Mathematical Modeling

Linear regression models are classified into two types: 1) simple linear regression, which uses the traditional slope-intercept form and requires the two parameters a and b to be learned in order to make accurate predictions; and 2) multiple linear regression, which involves estimating the parameters that link an endogenous variable y to p exogenous variables $x_1, x_2, \ldots, x_p$.
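For the simple case, solving the two least squares equations gives closed-form values for the two parameters. The sketch below assumes the form y = a·x + b, with a as the slope and b as the intercept; this assignment of the letters is an assumption, and `fit_simple` is a hypothetical helper name.

```python
import numpy as np


def fit_simple(x, y):
    """Least squares fit of y = a*x + b (a: slope, b: intercept).

    Closed-form solution of the two normal equations:
        a = (n*Sxy - Sx*Sy) / (n*Sxx - Sx**2)
        b = (Sy - a*Sx) / n
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = x.size
    sx, sy = x.sum(), y.sum()
    sxx, sxy = (x * x).sum(), (x * y).sum()
    denom = n * sxx - sx ** 2
    if denom == 0:
        # All x values identical: the slope is not identifiable.
        raise ValueError("x must take at least two distinct values")
    a = (n * sxy - sx * sy) / denom
    b = (sy - a * sx) / n
    return a, b


# Points lying exactly on y = 2x + 1.
a, b = fit_simple([1, 2, 3, 4], [3, 5, 7, 9])
print(a, b)  # prints 2.0 1.0
```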

2.3.2. Model of Linear Regression

Equations (1) and (2) represent the simple linear regression equation and the multiple linear regression equation, respectively.

$$y_i = a x_i + b + \varepsilon_i \qquad (1)$$

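$$y_i = a_0 + a_1 x_{i1} + a_2 x_{i2} + \cdots + a_p x_{ip} + \varepsilon_i, \qquad i = 1, \ldots, n \qquad (2)$$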

where $y_i$ is the i-th observation of the variable y; $x_{ij}$ is the i-th observation of the j-th variable $x_j$; and $\varepsilon_i$ is the model's error. The error summarizes the missing information that would allow the values of y to be explained linearly by the p variables $x_1, x_2, \ldots, x_p$.

To solve the regression problem, we must estimate p + 1 parameters, which leads to equation (3), written in matrix form:

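$$
\begin{pmatrix} y_1 \\ \vdots \\ y_n \end{pmatrix}
=
\begin{pmatrix} 1 & x_{11} & \cdots & x_{1p} \\ \vdots & \vdots & & \vdots \\ 1 & x_{n1} & \cdots & x_{np} \end{pmatrix}
\begin{pmatrix} a_0 \\ \vdots \\ a_p \end{pmatrix}
+
\begin{pmatrix} \varepsilon_1 \\ \vdots \\ \varepsilon_n \end{pmatrix},
\quad \text{i.e.} \quad Y = Xa + \varepsilon \qquad (3)
$$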

The dimensions of the matrices in equation (3) are as follows: Y is (n, 1), X is (n, p + 1), a is (p + 1, 1), and the error vector ε is (n, 1).

The (n, p + 1)-dimensional matrix X contains all of the observations of the exogenous variables; its first column consists of the value 1, which integrates the constant a0 into the model equation.
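The construction of X and the resulting least squares system can be sketched with numpy as follows. The helper names and the small noise-free dataset (generated from y = 1 + 2·x1 − 3·x2) are hypothetical and serve only to show the shapes involved.

```python
import numpy as np


def design_matrix(features):
    """Build the (n, p + 1) matrix X: a leading column of ones for a0,
    followed by the n observations of the p exogenous variables."""
    features = np.asarray(features, dtype=float)
    if features.ndim == 1:
        features = features.reshape(-1, 1)
    ones = np.ones((features.shape[0], 1))
    return np.hstack([ones, features])


def least_squares(features, y):
    """Solve the normal equations X^T X a = X^T Y for a = (a0, ..., ap)."""
    X = design_matrix(features)
    Y = np.asarray(y, dtype=float)
    return np.linalg.solve(X.T @ X, X.T @ Y)


# Illustrative data generated from y = 1 + 2*x1 - 3*x2 (no noise).
x_obs = np.array([[0.0, 1.0], [1.0, 0.0], [2.0, 1.0], [3.0, 2.0], [4.0, 1.0]])
y_obs = 1.0 + 2.0 * x_obs[:, 0] - 3.0 * x_obs[:, 1]

X = design_matrix(x_obs)
print(X.shape)  # (5, 3), i.e. (n, p + 1)
print(least_squares(x_obs, y_obs))  # approximately [1, 2, -3]
```

Solving the normal equations directly mirrors the derivation; for badly conditioned data, `np.linalg.lstsq` on X itself is numerically safer and returns the same solution.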

2.3.3. Prediction Using Linear Regression

Prediction with a linear regression model relies on three key elements. First, the model data (dataset) contains the questions x and the answers y of the problem to be solved. Second, these data are used to build a model represented by a mathematical function whose coefficients are the model's parameters. Third, the cost function (or objective function) measures the model's errors on the data.
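The three elements can be illustrated with a minimal sketch. The data values are illustrative only, the model is assumed to be the simple form y = a·x + b, the cost is taken to be the sum of squared errors, and `np.polyfit` with degree 1 is used as a convenient least squares fit.

```python
import numpy as np

# 1) Model data (dataset): "questions" x and "answers" y (illustrative values).
x = np.array([1.0, 2.0, 3.0, 4.0])
y = np.array([2.9, 5.1, 7.0, 9.2])


# 2) Model: a mathematical function whose coefficients are the parameters.
def model(x, a, b):
    return a * x + b


# 3) Cost (objective) function: sum of squared errors of the model on the data.
def cost(a, b, x, y):
    residuals = y - model(x, a, b)
    return float(np.sum(residuals ** 2))


# Fit the parameters by least squares (polyfit returns [slope, intercept]).
a_hat, b_hat = np.polyfit(x, y, deg=1)
print(a_hat, b_hat)  # approximately 2.08 and 0.85
print(cost(a_hat, b_hat, x, y))  # smallest achievable cost for this model
print(model(5.0, a_hat, b_hat))  # prediction for a new x, approximately 11.25
```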

3. Results and Discussion

In a subsequent article, we plan to test the designed support system on climatic data in order to predict harvestable quantities as a function of the influencing climatic factors. For practical reasons, the model data (dataset) used here to determine the objective function are taken from data provided by the Geographical Institute of Burundi (IGEEBU) in 2018.

3.1. Production Estimation Based on Weather Conditions

In this study, we used test data from a sample provided by the Geographical Institute of Burundi, as shown in Table 1.

The parameters a, b, c, d, e, f, g, h, i, j and k of the model, formulated as the linear function (4), are determined by applying the least squares method.

(4)

First, we apply the least squares method to the model's linear function:

(5)

(6)

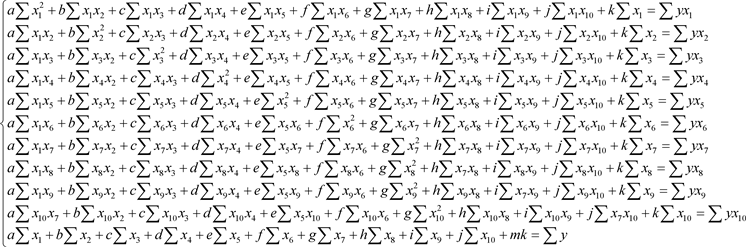

Calculating the partial derivatives with respect to the coefficients of the linear function yields the equations shown in Table 2.

Setting these partial derivatives equal to zero yields the system of equations (7).

3.1.1. Result 1: Gradient Descent Equation System

(7)


The system of Equations (7) is shown in matrix form in system (8) below:

(8)


X1: solar radiation level, X2: water stress level, X3: air temperature, X4: soil depth, X5: soil temperature, X6: evaporation rate, X7: precipitation quantity, X8: wind speed, X9: soil humidity, X10: relative air humidity, and Y: production.
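To make the shape of the matrix system concrete, the following sketch assembles and solves the least squares system for ten explanatory variables plus a constant term. It uses synthetic, noise-free observations generated from hypothetical coefficients; it does not reproduce the IGEEBU data or the coefficients obtained in this study, and placing the constant term first is an assumption.

```python
import numpy as np

# Factor names follow X1..X10 above; used here only to fix the count.
FACTORS = [
    "solar_radiation", "water_stress", "air_temperature", "soil_depth",
    "soil_temperature", "evaporation", "precipitation", "wind_speed",
    "soil_humidity", "relative_air_humidity",
]


def normal_equation_system(factors, production):
    """Return (A, B) such that A @ coef = B is the least squares system.

    A = X^T X has shape (11, 11) and B = X^T Y has shape (11,) for 10 factors
    plus the constant term, whose column of ones comes first in X.
    """
    X = np.column_stack([np.ones(len(production)), factors])
    Y = np.asarray(production, dtype=float)
    return X.T @ X, X.T @ Y


# Synthetic observations only (NOT the IGEEBU 2018 data): 30 records of 10 factors.
rng = np.random.default_rng(seed=0)
factors = rng.uniform(0.0, 10.0, size=(30, len(FACTORS)))
true_coef = np.arange(1.0, 12.0)  # hypothetical constant + 10 weights
production = true_coef[0] + factors @ true_coef[1:]

A, B = normal_equation_system(factors, production)
coef = np.linalg.solve(A, B)
print(A.shape, B.shape)  # (11, 11) (11,)
print(np.round(coef, 6))  # recovers 1, 2, ..., 11 on noise-free synthetic data
```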

3.1.2. Result 2: Factor Values or Climate Parameters

Applying the least squares method to the model's test data yields the effective values of the model's parameters, as given by system (9):

(9)

Solving system (9) yields the values of the parameters, which are the coefficients of the final model:

3.2. Discussion on the Obtained Results

Four results were obtained after applying the model to the study data (dataset).

1) A system of equations derived from the study data using the least squares method and linear regression.

2) The values of the model’s coefficients or parameters, which can be used to minimize or maximize the differences between the final and initial models.

3) The resulting objective function constitutes a quantitative prediction support that can be used in various fields to estimate the indicators of a process in which quantifiable and countable input factors interact. The outputs of such a process (results or products) are themselves quantifiable and countable, and optimal depending on the case.

4) Determining the influencing factors with the gradient descent method makes it possible to minimize or maximize the objective function, which can ultimately be used for prediction.
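How gradient descent is applied is not detailed in this section. As one illustration, assuming the function to be minimized is the least squares cost of the regression, the sketch below runs plain gradient descent on the mean squared error and checks that it reaches the same coefficients as the direct least squares solution. The learning rate, iteration count and data are hypothetical choices.

```python
import numpy as np


def gradient_descent(X, Y, learning_rate=0.01, n_iter=20000):
    """Minimize the mean squared error J(a) = ||Y - X a||^2 / n by gradient descent.

    X is the (n, p + 1) design matrix (first column of ones), Y has shape (n,).
    """
    n = X.shape[0]
    a = np.zeros(X.shape[1])
    for _ in range(n_iter):
        gradient = -2.0 / n * X.T @ (Y - X @ a)  # dJ/da
        a -= learning_rate * gradient  # step against the gradient
    return a


x = np.array([1.0, 2.0, 3.0, 4.0])
y = np.array([2.9, 5.1, 7.0, 9.2])
X = np.column_stack([np.ones_like(x), x])

a_gd = gradient_descent(X, y)
a_ls, *_ = np.linalg.lstsq(X, y, rcond=None)
print(a_gd, a_ls)  # both close to [0.85, 2.08] (intercept, slope)
```

The learning rate must stay below 2 divided by the largest eigenvalue of the cost's Hessian (here about 16.7) for the iteration to converge; the direct solution of the normal equations avoids this tuning for small problems.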

Subsequent work will investigate applications of this fourth result through case studies of real-world phenomena.

4. Conclusions

The first step is to determine the objective function. Multiple linear regression makes it possible to determine an objective function, which can then be optimized by adjusting the influencing factors. The specification of the influencing factors required to obtain an optimal yield has been obtained using the gradient descent method and can be used in quantitative prediction processes and work.

The solution based on the least squares method coupled with multiple linear regression made it possible to determine an objective function. The specification of the influencing factors, combined with gradient descent methods, turns this objective function into a tool that supports quantitative prediction.

The use of a linear regression model, one of the supervised learning methods of artificial intelligence, is what distinguishes this work from others. The work goes beyond commonly used decision-making approaches and focuses in particular on prediction modeling for decision support systems.

This final point will be addressed in future work, which will concentrate in particular on specifying the influencing factors of the objective function, as required by the optimization process based on the gradient descent method.