A Theoretical Comparison among Recursive Algorithms for Fast Computation of Zernike Moments Using the Concept of Time Complexity

1. Introduction

Zernike polynomials are widely used in fields such as optics, astronomy, and digital image analysis. Because they are orthogonal over a circular aperture, they are able to describe any aperture of this type [1]. They have been used in wavefront reconstruction [2], object-class detection [3] [4] [5] [6], image classification [7], feature extraction [8] [9], brain tumor diagnosis [10], and even the detection of cone dimensions in keratoconus [11].

Zernike polynomials provide a mathematical description of optical aberration [12]. These polynomials have been used for more than seventy years in optical applications [13]. Moreover, they are able to describe both fixed and random aberrations [14] and to model optical surfaces such as telescope mirrors or corneal topography [15]. Zernike polynomials can determine the primary shape of an optical surface, and individual terms can be removed without affecting the values of the remaining terms [16].

Many of the applications that use Zernike polynomials are related to eye aberration [17]. Eye aberration is the distortion of human vision and is measured using the wavefront reflected at the pupil [18]. The wavefront is expressed by the measured gradients at each reflected point [19], and the various types of abnormality are classified by the Zernike polynomial set [20]. Consequently, to use these polynomials, the Zernike moments must be determined. These moments are defined as the projection of 1D (speech) or 2D (image) signals onto Zernike polynomials. One of the main issues in computing the moments is the use of factorial terms, which cause a heavy computational load and a large time complexity. This problem makes Zernike polynomials less suitable for real-time applications.

In this paper, we have studied the most popular recursive algorithms which tried to reduce the time complexity of Zernike moments [21] - [27]. We have assessed and compared their efficiency by computing the worst-case time complexity.

The paper is organized as follows. The next section describes the primary concepts related to time complexity. Section 3 introduces Zernike polynomials, Zernike moments, and the classical approach to obtaining the moments. Section 4 is devoted to some of the popular algorithms that have been proposed to decrease the time complexity of Zernike moments, and comparative results and discussion are given in Section 5. Finally, Section 6 presents the conclusions.

2. Primary Concepts of Time Complexity

Time complexity is a criterion for evaluating algorithms. It is defined as the minimum time resource that an algorithm needs in order to complete, taking all exceptional situations into account. Time complexity is classified into theoretical time complexity and practical time complexity. The latter, known as running time, can only be obtained from implemented code and applications. In addition, it depends entirely on the machine, whereas theoretical time complexity is a machine-independent estimate [28]. Consequently, practical time complexity is an unreliable criterion, since different computers have different hardware properties; in contrast, theoretical time complexity is a global, independent standard for evaluating the time efficiency of an algorithm [29]. To clarify this matter, the definitions of the two concepts are provided.

The running time of program A is the number of moves A makes (on input x) until the program is completed [30]. The moves are measured either in time units or in instruction units. In other words, to calculate the running time of a program, we count the instructions or measure the time until the program completes its function. Counting the instructions of a program is not a standard method for evaluating time efficiency, since the programming languages in which the algorithm may be implemented differ from one another [29]. In addition, the number of instruction lines may differ depending on the programmer's choices. Measuring elapsed time is not a reliable evaluation either, because hardware features vary between computers [29].

While running time is totally dependent on the program, theoretical time complexity is the number of statements executed by the algorithm on input x [31]. It is based on the raw algorithm and counts the number of the main operation until the algorithm halts [29]. Theoretical time complexity may be evaluated by the exact case, the best case, the average case, or the worst case analysis functions.

The best case, average case, and worst case time complexities are determined by the minimum, average, and maximum number of repetitions of the main operation, respectively, and the final result is a function of the size of the input string.

The worst case analysis function has been widely used to estimate the time efficiency of an algorithm. The standard notation for this assessment is Big-Oh (O) notation [32]. It ignores insignificant details in the calculations and determines a definite time bound within which an algorithm can be completed [32] [33]. As a mathematical expression, the exact time complexity $T(n)$ is of $O(g(n))$ if there are positive constants $C$ and $k$ ($k$ an integer) such that $T(n) \le C\,g(n)$ for all input string lengths $n \ge k$ [34]. In this situation, $T(n)$ is said to be Big-Oh of $g(n)$.
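As a small illustration of the Big-Oh definition, the sketch below checks a candidate pair of constants numerically. The operation count `T`, the bound `g`, and the constants `C = 4` and `k = 6` are hypothetical values chosen for this example and do not come from the paper. A finite check of this kind can support a Big-Oh claim but cannot prove it.

```python
def T(n):
    # Hypothetical exact operation count of some algorithm (assumption).
    return 3 * n**2 + 5 * n + 2


def g(n):
    # Candidate bounding function, so the claim under test is T(n) = O(n^2).
    return n**2


def holds_on_range(C, k, n_max):
    # True if T(n) <= C * g(n) for every integer n with k <= n <= n_max.
    return all(T(n) <= C * g(n) for n in range(k, n_max + 1))


# C = 4 works from k = 6 onward, but not from k = 5, because T(5) > 4 * g(5).
witness_ok = holds_on_range(C=4, k=6, n_max=10_000)
too_small_k = holds_on_range(C=4, k=5, n_max=10_000)
```

The pair `(C, k)` is not unique: any larger `C` or larger `k` also satisfies the definition, which is why Big-Oh statements discard constant factors and lower-order terms.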

Figure 1 shows the difference between the exact time complexity and the worst case time complexity, represented by a black line and a blue line respectively. As we can observe, if the exact time complexity of an algorithm

equals

, the related worst case time complexity will be

.

Another subject to discuss is the model of the calculations. Generally, there are two models for calculating theoretical time complexity [35]. A uniform model supposes that every operation takes the same constant time regardless of the size of its input, while a non-uniform model assigns each operation a computation time that depends on the length of its input [35]. Uniform models are common for algorithms whose inputs are small numbers, while a non-uniform model is recommended for evaluating algorithms with large inputs.

![]()

Figure 1. An example of the exact time complexity and the worst-case time complexity.

In this study, we use the uniform model and the worst-case time complexity to evaluate the time efficiency of the selected algorithms. The worst-case time complexity has been chosen because it gives the maximum time taken by the algorithm. The uniform model has been selected because the inputs used in Zernike moments are small. The input is the order of the polynomial, which does not exceed 15 in the literature [36]. In fact, the notable information is represented by moments up to order 15 [37]. Numbers of this size do not add a considerable cost to the computational operations, so using the uniform model is acceptable for this purpose.

3. Classical Method to Calculate Zernike Moments

Zernike polynomials were introduced by Frits Zernike in 1934 [38]. They are orthogonal over a continuous circle of unit radius [39] and are able to describe any wavefront aberration or phase function [1].

In a discrete domain, Zernike polynomials model the wavefront [1] [40] [41] using (1),

(1)

is Zernike moment in azimuthal frequency m and polynomial order n. The parameters

are the elements of the polar coordinate centered around the aperture.

must be normalized, as it is limited to the unit circle.

is the angle in polar coordinate, and

is the reconstructed wavefront. N is the maximum order used to model the wavefront. The maximum value of N is 15 in the literature [36], while in clinical diagnosis calculations go up to the fourth order [42].

represents the error of modeling and measuring the wavefront. The input noise is assumed to be i.i.d. random noise with zero mean and constant variance [40] [43].

Radial normalization is calculated using (2),

$$\rho = \frac{r}{r_{\max}} \qquad (2)$$

where $r$ is the distance between an off-center point and the center point, and $r_{\max}$ is the radius of the aperture.

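The radial function [1] [36] [39] [40] [43] is defined by (3),

$$R_n^{m}(\rho) = \sum_{s=0}^{(n-|m|)/2} \frac{(-1)^{s}\,(n-s)!}{s!\,\left(\frac{n+|m|}{2}-s\right)!\,\left(\frac{n-|m|}{2}-s\right)!}\,\rho^{\,n-2s} \qquad (3)$$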
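The classical computation of the radial function can be sketched as follows. This is a minimal sketch, assuming the standard factorial-based sum over $s = 0, \dots, (n-|m|)/2$ with terms $(-1)^s (n-s)! / \left[s!\,(\tfrac{n+|m|}{2}-s)!\,(\tfrac{n-|m|}{2}-s)!\right] \rho^{n-2s}$. The function name `radial_direct` and the convention of returning zero for invalid index pairs are choices made for this example.

```python
from math import factorial


def radial_direct(n, m, rho):
    """Radial polynomial R_n^m(rho) evaluated by the factorial-based sum."""
    m = abs(m)
    if m > n or (n - m) % 2 != 0:
        return 0.0  # convention used here: zero when n - |m| is odd or |m| > n
    total = 0.0
    for s in range((n - m) // 2 + 1):
        sign = 1 if s % 2 == 0 else -1  # (-1)^s decided by a parity check
        numerator = factorial(n - s)
        denominator = (
            factorial(s)
            * factorial((n + m) // 2 - s)
            * factorial((n - m) // 2 - s)
        )
        total += sign * numerator / denominator * rho ** (n - 2 * s)
    return total


# R_4^0(rho) = 6 rho^4 - 6 rho^2 + 1, evaluated at rho = 0.5
example_value = radial_direct(4, 0, 0.5)
```

Every term recomputes three or four factorials from scratch, which is the cost that the recursive methods in Section 4 try to avoid.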

Finally, Zernike polynomials [1] [40] are determined using (4),

(4)

is the normalization factor defined [1] [40] as follows:

(5)

is the regular delta function. We can merge (4) and (5) and rewrite Zernike polynomials [36] [39] [40] [44] as (6):

(6)

(7)

(8)

After transformation to the discrete domain, Zernike moments are determined by (8) [1]. Negative values of m double the time complexity; however, they do not change its order. Consequently, we ignore negative values of this variable in the rest of the article.

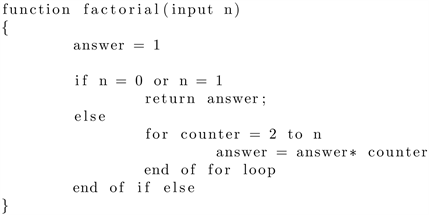

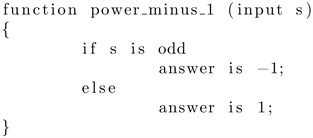

To evaluate the time complexity of Zernike moments, we first obtain the time complexity of the radial functions. The main operation differs for each term of the expression (Table 1). Each term is repeated in a loop to construct the radial function. Table 2 gives the number of repetitions for each term and the related time complexity.

![]()

Table 1. Time complexity of each term in the equation of the radial function for a single evaluation.

![]()

Table 2. Number of repetitions for each term until the algorithm halts, and the total time complexity of the expression.

The reason for taking multiplication as the main operation of the factorial terms, and comparison as the main operation of $(-1)^s$, can be explained as follows. To calculate $n!$, the following algorithm is used:

As we can observe, a compare operation checks whether $n = 0$ or $n = 1$, and this comparison is the main operation in those two cases. If $n > 1$, the loop starts and multiplication becomes the main operation.

The other term is $(-1)^s$. The reason is that $(-1)^s$ can be implemented easily, without any extra calculation: it only needs a comparison operation to produce the result.
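The choice of main operation for these two terms can be sketched in code. The listing below is an illustration, assuming an iterative factorial with one initial check and a sign term decided by parity; the function names and the way operations are counted are assumptions made for this example, not the paper's original listings.

```python
def factorial_with_count(n):
    """Return (n!, compare steps, multiplications) for an iterative factorial."""
    comparisons = 1        # the initial check "n is 0 or 1", counted as one step
    multiplications = 0
    if n == 0 or n == 1:
        return 1, comparisons, multiplications
    result = 1
    for i in range(2, n + 1):
        result *= i        # main operation once the loop starts
        multiplications += 1
    return result, comparisons, multiplications


def sign_by_parity(s):
    """Return (-1)^s using a single parity comparison and no multiplication."""
    return 1 if s % 2 == 0 else -1
```

For `n > 1` the loop performs `n - 1` multiplications, so the cost of a factorial grows linearly with its argument, while the sign term stays at one comparison regardless of `s`.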

To compute a complete set of radial functions, the equation needs

,

. Then, we calculate the total

time complexity which is independent of m as follows:

(9)

N is the maximum order used by the algorithm. To obtain the total time complexity for each n, we have added a summation expression to

.

Summation limitation is in

and we have replaced m with:

(10)

Then, we have used i instead of m.

is the remainder when n is divided by 2, which can be ignored.

Finally, a complete set of radial functions could be computed in

for each point of the image. According to (6),

has the same worst-case time complexity with

. As a result, a complete set of Zernike polynomials has the worst-case time complexity of

for a

image.

As we can observe, the factorial terms and power terms can be obtained recursively by $n! = n \cdot (n-1)!$ and $\rho^{n} = \rho \cdot \rho^{n-1}$.

4. Fast Methods to Calculate Zernike Moments

In this section, we study seven of the best-known recursive methods that attempt to reduce the time complexity of Zernike moments. We have selected recursive methods because of their success in this aim.

4.1. Kintner Method

In 1976, Kintner presented his method for calculating radial functions, which uses a pure recursive relationship with three terms [21]. This recursive function is given by:

(11)

And the coefficients

are defined using:

(12)

and

are calculated through the classical method.

To start with the coefficients, multiplication is the main operation.

need 5, 3, 5, 6 multiplications respectively. Division, exponentiation, and multiplication by −1 are counted as multiplications as well. Each

has 6 multiplications in its equation. As a result, to calculate the time complexity of

, we need 25 multiplications. As we can see in Figure 2,

can be obtained when

and

are determined. Thus we can obtain

by (13)

(13)

If we sketch the binary tree of the time complexity (Figure 2), it is clear that there are $2^0$ nodes in the first level, $2^1$ nodes in the second level, $2^2$ nodes in the third level, and in general

nodes in the layer before the last layer. Thus,

![]()

Figure 2. Kintner method, the binary tree of the time complexity, top-down programming.

we have

nodes. Each node needs 25 multiplications. Therefore, we have

by (14):

(14)

And we have (15) for

and

:

(15)

The final T(N) includes the time complexity for

and the time complexity of

as well. However, these two terms don’t change the order of the worst-case time complexity. Consequently, the time complexity would be of

. To be more precise in the time complexity of

, we only need

. If we calculate the time complexity of each operation and total them together, the time complexity would be

. For

, we use

and

. As a result, the time complexity of

is

. After calculating

and

, each radial function needs 25 multiplications as the main operator. For each m we have (16)

(16)

Obviously, for

the time complexity is the same as that of the classical method. The time complexity of the Kintner algorithm depends on the programming style. The previous calculations relate to the top-down mode. However, if we use the bottom-up programming approach,

and

are obtained using the classical method and the time complexity is of

for them. Then,

are calculated and

is computed sequentially, with no redundant computation. The related binary tree is provided in Figure 3.

Finally, the time complexity for the complete set of Zernike moments will be

of

and the exact value equals

.
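The bottom-up evaluation described above can be sketched as follows. The three-term recurrence and the coefficients `k1` to `k4` are written in the form in which Kintner's relation is commonly quoted in the literature, and are assumed here to correspond to (11) and (12). The closed forms used for the two starting values replace the classical computation mentioned in the text, and the function name is hypothetical.

```python
def kintner_radials(N, m, rho):
    """Bottom-up table {n: R_n^m(rho)} for n = m, m + 2, ..., up to N."""
    R = {}
    if m > N:
        return R
    R[m] = rho ** m  # starting value R_m^m = rho^m
    if m + 2 <= N:
        n = m + 2
        # starting value R_{m+2}^m = (m + 2) rho^(m+2) - (m + 1) rho^m
        R[n] = n * rho ** n - (n - 1) * rho ** m
    for n in range(m + 4, N + 1, 2):
        # Coefficients of the three-term relation (assumed form, see lead-in).
        k1 = (n + m) * (n - m) * (n - 2) / 2
        k2 = 2 * n * (n - 1) * (n - 2)
        k3 = -m * m * (n - 1) - n * (n - 1) * (n - 2)
        k4 = -n * (n + m - 2) * (n - m - 2) / 2
        # Each new order reuses the two previously stored orders exactly once.
        R[n] = ((k2 * rho * rho + k3) * R[n - 2] + k4 * R[n - 4]) / k1
    return R
```

Because every stored value is read rather than recomputed, the number of recurrence steps for a fixed `m` grows linearly with `N`, in contrast to the exponential node count of the top-down tree.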

4.2. Prata Method

In another approach, Prata proposed a recursive relationship for radial functions in 1989 [22] to expand Zernike polynomial functions. In this method, the coefficients are evaluated by a 2-D integration formula that results from the orthogonality of the Zernike polynomials. The algorithm calculates the higher-order radial functions from the lower orders using:

(17)

![]()

Figure 3. Kintner method, the binary tree of the time complexity, bottom-up programming.

which

and

are constants computed by:

(18)

The algorithm cannot be used in the cases

and

. In these cases, radial functions have to be calculated using a different method such as the classical approach.

Like Kintner algorithm, the time complexity of this method depends on the programming style. By top-down programming style, we may identify

by drawing the binary tree of the calculations, which is shown in Figure 4.

and

need 3 and 2 multiplications respectively. As a result,

needs 7 multiplications to be obtained in each level of the tree. We have

nodes in level i of the tree until the level p:

. Then, the number of nodes will decrease until we have

and/or

in the terminals.

Considering the first part of the calculations, we can write the equation below

if we consider

:

(19)

When we consider the calculation trees of top down programming for both

![]()

Figure 4. Prata method, the binary tree of the time complexity, top down programming.

Prata and Kintner methods, we can observe that each element acts as a local formula that has to reach the lower-level elements inside itself. Since these lower-level elements are not calculated globally, they have to be recomputed for every higher-level element, which causes redundancy. These repetitions reduce the time efficiency. For this reason, we do not continue the top-down calculations in the rest of the article.
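The redundancy of top-down evaluation can be illustrated by counting nodes. The sketch below assumes a two-branch recurrence in which the element with indices (n, m) depends on (n − 1, m − 1) and (n − 2, m), with elements where m = 0 or n = m treated as terminals. That dependency pattern is the commonly quoted shape of Prata's relation and is an assumption here, as are the function names. Only the number of evaluations is counted, not the radial values themselves.

```python
def count_top_down_calls(n, m):
    """Nodes visited by a naive top-down recursion (n >= m, n - m even)."""
    if m == 0 or n == m:
        return 1  # terminal element, obtained without further recursion
    # One node for this element plus the full subtrees of both dependencies.
    return 1 + count_top_down_calls(n - 1, m - 1) + count_top_down_calls(n - 2, m)


def count_distinct_elements(n, m):
    """Distinct (n, m) pairs needed when each element is computed only once."""
    seen = set()

    def visit(p, q):
        if (p, q) in seen:
            return
        seen.add((p, q))
        if q == 0 or p == q:
            return
        visit(p - 1, q - 1)
        visit(p - 2, q)

    visit(n, m)
    return len(seen)


naive_nodes = count_top_down_calls(10, 4)
distinct_nodes = count_distinct_elements(10, 4)
```

The gap between the two counts is the work that a bottom-up order, or any scheme that stores intermediate elements globally, avoids.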

In Figure 5 we have sketched the binary tree of the time complexity, considering bottom-up programming. As we can see,

(horizontal

level on top) and

(vertical level on left) are needed as the

basic elements. If we consider (3), we only have

for

. Therefore:

(20)

There are four multiplications as the main operation and we have:

(21)

For

, we can use the last row of Table 2:

(22)

![]()

Figure 5. Prata method, the binary tree of the time complexity, bottom-up programming.

For the rest of the radial polynomials, each

needs 7 multiplications.

are acquired using the basic elements. We have

radial functions in this layer. The next step of the loop is quantifying

(

elements). This process continues to the last layer. In the last layer, the

only radial function is

. If N is odd,

, otherwise, it

calculates only one more radial function, which does not affect the order of the time complexity at all. As a result,

could be acquired by (23) and Zernike moments are obtained with the time complexity of

.

(23)

Therefore, for the whole wavefront

.
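A bottom-up evaluation in the spirit of Figure 5 can be sketched as follows. The recurrence and its two coefficients are written in the form commonly quoted for Prata's relation and are assumed to correspond to (17) and (18). The basic elements are taken as the `m = 0` column, computed by the direct factorial sum, and the diagonal `n = m`, computed as a power of `rho`. All function and variable names are hypothetical.

```python
from math import factorial


def radial_m0(n, rho):
    """R_n^0(rho) by the direct factorial sum (n must be even)."""
    half = n // 2
    return sum(
        (-1) ** s * factorial(n - s)
        / (factorial(s) * factorial(half - s) ** 2)
        * rho ** (n - 2 * s)
        for s in range(half + 1)
    )


def prata_radials(N, rho):
    """Bottom-up table {(n, m): R_n^m(rho)} for 0 <= m <= n <= N, n - m even."""
    R = {}
    for n in range(N + 1):
        R[(n, n)] = rho ** n                 # basic elements on the diagonal
        if n % 2 == 0:
            R[(n, 0)] = radial_m0(n, rho)    # basic elements in the m = 0 column
    for n in range(3, N + 1):
        for m in range(1, n - 1):
            if (n - m) % 2:
                continue
            # Coefficients of the recurrence (assumed form, see lead-in).
            l1 = 2 * rho * n / (n + m)
            l2 = -(n - m) / (n + m)
            R[(n, m)] = l1 * R[(n - 1, m - 1)] + l2 * R[(n - 2, m)]
    return R
```

Iterating over `n` in increasing order guarantees that both dependencies of each element are already stored, so every non-basic element costs a fixed number of multiplications.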

4.3. Belkasim Method

In 1996, Belkasim et al. [23] expanded the complex equation of Zernike moments to obtain a recursive relationship, which is shown below:

(24)

(25)

(26)

In this approach, radial functions are calculated by (24), Zernike moments are determined using (25), and

is realized by (26).

To obtain

, we have to analyze the main operations. In this equation, the main operation is multiplication. There are 5 multiplications in the main equation. We also suppose that factorial terms are calculated recursively, since

the article did not mention any specific method. To calculate

, we only need a compare operation to check if

is an odd or even number. As a

result, each

could be obtained in:

(27)

For each

,

and

,

are needed. There are

multiplications in the main equation as well. Then, we may obtain

,

, and

by:

(28)

Then, the time complexity for the complete set of

is of

and as a result, the algorithm is of

.

4.4. Q-Recursive Method

Chong and his colleagues recommended a method for fast calculation of Zernike moments which is known as q-recursive method [24]. This algorithm uses recursive equations to compute radial functions. The recursiveness is based on m and does not change n in the right side of equation. To determine radial functions, Q-recursive approach follows:

(29)

For the

or

we have:

(30)

The coefficients are obtained by:

(31)

As the algorithm mentioned the bottom-up programming style as the main style of the method, we immediately go through this style. The first step is to calculate the initial elements

. The time complexity of

is n. As a result, for the complete set of

:

(32)

In the next level,

can be reached and needs 2 multiplications. Then,

. The complete set of

will be obtained in:

(33)

Finally,

, will be reached (Figure 6) using the obtained elements.

For each

and

,

and

must be calculated. They need 6, 4, and 4 multiplications (the main operation) respectively. In addition, there are 3 multiplications in the main Equation (29) and the time complexity of

is 2. Therefore, for each

:

(34)

Consequently,

would be (we suppose n is even, however, there is the same process for odd values of n):

(35)

And

is calculated by:

(36)

![]()

Figure 6. Q-recursive method, the binary tree of the time complexity, bottom-up programming.

Therefore, the total time complexity would be:

(37)

As a result, the worst case time complexity of the algorithm is of

for the complete set of radial functions, and for Zernike moments this value is of

.
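The bottom-up q-recursive scheme can be sketched for a single order `n` as follows. The recurrence and the coefficients `h1`, `h2`, `h3` follow the form in which the q-recursive relation is commonly quoted in the literature and are assumed to correspond to (29) to (31). The function name is hypothetical, and the sketch requires `rho > 0` because the recurrence divides by `rho**2`.

```python
def q_recursive_radials(n, rho):
    """Table {m: R_n^m(rho)} for fixed n and m = n, n - 2, ..., down to 0 or 1."""
    if rho <= 0:
        raise ValueError("this sketch divides by rho**2 and needs rho > 0")
    R = {n: rho ** n}                                    # initial element R_n^n
    if n >= 2:
        # R_n^(n-2) = n R_n^n - (n - 1) R_(n-2)^(n-2), with R_(n-2)^(n-2) = rho^(n-2)
        R[n - 2] = n * R[n] - (n - 1) * rho ** (n - 2)
    for m in range(n, 3, -2):                            # each step yields R_n^(m-4)
        h3 = -4 * (m - 2) * (m - 3) / ((n + m - 2) * (n - m + 4))
        h2 = h3 * (n + m) * (n - m + 2) / (4 * (m - 1)) + (m - 2)
        h1 = m * (m - 1) / 2 - m * h2 + h3 * (n + m + 2) * (n - m) / 8
        R[m - 4] = h1 * R[m] + (h2 + h3 / rho ** 2) * R[m - 2]
    return R
```

Only values of the same order `n` appear on the right-hand side, so the tables for different orders can be computed independently of one another.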

4.5. Wee Method

In 2004, Wee, Paramesran, and Takeda proposed an approach for the complete set of Zernike moments that merges the Kintner, Prata, and q-recursive algorithms [25]. The main formula is the recursive formula of the Prata method. However, as noted above, there are cases in which the Prata algorithm is unusable. In these cases, the Kintner and q-recursive methods are used instead. In the Wee method, radial functions are obtained by:

(38)

(39)

and

are the coefficients of Prata approach, which have been defined by (18).

is calculated by (39).

To consider the time complexity, we need an initial computation of

. For

, we have

and the complete time complexity would be:

(40)

The next step is calculating

which needs 2 multiplications and therefore,

. Then, we have to obtain

each contains

. The time complexities of the coefficients are 4, 10, 6 respectively if we consider multiplication as the main operation. The time complexity of

is 2, and the formula contains 3 multiplications itself. Therefore, there are 25 multiplications required to compute each

and the complete set of it could be obtained using:

(41)

When

and

, the algorithm uses Prata method. Each

needs 7 multiplications. Thus, considering

, we could write:

(42)

And the total time complexity will be

(43)

As a result, the final time complexity is of

.

4.6. Amayeh Method

Amayeh and his colleagues designed an algorithm to calculate Zernike moments and claimed that their method needs fewer time resources than the classical approach [26]. This method uses the complex relationship of Zernike moments and obtains

by:

(44)

is identified as the common term of (44), which has a unified repetition. For example, for

we have Table 3. The method is similar to the Belkasim algorithm. As we observed previously, the bottleneck that increased the time complexity of the Belkasim algorithm was

. This expression has been repeated in Amayeh method as well.

We consider multiplication as the main operation. For each

, there are

multiplications. In addition, it is only needed to calculate

for

each

. Thus, we can write:

![]()

Table 3. The process of Zernike moments calculation in n = 10; m = 0.

(45)

For the complete set of Zernike moments, we have:

(46)

The time complexity of

is a constant value. Therefore, we can rewrite:

(47)

Therefore, it does not affect the order of the time complexity and the final

is of

.

4.7. Modified Prata Method

Singh and Walia proposed a modification of Prata algorithm [27] and combined (17) and (18) to:

(48)

The basic elements are

and the time complexity of

is

. Therefore, for the complete set of radial functions,

is obtained by:

(49)

In the next layer, the method produces

. To produce

, we need 7 multiplications as the main operation. The tree of radial function determination is represented in Figure 7. We have

movements to obtain

and

movements to determine

.

It would continue to the last layer with the only member

. As a result,

equals:

(50)

and the complexity of this algorithm is of

.

![]()

Figure 7. The tree of radial function determination in modified Prata method.

5. Results and Discussion

In this paper, we have studied several approaches that attempt to decrease the time complexity of Zernike moments. We have used the worst-case time complexity criterion and the uniform model to evaluate the time efficiency of the presented algorithms. As the results in Table 4 show, the classical method has a worst-case time complexity of

. The bottleneck of the complexity has been created by the factorial terms that must be calculated each time and some of the studied approaches tried to remove these terms.

In general, the time complexities of the Kintner, Prata, Q-recursive, Wee, and modified Prata approaches depend on the programming style. However, as discussed before, top-down programming causes redundancy and excess computation of the elements. The most successful approaches, in terms of time complexity order, are the Kintner, Q-recursive, Wee, and modified Prata algorithms. These methods could halve the order of the time complexity.

The Prata method also succeeded in reducing the time complexity. However, its worst-case time complexity is higher than that of the algorithms mentioned in the previous paragraph.

Neither the Belkasim nor the Amayeh approach could reduce the order of the time complexity in calculating Zernike moments. However, the Belkasim method slightly reduced the coefficient of the highest-order term from 0.07 to 0.02, as calculated above. The Amayeh method increased this coefficient compared to the classical approach.

Therefore, the main competition is among Kintner, Prata, Q-recursive, Wee, and modified Prata algorithms. This competition is in the coefficient of term that has the largest order.

The Wee and modified Prata approaches have the smallest coefficient in their highest-order terms, which is 2.25. For an exact comparison, we write an inequality supposing that the time complexity of the Wee method is larger than that of the modified Prata algorithm, and check whether our assumption

![]()

Table 4. The result of worst-case time complexity for studied recursive algorithms.

is correct or not:

(51)

Therefore if

, the time complexity of Modified Prata approach is more efficient than Wee method’s.

As mentioned previously, the uniform model is used to evaluate the studied algorithms in this article. Although the uniform model is a popular model for evaluating the time complexity of algorithms, one of its disadvantages is that it supposes a uniform cost (a time cost in this study) for all operations on operands of every size, while different operations do not have the same cost on a binary machine. For example, if we consider the binary products 1 × 1 and 11 × 1, the former takes

and the time complexity of the latter production is

, while the main operation is binary production in both.

This discussion leads us to consider evaluating the algorithms with the logarithmic cost model, which assumes that the cost of every operation is a function of the number of input bits (39).
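The difference between the two cost models can be sketched for a single multiplication. The logarithmic cost used below, the product of the operand bit lengths, is one simple choice that mimics schoolbook binary multiplication. It is an assumption made for illustration and does not reproduce the specific costs given in the text; the function names are hypothetical.

```python
def uniform_cost(a, b):
    """Uniform model: every multiplication costs one unit, whatever the operands."""
    return 1


def logarithmic_cost(a, b):
    """Product of operand bit lengths, an illustrative schoolbook-style estimate."""
    # bit_length() is 0 for the integer 0, so each operand counts as at least one bit.
    return max(a.bit_length(), 1) * max(b.bit_length(), 1)


small_product = logarithmic_cost(0b1, 0b1)    # binary 1 x 1
larger_product = logarithmic_cost(0b11, 0b1)  # binary 11 x 1
```

Under the uniform model both products cost the same, while under this bit-length estimate the second product is charged more because one operand has more bits.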

Another subject to be considered is spatial complexity, which relates to the memory space that algorithms occupy while they are running. Spatial complexity could be derived by a computational process similar to the one in this study, applied to storage instead of time.

6. Conclusions

In this study, we have evaluated seven algorithms that attempt to decrease the time complexity of Zernike moments. Our assessment uses the worst-case time complexity criterion and the uniform cost model. As a brief comparison of the studied algorithms, the following points can be made:

- The algorithms that removed the factorial functions in their equations, were successful in reducing the order of the worst-case time complexity.

- The Belkasim and Amayeh approaches, which kept factorial terms in their equations, did not succeed in decreasing the order of the time complexity, even though the coefficient of the highest-order term was reduced in the Belkasim method. The barrier that caused these algorithms to fail was the use of factorial terms in their recursive relationships.

- Both Kintner and Prata approaches have limitations in their computations. Kintner’s method is limited to

[45]. Similarly, Prata algorithm is not usable in

and

and classical method must be used in these cases. However, the linear relationships, which enable us to obtain higher order moments from lower orders, may be an advantage of this method [24].

- In Q-recursive method, moments of each order are independent of moments in higher or lower orders which makes it useful for real time and parallel applications. This characteristic lets the whole set of Zernike moments of each order be separately calculated in a loop without any duplicated computations. This characteristic can be observed by drawing the tree of time complexity related to the algorithm in which branches are sequenced instead of being parallel.

- In the Wee and modified Prata algorithms, factorial terms have been completely removed from the main equation and replaced with product terms, the equations contain only a small number of multiplications, and the relationships have become linear. As a result, fewer computations are performed and the efficiency of the algorithms improves. In fact, these two approaches have the smallest coefficient of the highest-order term among the studied algorithms. However, the modified Prata algorithm performs better in terms of time complexity for

.

- In general, recursive approaches are entirely dependent on programming style. However, the top-down programming style generates excessive steps that must be repeated for each related radial function.

There are other aspects of these algorithms to study. One is the time complexity under a non-uniform model. While the uniform model assumes the same cost for all operations and operands, even those holding large values, non-uniform models show how the time complexity reacts to different numbers and operations. Applying these models to the theoretical time complexity of Zernike moments is a possible direction for future work.

Even though computers have a large amount of memory nowadays, another issue is the space complexity of each algorithm, which relates to the amount of memory that the algorithm needs in order to complete. For instance, in some methods, if the factorial terms are saved in a table before the algorithm starts, the time complexity decreases markedly. However, the device must dedicate a certain amount of memory to the algorithm for it to complete, which may make the device more expensive. The question is how to balance space complexity and time complexity so that the result is both real-time and cost-effective.
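The trade-off between stored factorials and repeated computation can be sketched as follows. This is an illustration, assuming the standard factorial-based radial sum, a maximum order of 15, and valid indices with 0 ≤ m ≤ n and n − m even. The names `build_factorial_table`, `FACT`, and `radial_with_table` are hypothetical.

```python
def build_factorial_table(max_n):
    """Precompute 0!, 1!, ..., max_n! using one multiplication per entry."""
    table = [1] * (max_n + 1)
    for i in range(1, max_n + 1):
        table[i] = table[i - 1] * i
    return table


FACT = build_factorial_table(15)  # 16 stored integers cover orders up to 15


def radial_with_table(n, m, rho, fact=FACT):
    """R_n^m(rho) with factorials read from the table instead of recomputed."""
    total = 0.0
    for s in range((n - m) // 2 + 1):
        sign = 1 if s % 2 == 0 else -1
        coeff = fact[n - s] / (
            fact[s] * fact[(n + m) // 2 - s] * fact[(n - m) // 2 - s]
        )
        total += sign * coeff * rho ** (n - 2 * s)
    return total
```

The table costs `max_n` multiplications once and `max_n + 1` stored integers, after which every factorial in the radial sum becomes a constant-time lookup.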

Compliance with Ethical Standards

This article does not contain any studies with human participants or animals performed by any of the authors.